When the processes running on your machine attempt to allocate more memory than your system has available, the kernel begins to swap memory pages to and from the disk. This is done in order to free up sufficient physical memory to meet the RAM allocation requirements of the requestor.

Excessive use of swapping is called thrashing and is undesirable because it lowers overall system performance, mainly because hard drives are far slower than RAM.

If your application needs to use a large amount of data, you will be exposed to thrashing and your application could slow dramatically. Two solutions exist: either optimize your applications to use memory more efficiently, or add more physical RAM to the system.

Using a cache is a popular way to optimize your memory usage. The idea is to store in a cache the data loaded from the disk; this cache will contains many slots, each one containing a specific piece of data, and some slots will be released if the cache size exceeds a certain value.

The performance of the cache depends on:

This scenario occurs when you use a large amount of data stored in the disk, and when you load this data into the memory, your application becomes slow. When you search for the cause, you discover that your application consumes a lot of memory. You decide to free the data as soon as you finish using it, but the application is still slow, and this time it's due to thrashing. Indeed you need many times to reload the data released.

Let's take a look inside the Irrlicht engine using CppDepend and discover some facts about the cache used.

The Irrlicht Engine is an open-source high performance realtime 3D engine written in C++. And as every game engine it manages mesh classes.

A mesh is a collection of polygons. Each polygon is stored in memory as an ordered list of 3D points. In order to create a 3D cube mesh we would need to specify the eight corner points of the cube in 3D space. Each polygon could then be defined using four of eight points.

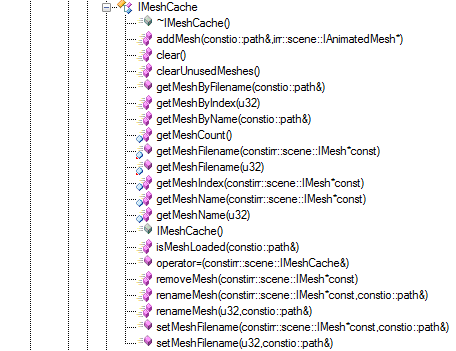

Meshes are stored in disk, and they must be loaded into memory to work with. Irrlicht provides the IMeshCache interface to define the cache contract, and the CMeshCache implement it.

This interface provides many useful methods to deal with the cache, and to discover which container is used let's search for methods invoked by the AddMesh method:

Irrlicht use the templated custom container irr::core::array<t,talloc>, this container is designed to be performent to store and search for meshs by index and name.

Here are for example the methods invoked by getMeshByName

The cache use the binary search algorithm, to improve the performence of the cache when searching mesh by name.

What about the algorithm used to free meshes?

No algorithm is provided from Irrelicht engine to release the cache, indeed it depends on the game logic using the engine, and it's the reponsibility of the Irrlicht user to decide when to free a mesh.

In this case the data used is not stored in the disk, but it's calculated after some process treatments.

Let's take as example the Doxygen tool, which is a useful tool for generating documentation from annotated C++ sources.

Doxygen take as entry source files to parse, and after a lexical analysis, many datas are extracted from these files to be stored in the memory to treat them and generate the documentation.

If you parse a big project, the data extracted from the sources become very big, and it would consume a lot of memory, and to resolve this issue Doxygen uses a cache to store these data.

Doxygen gets the cache max size from the configuration file:

It's a good idea to let this parameter be configurable, so you can increase the cache if you have a machine with a big physical memory size to increase the cache performence. However for the newer Doxygen releases this parameter is removed from the config file, and a default value is used.

And here's the container used by Doxygen:

What about the algorithm to free entries from cache?

The Doxygen developers could decide the optimized algo to release the cache, it depends on how they implemented the parser; here's the code snippet from Doxygen code source responsible for releasing the cache when it reaches its maximum:

As specified in the makeResident method code, the least recently used item is removed if the cache is full.

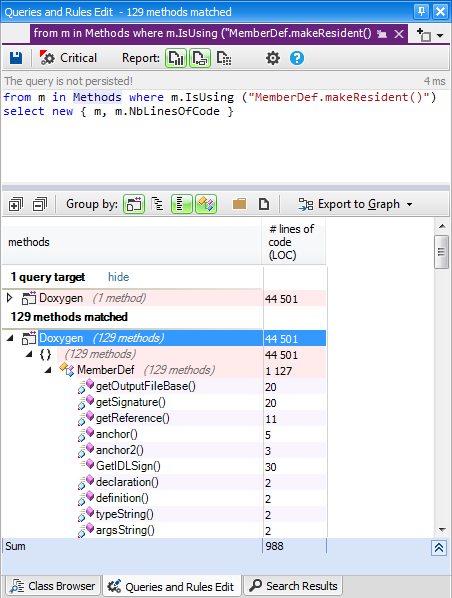

Let's discover where this method is invoked:

This method is invoked for almost all MemberDef methods, it's called each time you have to access the MemberDef state to check if this member is loaded or not. Load it if it's not the case and remove the least recently used member from the cache.

Using the cache could increase the performence of your application, but did the cache bring a big optimisation or just a micro optimisation and it's not worth to add it in your application?

Let's take as example the Doxygen project, I modified its code to not use the cache and I parsed some C++ projects with this modified version. And surprisingly the parsing time has much increased, sometimes from 5 min to 25 min.

Using the cache is a powerful technique to increase the performance if you use a large amount of data. Discovering how open-source projects implement the cache could be very useful to understand how to implement it in your applications.

Excessive use of swapping is called thrashing and is undesirable because it lowers overall system performance, mainly because hard drives are far slower than RAM.

If your application needs to use a large amount of data, you will be exposed to thrashing and your application could slow dramatically. Two solutions exist: either optimize your applications to use memory more efficiently, or add more physical RAM to the system.

Using a cache is a popular way to optimize your memory usage. The idea is to store in a cache the data loaded from the disk; this cache will contains many slots, each one containing a specific piece of data, and some slots will be released if the cache size exceeds a certain value.

The performance of the cache depends on:

- The container: it could be a queue, an array, a list or maybe a custom container. The choice of one of these containers could impact your cache performance a lot.

- The max size of the cache.

- The algorithm used to free entries from the cache: When the cache reaches its maximum, you have to decide which entries to release, for example you could:

- Release the first slots loaded.

- Release the last slots loaded.

- Release the less-used slots.

Scenario 1: The application uses a big amount of data stored in disk (Irrlicht engine)

This scenario occurs when you use a large amount of data stored in the disk, and when you load this data into the memory, your application becomes slow. When you search for the cause, you discover that your application consumes a lot of memory. You decide to free the data as soon as you finish using it, but the application is still slow, and this time it's due to thrashing. Indeed you need many times to reload the data released.

Let's take a look inside the Irrlicht engine using CppDepend and discover some facts about the cache used.

The Irrlicht Engine is an open-source high performance realtime 3D engine written in C++. And as every game engine it manages mesh classes.

A mesh is a collection of polygons. Each polygon is stored in memory as an ordered list of 3D points. In order to create a 3D cube mesh we would need to specify the eight corner points of the cube in 3D space. Each polygon could then be defined using four of eight points.

Meshes are stored in disk, and they must be loaded into memory to work with. Irrlicht provides the IMeshCache interface to define the cache contract, and the CMeshCache implement it.

This interface provides many useful methods to deal with the cache, and to discover which container is used let's search for methods invoked by the AddMesh method:

Irrlicht use the templated custom container irr::core::array<t,talloc>, this container is designed to be performent to store and search for meshs by index and name.

Here are for example the methods invoked by getMeshByName

The cache use the binary search algorithm, to improve the performence of the cache when searching mesh by name.

What about the algorithm used to free meshes?

No algorithm is provided from Irrelicht engine to release the cache, indeed it depends on the game logic using the engine, and it's the reponsibility of the Irrlicht user to decide when to free a mesh.

Scenario 2: The application uses a big ammount of calculated data in memory (Doxygen)

In this case the data used is not stored in the disk, but it's calculated after some process treatments.

Let's take as example the Doxygen tool, which is a useful tool for generating documentation from annotated C++ sources.

Doxygen take as entry source files to parse, and after a lexical analysis, many datas are extracted from these files to be stored in the memory to treat them and generate the documentation.

If you parse a big project, the data extracted from the sources become very big, and it would consume a lot of memory, and to resolve this issue Doxygen uses a cache to store these data.

Doxygen gets the cache max size from the configuration file:

int cacheSize = Config_getInt("SYMBOL_CACHE_SIZE");

It's a good idea to let this parameter be configurable, so you can increase the cache if you have a machine with a big physical memory size to increase the cache performence. However for the newer Doxygen releases this parameter is removed from the config file, and a default value is used.

And here's the container used by Doxygen:

Doxygen::symbolCache = new ObjCache(16+cacheSize); // 16 -> room for 65536 elements,

What about the algorithm to free entries from cache?

The Doxygen developers could decide the optimized algo to release the cache, it depends on how they implemented the parser; here's the code snippet from Doxygen code source responsible for releasing the cache when it reaches its maximum:

void MemberDef::makeResident() const

{

if (m_cacheHandle==-1) // not yet in cache

{

MemberDef *victim = 0;

MemberDef *that = (MemberDef*)this; // fake method constness

that->m_cacheHandle = Doxygen::symbolCache->add(that,(void **)&victim);

//printf("adding %s to cache, handle=%d\n",m_impl->name.data(),that->m_cacheHandle);

if (victim) // cache was full, victim was the least recently used item and has to go

{

victim->m_cacheHandle=-1; // invalidate cache handle

victim->saveToDisk(); // store the item on disk

}

else // cache not yet full

{

//printf("Adding %s to cache, handle=%d\n",m_impl->name.data(),m_cacheHandle);

}

if (m_storagePos!=-1) // already been written to disk

{

if (isLocked()) // locked in memory

{

assert(m_impl!=0);

that->m_flushPending=FALSE; // no need to flush anymore

}

else // not locked in memory

{

assert(m_impl==0);

loadFromDisk();

}

}

}

else // already cached, make this object the most recently used.

{

assert(m_impl!=0);

//printf("Touching symbol %s\n",m_impl->name.data());

Doxygen::symbolCache->use(m_cacheHandle);

}

}

As specified in the makeResident method code, the least recently used item is removed if the cache is full.

Let's discover where this method is invoked:

This method is invoked for almost all MemberDef methods, it's called each time you have to access the MemberDef state to check if this member is loaded or not. Load it if it's not the case and remove the least recently used member from the cache.

The impact of using the cache

Using the cache could increase the performence of your application, but did the cache bring a big optimisation or just a micro optimisation and it's not worth to add it in your application?

Let's take as example the Doxygen project, I modified its code to not use the cache and I parsed some C++ projects with this modified version. And surprisingly the parsing time has much increased, sometimes from 5 min to 25 min.

Conclusion

Using the cache is a powerful technique to increase the performance if you use a large amount of data. Discovering how open-source projects implement the cache could be very useful to understand how to implement it in your applications.