This subject is an incredibly important one that gets a lot less attention than it should. Most videogame development guides and tutorials fail to acknowledge that

communication is a game development skill.

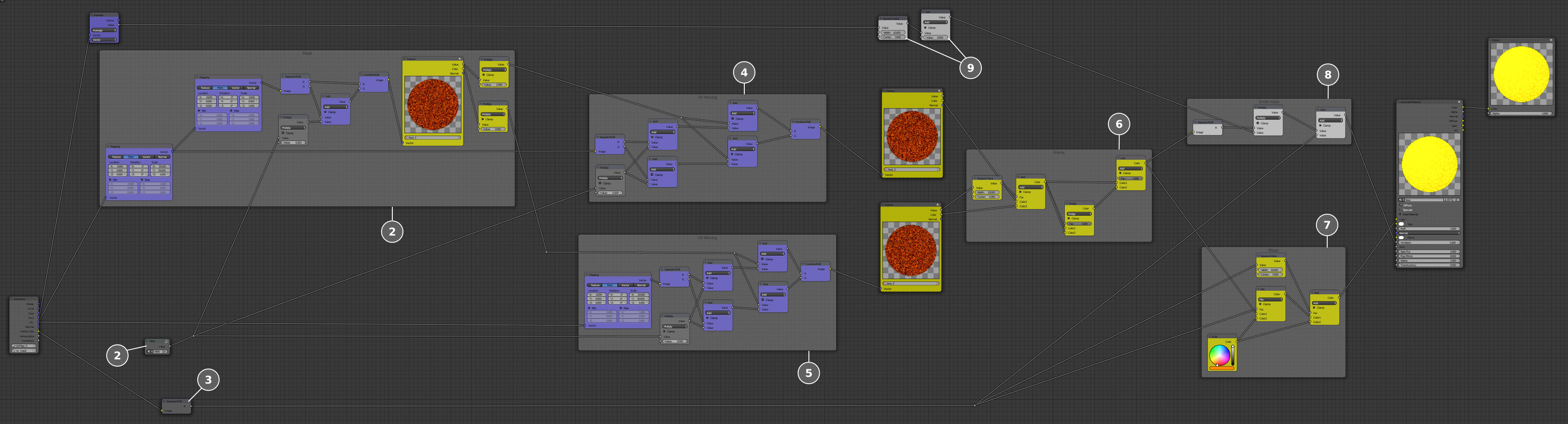

Knowing how to speak and write effectively is right up there alongside knowing how to program, use Photoshop, follow design process, build levels, compose music, or prepare sounds. As with any of those skills there are techniques to learn, concepts to master, and practice to be done before someone’s prepared to bring communication as a valuable skill to a team.

Game Developers Especially

Surely, everyone in the world needs to communicate, and could benefit from doing it better. Why pick out game developers in particular?

“…sometimes when people are fighting against your point, they’re not really disagreeing with what you said. They’re disagreeing with how you said it!”

I don’t meant to claim that only game developers have communications issues. But after spending much of the past ten years around hundreds of computer science students, indie developers, and professional software engineers, I can say that there are particular patterns to the types of communication issues most common among the game developers that I’ve met. This is also an issue of particular interest to us because it’s not just a matter of making the day go smoother; our ability to communicate well has a real impact on the level of work that we’re able to accomplish, collaboratively and even independently. Game developers often get excited about our work, for good reason, but whether a handful of desirable features don’t make it in because of technical limitations or because of communication limitations, either way the game suffers for it the same.

Whose Job is It?

If programmers program, designers design, artists make art, and audio specialists make audio, is there a communication role in the same way?

There absolutely is. There are several, even.

The Producer. Even though on small hobby or student teams this is often wrapped into one of the other roles, the producer focuses on communication between team members, and between team members and the outside world. Sometimes this work gets misunderstood as just scheduling, but for that schedule to get planned and adjusted sensibly requires a great deal of conversations and e-mails, followed by ongoing communications to keep everyone on the same page and on track.

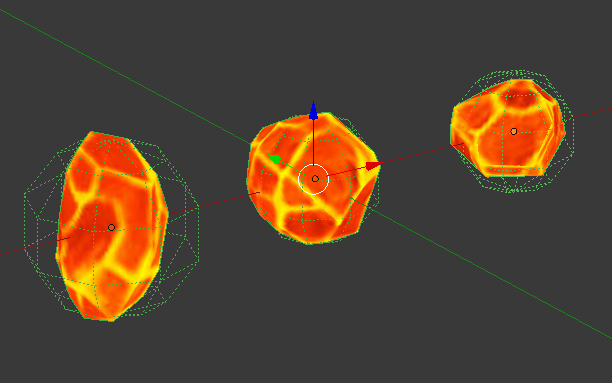

The Designer(s). One way to think about the designer’s role in game development is to communicate with the player through the game. Indicating what’s the goal, what will cause harm or benefit, where the player should or shouldn’t try to go next, expressing the right amount of internal state information – these are matters of a game’s design more so than its programming. Depending on a game team’s skill makeup, in some cases the designer’s only direct work with the game is in level layouts or value tuning, making it even more critical that within the team a designer can communicate well with programmers, artists, and others on the team when and where the work intersects. On a small team when the person mostly responsible for the design is also filling one or more other roles (often the programming) communication then becomes integral to keeping others involved in how the game takes shape.

The Leads. On a team large enough to have leads, which is common for a professional team, the Lead Programmer, Lead Designer, or Lead Artist also have to bring top notch communication skills to the table. Those people aren’t necessarily the lead on account of being the best programmer, designer, or artist – though of course they do need to be skilled – they’re in that position because they can also lead others effectively, which involves a ton of communication in all directions: to the people they lead, from the people they lead, even mediating communications between people they lead or the people they lead and others.

Some of the most talented programmers, designers, artists and composers that I’ve met have been quiet people. This isn’t an arbitrary personality difference though. In practice it limits their work – when they don’t speak up with their input it can cost their game, team, or company.

The Writer. Not every game genre involves a writer, but for those that do, communication becomes even more important. Similar to the designer that isn’t also helping as a programmer, a team’s writer typically isn’t directly creating much of the content or functionality, aside perhaps from actual dialog or other in-game and interstitial text. It’s not enough to write some things down and call it a day, the writer and content creators need to be in frequent communication to ensure that satisfactory compromises can be found between implementation realities and the world as ideally envisioned.

Non-Development Roles. And all that’s only thinking about the internal communications on a team during development. Learning how to communicate better with testers, players, or if you’ve got a commercial project your customers and potential new hires (even ignoring investors and finance professionals), is a whole other world of challenges that at a large enough scale get dealt with by separate HCI (Human-Computer Interaction) specialists, marketing experts, PR (public relations) people and HR (Human Resources) employees. If you’re a hobby, student, solo, or indie developer, you’ve got to wear all of these hats, too!

There are two main varieties of communication issues that we tend to encounter. Although they may seem like polar opposites, in reality they’re a lot closer than they appear. In certain circumstances one can even evolve from the other.

Challenge 1: Shyness

The first of these issues is that some of us can be a little too shy. Some of the most talented programmers, designers, artists and composers that I’ve met have been quiet people. This isn’t an arbitrary personality difference though. In practice it limits their work – when they don’t speak up with their input it can cost their game, team, or company.

It’s unfortunately very easy to rationalize shyness. After all, maybe the reason a talented, quiet person was able to develop their talent is because they’ve made an effort to stay out of what they perceive as bickering. Unfortunately this line of thinking is unproductive in helping them and the team benefit more from what they know. Conversation between team members serves a real function in the game’s development, and if it’s going to affect what gets made and how it can’t be dismissed as just banter. Sometimes work needs to get done in 3D Studio Max, and sometimes it needs to get done around a table.

Another factor I’ve found underlying shyness is that a person’s awareness of what’s great in their field can leave their self-confidence with a ding, since they can always see how much improvement their work still needs just to meet their own high expectations. Ira Glass has a great bit on this:

It doesn’t matter though where an individual stands in the whole world of people within their discipline, all that matters is that developers on the project know different things than one another. That’s inevitably always the case since everyone’s strengths, interests, and backgrounds are different.

Challenge 2: Abrasiveness

Sometimes shyness seems to evolve as an overcompensation for unsuccessful past interactions. Someone tried to speak up, to share their idea or input, just to add to someone else’s point and yet it somehow wound up in hurt feelings and no difference in results. Entering into the discussion got people riled up, one too many times, so after one last time throwing hands into the air out of frustration, a developer decides to just stop trying. Maybe they feel that their input wasn’t properly received, or even if it was it simply wasn’t worth the trouble involved.

As one of my mentors in my undergraduate years pointed out to me: “Chris, sometimes when people are fighting against your point, they’re not really disagreeing with what you said. They’re disagreeing with how you said it! If you made the same point differently they might get behind it.”

He was absolutely right. Once I heard that idea, in addition to catching myself doing it, I began to notice it everywhere from others as well. It causes tension in meetings, collaborative classroom projects, even just everyday conversations between people. Well-meaning folks with no intention of being combative, indeed in total overall agreement about both goals and general means, often wind up in counterproductive, circular scuffles arising from an escalation of unintended hostility.

There are causes and patterns of behavior that lead to this problem. After 10 years of working on it, I’ve gotten better about this, but it still happens on occasion, and it’s still something that I have to actively keep ahead of.

It’s understandable how someone could run through this pattern only so many times before feeling like their engaging with the group is the cause of the trouble. This is in turn followed by backing off, toning down their level of personal investment in the dialog, and (often bitterly) following orders from the action items that remain after others get done with the discussion.

In either case – shyness or abrasiveness – and in any role on a team, nobody gains from having one less voice of experience, skill, and genuine concern involved. Simply tuning out isn’t doing that person, their team, the game, or the players any real benefit. The issue isn’t the person or their ideas, the issue is just how the communication is performed, and just as with any other skill a person can improve how they communicate.

Failing to figure out a way to overcome these communications challenges can cause the team and developer much more trouble later, since not dealing with a few small problems early on when they’re still small can cause them to grow and erupt later beyond all proportion.

Listening and Taking to Heart

You’ve heard this all your life. You’ll no doubt hear it again. Hopefully every time communication comes up this gets mentioned too, first or prominently.

Listening well, meaning not just hearing what they have to say or giving them an outlet but trying to work with them to get at underlying meaning or concerns and adapting accordingly, is way harder than it sounds, or at least more unnatural than we’d like to think. You can benefit from practicing better listening. I can say that without knowing anything about you, because everyone – presidents and interns, parents and kids, students and teachers – can always listen better.

There’s a tendency, even though we rationally know it’s out of touch with reality, to think of oneself as the protagonist, and others like NPCs. Part of listening is consciously working to get past that. The goal isn’t to get others to adopt your ideas, but rather it’s to figure out a way forward that gains from the multiple backgrounds and perspectives available, in a positive way that people can feel good about being involved with.

Don’t Care Who Wins, Everyone Wins

There’s no winner in a conversation.

This one also probably sounds obvious, but it’s an important one that enough people run into that it isn’t pointed out nearly enough. Development discussion doesn’t need to be a debate. Even to the extent that creative tension will inevitably present certain situations in which incompatible ideas are vying for limited development attention on a schedule, debate isn’t the right way to approach the matter.

In one model for how a dialog exchange proceeds, two people with different ideas enter, and at the end of the exchange, one person won, one person lost. I don’t think (hope?) that anyone consciously thinks about dialog this way, but rather it may emerge as a default from the kinds of exchanges we hear on television from political talking heads, movie portrayals of exchanges to establish relative status between characters, or even just our instinctive fight or flight sense of turf.

Rather than thinking in terms of who the spectators (note: though avoid spectators when possible, as it can often pollute an otherwise civil exchange with defensive, ego-protecting posturing) or an impartial judge might declare a winner, consider which positions the two people involved would likely take in separate future arguments.

If all of your prior references have led you to believe strongly about a particular direction, you only do that rhetorical position (and the team/project!) a disservice by creating opponents of it. Whenever we come across as unlikeable, especially in matters like design, art, or business where a number of directions may be equally viable, then it doesn’t matter what theoretical support an option has if people associate it with a negative, hostile feeling or person.

It doesn’t matter what theoretical support an option has if people associate it with a negative, hostile feeling.

Be friendly about it. Worry first about understanding the merits and considerations of their point, then about your own perspective being understood for consideration. Notice that neither of those is about “convincing” them, or showing them the “right” way, it’s about trying to understand one another because without that the rest of discussion just amounts to barking and battling over imagined differences.

You Might Just be Wrong

Speaking of understanding one another: don’t ever be afraid to back down from a point after figuring out what’s going on, and realizing that there’s another approach that’ll work just as well or better. There’s a misplaced macho sense of identity attached to sticking to our guns over standing up for our ideas – especially when the ideas aren’t necessarily thoroughly developed and aren’t exactly noble or golden anyhow.

A smart person is open to changing their mind when new information or considerations come to light. You’re not playing on Red team competing against Blue team. You’re all on the same team, trying to get the ball to go the same direction, and maybe your teammate has a good point about which direction the actual goal is in.

The other side of this is to give the other person a way out. Presenting new information or concerns may make it easier for them to change their mind, even if that particular information or concern isn’t actually why they change their mind, simply because it can feel more appropriate to respond to new information then to appear to have been uncertain in the first place. Acknowledging the advantages in the position they’re holding doesn’t make your position seem weaker by comparison, it makes them feel listened to, acknowledged, and like there’s a better chance you’re considering not just your own initial thoughts but theirs too. When a point gets settled, whichever way things go, let the difference go instead of forming an impression of who’s with or against you. (Such feelings have a way of being self-fulfilling. In practice, reasonable people are for or against particular points that come up, not for or against people.)

When an idea inevitably gets compromised or thrown out, being a skilled communicator means not getting bitter or caught up in that. Don’t take it personally. It’s in the best interests of the team, and therefore the team’s members (yourself included), that not every idea raised makes it into implementation or remains in the final game.

Benefit of the Doubt, Assume the Best

A straw man argument is when we disagree with or attempt to disprove a simplified opposition position. In informal, heated arguments over differences in politics or religious/cultural beliefs, these are frequently found in the form of disagreeing with the most extreme, irrational, or obviously troubled members of the group, rather than dealing with the more moderate, rational, and competent justifications of their most thoughtful adherents. This leads to deadlock, since both sides feel as though they’re advancing an argument against the other, yet neither side feels as though their own points have been addressed.

When the goal is to make a more successful collaboration, rather than to just make ourselves temporarily feel good, the right thing to do is often the opposite of setting up a straw man argument. Assume that the other person is coming from a rational, informed, well-intentioned place with their position, and if that’s not what you’re seeing from what has been communicated so far, then seek to further understand. Alternatively, even help them further develop their idea by looking for additional merit to identify in it beyond what they might have originally had in mind – maybe from where you’re coming from it has possible benefits that they didn’t realize mattered to you.

If the idea you may be holding is different than what someone else is proposing, welcome your idea really being put to the test by measuring it against as well put-together an alternative as the two of you can conceive. If it gets replaced by a better proposal that you arrived at through real discussion and consideration, or working together to identify a path that seems more likely to pan out well for both of you, all the better.

Your Frustration is With Yourself

This is one of those little life lessons that I learned from my wrestling coach which has stayed with me well after I finished participating in athletic competitions. Most of the time when people are upset or frustrated or disappointed, they’re upset or frustrated or disappointed mostly with themselves, and directing that at somebody else through blame isn’t ever going to diffuse it.

Even if this isn’t 100% completely and totally true in every situation – sure, sometimes people can be very inconsiderate, selfish, or irresponsible and there may be good reason to be upset with them – I find that it’s an incredibly useful way to frame thinking about our emotional state because it takes it from being something the outside world has control over and changes to focus to what we can do about it.

Disappointed with someone violating our trust? Our disappointment may be with our failing to recognize we should not have trusted them. Upset with someone for doing something wrong? We may be upset with ourselves for not making the directions or expectations more clear. Frustrated with someone that doesn’t understand something that you find obvious? Your frustration well may lie in your feeling of present inability to coherently and productively articulate to that person exactly what it is you think they’re not understanding.

If your point isn’t well understood or received but you believe it has value that isn’t being rightly considered, rather than assuming the other person is incapable of understanding it, put the onus on yourself to make a clearer case for it. Maybe they don’t follow your reference, or could better get what you’re trying to say if you captured it in a simple visual like a diagram or flow chart. Maybe they understand what you’re saying but don’t see why you think it needs to be said, or they get what you mean but don’t see the connection you have in mind for what changes you think it should lead to.

If your point isn’t well understood or received but you believe it has value that isn’t being rightly considered, rather than assuming the other person is incapable of understanding it, put the onus on yourself to make a clearer case for it.

Clarify. Edit it down to summary highlights (people often have trouble absorbing details of an argument until they first already understand the high level). Explain it another way to triangulate. Provide a demonstration case or example. If there’s a point you already made which you think was important to understanding it but that point didn’t seem to stick, find a way to revisit it in a new way that leads with it instead of burying it among other phrases that were perhaps too disorganized at the time to properly set up or support it.

Mistaking Tentative for Definitive

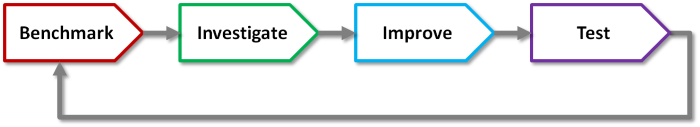

Decisions can change. When they’re in rough draft or

first-pass, they’re likely to – that’s why we do them in rough form first! It’s easier to fix and change things when they’re just a plan, an idea, or a prototype, and the more they get built out into detail or stick around such that later decisions get made based on them, then the more cemented those decisions tend to become.

There are two types of miscommunication that can come from this sort of misunderstanding: mistaking your own tentative ideas for being definitive, or mistaking someone else’s tentative ideas for being definitive. During development, and as more people get involved, projects can change and evolve a bit to reflect what’s working or what isn’t, or to take better advantage of the strengths and interests of team members.

If there was an idea you pushed for earlier in a project and people seemed onboard with it then, it’s possible that discoveries during development or compromises being made for time and resource constraints have caused it to appear in a modified or reduced form. It might even be cut entirely, if not explicitly then maybe just lost in the shuffle. Before raising a case for it, it’s worth rethinking how the project may have changed since the time the idea was initially formed, to determine whether it would need to be updated to still make sense for the direction the team and game has gone in.

Sometimes the value of ideas during development is to give us focus and direction, and whether the idea survives in its originally intended form is secondary to whether the team and players are happy with the software that results. It may turn out to be worth revisiting and bringing back up, possibly in a slightly updated form, as maybe last time was at a phase in development when it wasn’t as applicable as it might be now. Or it may be worth letting go as having been useful at the time, but perhaps not as useful now, a stepping stone to other ideas and realizations the team has made in the time that has passed.

People get optimistic, people make planning mistakes, and people cannot predict the future – but it’s important to not confuse those perfectly human imperfections with knowingly lying or failing to keep a promise.

The other side to this is to make the same mistake in thinking about someone else’s ideas: thinking they are definitive when they are necessarily tentative. This happens perhaps because of how far off the idea relates to the future, and how much will be discovered or answered between now and then that is unknown at the time of the initial conception and discussion. If a project recruits people with the intention of supporting a dozen types of cars, but during development reality sinks in and only three different vehicles make sense in favor of putting that energy into other development necessity, those things happen. People get optimistic, people make planning mistakes, and people cannot predict the future – but it’s important to not confuse those perfectly human imperfections with knowingly lying or failing to keep a promise. If early in a project someone is trying to spell out a vision for what the project may look like later, don’t take that too literally or think of it as a contract, look at it as a direction they see things headed in. Implementation realities have a way of requiring compromise along the way.

Soften That Certainty Away

A common source of fighting on teams is from a misplaced sense of certainty in an observation or statement which reflects value priorities that someone else on the team doesn’t necessarily share, or especially when the confidently made statement steamrolls value priorities of someone else on team.

Acknowledge with some humility that you only have visibility on part of all that’s going on, and that the best you can offer is a clarification of how things look for where you’re coming from or the angle you have on things. Leave wiggle room for disagreement. Little opening phrases like “As best as I can tell…” or “It looks to me like…” or “I of course can’t speak for everyone, but at least based on the games that I’ve played in this genre…” may just seem like filler, but in practice they can be the difference between tying the team in a knot or opening up valuable discussion about different viewpoints.

Consultant’s Frustration

School surrounds people with other people that think and work in similar patterns, with similar values, often of the same generation. Outside the classroom, whether collaborating on a hobby game project, joining a company, or doing basically anything else in the world besides taking Your Field 101, that isn’t typically how things work. Often if your skills are in visual art, you have to work with people that don’t know as much about visual art as you. If your skills are in design, you’ll have to work with a lot of non-designers. If you have technical talents, you will be dealing with a lot of non-technical people.

That is why you are there. Because you know things they don’t know. You can spot concerns that they can’t spot. You understand what’s necessary to do things that they don’t know how to do. If someone else on the team or company completely understood everything that you understand and in the same way, they wouldn’t really need you to be involved. Your objective in this position is to help them understand, not to think poorly of them for knowing different things than you do. Help them see what you see. Teach a little bit.

That is why you are there. Because you know things they don’t know… Your objective in this position is to help them understand, not to think poorly of them for knowing different things than you do. Help them see what you see.

I refer to this as the consultant’s frustration because that’s a case that draws particular emphasis to it: a company with no understanding of sales calls in a sales (or design, or IT, or whatever) consultant, because they have no understanding of that and that’s why they made the call. A naive, inexperienced, unprepared consultant’s reaction to these situations is one of horror and frustration – how on Earth are these people so unaware of the basic things that they need to know? The consultant is there to spot that gap and help them bridge it, not to look down on them for it. Meanwhile they’re doing plenty of things right the specialist likely doesn’t see or fully understand, because that’s not the discipline or problem type that they’re trained and experienced in being able to spot, assess, or repair.

When you see something that concerns you, share that with the team. That is part of how you add value. You may see things that others on the team do not.

Values Are Different Per Role

The other side to the above-mentioned point is appreciating that other factors and issues less visible to your own vantage point may have to be balanced against this point, or in some cases may even override it.

Frustration can arise from an exaggerated form of the consultant’s frustration: a programmer may instinctively think of other roles on the team as second-rate programmers, or the designer may perceive everyone else on the team as second-rate designers, etc. This is not a productive way to think, because it’s not just that they are less-well suited to doing your position, but you’re also less-well suited to doing theirs. A position goes beyond what skills someone brings to move a project forward, it also brings with them an identity and responsibility on the team to uphold certain aspects of the project, a trained eye to keep watch for certain kinds of issues. The programmer may not be worried about the color scheme, the artist may not be worried about how the code is organized, the designer may not care about either as long as the gameplay works.

…a programmer may instinctively think of other roles on the team as second-rate programmers…

That’s one of the benefits of having multiple people filling specialized roles, even if it’s people that are individually capable of wearing multiple hats or doing solo projects if they had to.

In the intersection of these concerns, compromises inevitably have to get made. The artist may be annoyed by a certain anomaly in how the graphics render, but the engineer may have a solid case for why that’s the best the team’s going to get out for the given style of the technology they have available. The musician or sound designer may feel that certain advanced scoring and dynamic adjustment methods could benefit the game’s sounds cape, but the gameplay and/or level designer may have complications they’re close to about user experience, stage length, or input scheme that place some tricky limits on the applicability of those approaches.

One of the reasons why producers (on very small student/hobby/indie teams this is often also either the lead programmer or lead designer) get a bad rap sometimes, as the “manager” that just doesn’t get it, is because their particular accountability is to ensure that the game makes forward progress until it’s done and released in a timely manner. So the compromise justification that they often have to counter with is, “…but we have to keep this game on schedule” which is a short-term version of “…but this game has to get done.” If someone isn’t fighting that fight for the project, it doesn’t get done.

Be glad that other people on a team, when you have the privilege of working with a good and well-balanced team, are looking out for where you have blind spots. Push yourself to be a better communicator so that you can help do the same for them.

Too Much Emphasis on Role

After that whole section on role, I feel the need to clarify that especially for small team development (i.e. I can total understand military-like hierarchy and clarity for 200+ person companies) role shouldn’t pigeonhole someone’s ability to be involved in discussions and considerations.

While it’s true that the person drawing the visual art is likely to have final say on how the art needs to be done (not only as a matter of aesthetic preference, but as a side effect of working within their own capabilities, strengths, and limitations), that does not mean that others from the team shouldn’t be able to offer some feedback or input in terms of what style they feel better about working with, what best plays to their own strengths and limitations (ex. just because an artist can generate a certain visual style doesn’t mean the programmer’s going to be able to support it in real-time), and what they like just as fellow fans of games and media.

Does one team member know more about animation than others on the project? Then for goodness sake, of course that person needs to be involved in discussions affecting the implementation or scheduling of animation. But even if you’re not an animator, that you’ve accumulated a different set of media examples to draw upon, and have an idea for how that work may intersect with technical, design, or other complications, there’s still often value in being a part of that discussion (though of course still leaving much of the decision with whoever it affects most, and whoever has the most related experience).

It’s unhelpful to hide behind your role, thinking either “Well, I’m not the artist so that isn’t my problem” or “Well, I’m the designer, so this isn’t your problem to worry about.” The quality of the game affects everyone who got involved with making it. You make a point of surrounding yourself with capable people that are coming from different backgrounds and have different points of view to offer. Find ways to make the most of that.

A related distinction to these notes about role is the concept of

servant-leadership. Rather than a producer, director, or lead designer feeling like the boss of other people who are supposed to do what they say, it can be healthy and constructive for them to approach the development as another collaborator on the team, just with particular responsibilities to look out for and different types of experience to draw from. They’re having to balance their own ideas with facilitating those of others to grow a shared vision, they’re trying to keep the team happy and on track, that’s their version of the compiler or Photoshop.

![Attached Image: boss-leader.jpg]()

Handling Critique Productively

When critique comes up – whether of your game after it’s done or of a small subpoint in a disagreement – separate yourself personally from the point discussed. When people give feedback on work you’re doing, whether it’s on your programming, art, audio, or otherwise, the feedback is about the work you’re doing, it’s not feedback about you (even if, and let’s be fair here, we could all honestly benefit from a little more feedback about ourselves as a work-in-progress, too!).

Feedback is almost always in the interest of making the work better, to point out perceived issues within a smaller setting before it’s too late to fix the work in time for affecting more people, or before getting too far into the project to easily backtrack on it. Sometimes the feedback comes too late to fix them in this case, in which case rather than disagree with it accept that’s the case and keep it in mind to improve future efforts (this isn’t the last game or idea you’re ever going to work on, right?).

Defensiveness, of the sort mentioned in

the recent playtesting entry, is often counterproductive, or at lease a waste of limited time and energy.

Systems and Regular Channels

Forms and routine one-on-one check-in meetings can feel like a bureaucratic chore, but in proper balance and moderation they can serve an important function. People need to have an outlet to have their concerns and thoughts heard. People need to be in semi-regular contact with the people who they might need to raise their concerns with, before there is a concern to be raised, so that there’s some history of trust and prior interaction to build upon in those cases and it doesn’t seem like a weirdly hostile exception just to bring up something small.

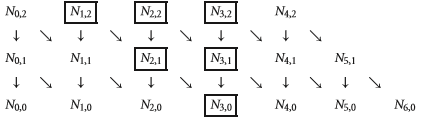

In one of the game development groups I’ve been involved with recently we were trying to narrow down possible directions for going forward from an early stage when little had been set into action yet. From just an open discussion, three of the dozen or so ideas on the whiteboard got boxed as seeming to be in the lead. When we paused to get a show of hands to see how many people were interested in each of the ideas on the board, we discovered that one of the boxed items had only a few supporters – those few just happened to be some of the more vocal people in the room. Even introducing just a tiny bit of structure can be important in giving more of an outlet to the less outspoken people involved with a project, who have ideas and considerations that are likely just as good and, as mentioned earlier, probably weighing different sets of concerns and priorities.

Practice, Make Mistakes to Learn From

Seek out opportunities to get more practice communicating. In all roles, and at all scales. As part of a crowd. In front of a crowd. In formal and informal settings. Out with a few people. Out with a lot of people.

Now, for personal context: I don’t drink. I don’t go to bars or clubs. I’ve admittedly never been one for parties. This weekend I have no plans to watch the Super Bowl. I’m not saying you should force yourself to do things that you don’t want to do. What I am saying is to look for (or create) situations where you can comfortably exercise your communication abilities. Whatever form that may take for you.

Given a choice to work alone or work with a group, welcome the opportunity to deal with the challenges of working with a group. Attend a meetup. Find some clubs to participate in. When a team you’re on needs to present an update, volunteer to be the one presenting that update.

Communication is a game development skill. As with any other game development skill, you’ll find the biggest gains in ability through continued and consistent practice.

Recommended Reading

A few books that I’ve found helpful on this subject include:

Note: This article was originally published in 2 parts (

Part 1,

Part 2) on Chris DeLeon's blog "HobbyGameDev.com". Chris kindly gives permission to reproduce his content.