Quaternions

Note:

The video below contains the playlist for all the videos in this series, which can be accessed via the playlist icon in the bottom-right corner of the embedded video frame once the video is playing. The first video in the series is loaded automatically.

Note:

This is an ongoing series of videos that will be updated every week. When a new video is posted, we will update the publishing date of this article, and the new video will be found at the end of the playlist.

Note:

This article was published on GameDev.net back in 2002. In 2008 it was revised by the original author and included in the book Business and Production: A GameDev.net Collection, which is one of 4 books collecting both popular GameDev.net articles and new original content in print format.

Note:

This article was originally published to GameDev.net back in 2002. It was revised by the original author in 2008 and published in the book Design and Content Creation: A GameDev.net Collection, which is one of 4 books collecting both popular GameDev.net articles and new original content in print format.

"A document that the designer creates which contains everything that a game should include. Sometimes referred to as a "design bible", this document should list every piece of art, sound, music, character, all the back story and plot that will be in the game. Basically, if the game is going to have it, it should be thoroughly documented in the design document so that the entire development team understands exactly what needs to be done and has a common point of reference."

"A specification for all of the programming algorithms, data, and the interfaces between the data and the algorithms."

1. Introduction

2. Requirements

3. Development Process Overview

4. Software Architecture Overview

5. ...

13. Future Considerations

1. The program must be able to run on any Windows XP/Vista based system.

2. The program must not depend on any code libraries, other than those included with the OS itself.

3. It should be controlled exclusively by the mouse. No other input device should be required. That means we can't plan on having the player use a keyboard.

4. Each of the two players can interact with the game board on screen during their turn only, using the mouse.

dmd

import std.stdio;

void main() {

writeln( "Hello D!" );

}

{

"name": "derringdo",

"description": "A framework for 2D games with SDL2.",

"homepage": "",

"copyright": "Copyright (c) 2013, Michael D. Parker",

"authors": [

"Mike Parker"

],

"dependencies": {

"derelict:sdl2": "~master",

"derelict:physfs": "~master"

},

"configurations": [

{

"name": "lib",

"targetType": "library",

"targetPath": "lib"

},

{

"name": "dev",

"targetType": "executable",

"targetPath": "bin"

}

]

}

import derelict.sdl2.sdl;

import derelict.sdl2.image;

void main() {

DerelictSDL2.load();

DerelictSDL2Image.load();

}

bool colliding (Object OB1, Object OB2){
    // Check the collision vertically
    if (OB1.bot > OB2.top) return false; /* OB1 is above OB2, far enough
                                            to guarantee they are not touching */
    if (OB2.bot > OB1.top) return false; /* OB2 is above OB1 */

    // Check the collision horizontally
    if (OB1.left > OB2.right) return false; /* OB1 is to the right of OB2 */
    if (OB2.left > OB1.right) return false; /* OB2 is to the right of OB1 */

    return true; /* neither object is entirely above or entirely to the
                    right of the other, so the bounding boxes are,
                    in fact, overlapping */
}

// All of this is pseudo-code.
// The details are really game-specific.
std::list<Object*> allObjectsList; // Contains all active objects
std::list<Object*> sectionList[4]; // Contains all objects within each section
Object sections[4];                // Bounding boxes of the four sections

void insert (Object* object, std::list<Object*>& list){
    // Insert the object into the given section list
    list.push_back(object);
}

void flush (){
    // Remove all objects from sectionList[0], [1], [2] and [3]
    for (int i = 0; i < 4; i++)
        sectionList[i].clear();
}

void sectionizeObjects (){
    flush();
    for (Object* object : allObjectsList){
        for (int i = 0; i < 4; i++)
            if (checkBBoxCollision(*object, sections[i]))
                insert(object, sectionList[i]);
    }
}

bool colliding (Object OB1, Object OB2){
    // Check the collision horizontally.
    /* Checking horizontally first makes sure most calls return false
       after one or two tests, unlike the previous version. */
    if (OB1.left > OB2.right) return false;
    if (OB2.left > OB1.right) return false;

    // Check the collision vertically
    if (OB1.bot > OB2.top) return false;
    if (OB2.bot > OB1.top) return false;

    return true;
}
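The bounding-box test above leaves the Object type undefined. As a minimal self-contained sketch, assuming a hypothetical Box struct with left, right, bot and top members and a y-axis that points up, the same four rejection tests could be written as:

```cpp
// Hypothetical axis-aligned bounding box; not a type from the article.
// Assumes y grows upward, so top >= bot.
struct Box {
    float left, right, bot, top;
};

// Returns true when the two boxes overlap or touch.
// Horizontal tests come first, mirroring the reordered version above.
inline bool colliding(const Box& a, const Box& b) {
    if (a.left > b.right) return false; // a is entirely to the right of b
    if (b.left > a.right) return false; // b is entirely to the right of a
    if (a.bot > b.top) return false;    // a is entirely above b
    if (b.bot > a.top) return false;    // b is entirely above a
    return true;
}
```

Because the comparisons are strict, boxes that share only an edge are reported as colliding; use >= instead if touching should not count.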

bool colliding (Object OB1, Object OB2){

if ( squared(OB1.x-OB2.x) + squared(OB1.y-OB2.y) < squared(OB1.Radius+OB2.Radius)) return true;

return false;

}
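For reference, here is a self-contained sketch of the bounding-circle test. The Circle struct and the squared helper are assumptions made for illustration, since the article does not show them:

```cpp
// Hypothetical bounding circle; the article's Object type is not shown.
struct Circle {
    float x, y;    // centre of the circle
    float radius;
};

// Hypothetical helper matching the squared() call used above.
inline float squared(float v) { return v * v; }

// True when the circles overlap. Comparing squared distances
// avoids taking a square root for every pair of objects.
inline bool colliding(const Circle& a, const Circle& b) {
    return squared(a.x - b.x) + squared(a.y - b.y)
         < squared(a.radius + b.radius);
}
```

With the strict comparison, two circles that only touch at a single point are not reported as colliding.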


Note:

This article was originally published to GameDev.net in 2005. It was revised by the original author in 2008 and published in the book Advanced Game Programming: A GameDev.net Collection, which is one of 4 books collecting both popular GameDev.net articles and new original content in print format.

Figure 1: An example heightmap taken from Wikipedia

Figure 2: An example 3D heightfield taken from Wikipedia

Figure 4: A series of 2D line segments

“The vertex normal in a 2D coordinate system is the average of the normals of the attached line segments.”

ComponentNormal = Σ (lineNormals) / N; where N is the number of normals

Normal.x = Σ(x-segments) / Nx;

Normal.y = 1.0

Normal.z = Σ(z-segments) / Nz;

Figure 5: An overhead view of a heightfield

Normal.x = [(A-P) + (P-B)] / 2.0

Normal.y = 1.0

Normal.z = [(C-P) + (P-D)] / 2.0
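The filter above can be sketched on the CPU as follows. This is an illustration under assumptions, not the article's implementation: the function name filterNormal and its parameters are hypothetical, heights are assumed to be stored row by row, and border vertices fall back to their single attached segment in the same way the FilterNormal shader in this article does.

```cpp
#include <array>
#include <cmath>
#include <vector>

// Hypothetical helper: returns the unit normal at grid vertex (x, z).
// Heights are stored row by row, so index = z * width + x.
std::array<float, 3> filterNormal(const std::vector<float>& heights,
                                  int width, int depth, int x, int z) {
    auto height = [&](int px, int pz) { return heights[pz * width + px]; };

    // Neighbour coordinates, clamped so border vertices use one segment only.
    int xl = (x > 0) ? x - 1 : x;
    int xr = (x < width - 1) ? x + 1 : x;
    int zl = (z > 0) ? z - 1 : z;
    int zr = (z < depth - 1) ? z + 1 : z;

    // (A - P) + (P - B) collapses to A - B: the two outer heights.
    float nx = height(xl, z) - height(xr, z);
    float nz = height(x, zl) - height(x, zr);

    // Interior vertices have two attached segments per axis: average them.
    if (xl != x && xr != x) nx *= 0.5f;
    if (zl != z && zr != z) nz *= 0.5f;

    // The y component is fixed at 1.0 before normalization.
    float len = std::sqrt(nx * nx + 1.0f + nz * nz);
    return {nx / len, 1.0f / len, nz / len};
}
```

On a flat heightfield the result is (0, 1, 0); on a ramp that rises by one unit per vertex along x, the interior normal tilts back to roughly (-0.707, 0.707, 0).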

struct FilterVertex // 8 Bytes per Vertex

{

float x, z;

};

index = z * numVertsWide + x

ID3D10Buffer* m_pHeightBuffer;

ID3D10ShaderResourceView* m_pHeightBufferRV;

ID3D10EffectShaderResourceVariable* m_pHeightsRV;

void Heightfield::CreateShaderResources(int numSurfaces)

{

// Create the non-streamed Shader Resources

D3D10_BUFFER_DESC desc;

D3D10_SHADER_RESOURCE_VIEW_DESC SRVDesc;

// Create the height buffer for the filter method

ZeroMemory(&desc, sizeof(D3D10_BUFFER_DESC));

ZeroMemory(&SRVDesc, sizeof(SRVDesc));

desc.ByteWidth = m_NumVertsDeep * m_NumVertsWide * sizeof(float);

desc.Usage = D3D10_USAGE_DYNAMIC;

desc.BindFlags = D3D10_BIND_SHADER_RESOURCE;

desc.CPUAccessFlags = D3D10_CPU_ACCESS_WRITE;

SRVDesc.Format = DXGI_FORMAT_R32_FLOAT;

SRVDesc.ViewDimension = D3D10_SRV_DIMENSION_BUFFER;

SRVDesc.Buffer.ElementWidth = m_NumVertsDeep * m_NumVertsWide;

m_pDevice->CreateBuffer(&desc, NULL, &m_pHeightBuffer);

m_pDevice->CreateShaderResourceView(m_pHeightBuffer, &SRVDesc,

&m_pHeightBufferRV);

}

void Heightfield::Draw()

{

// Init some locals

int numRows = m_NumVertsDeep - 1;

int numIndices = 2 * m_NumVertsWide;

UINT offset = 0;

UINT stride = sizeof(FilterVertex);

m_pNumVertsDeep->SetInt(m_NumVertsDeep);

m_pNumVertsWide->SetInt(m_NumVertsWide);

m_pMetersPerVertex->SetFloat(m_MetersPerVertex);

m_pDevice->IASetPrimitiveTopology(D3D10_PRIMITIVE_TOPOLOGY_TRIANGLESTRIP);

m_pDevice->IASetIndexBuffer(m_pIndexBuffer, DXGI_FORMAT_R32_UINT, 0);

m_pDevice->IASetInputLayout(m_pHeightfieldIL);

m_pDevice->IASetVertexBuffers(0, 1, &m_pHeightfieldVB, &stride, &offset);

m_pHeightsRV->SetResource(m_pHeightBufferRV);

m_pFilterSimpleTech->GetPassByIndex(0)->Apply(0);

for (int j = 0; j < numRows; j++)

m_pDevice->DrawIndexed( numIndices, j * numIndices, 0 );

}

float Height(int index)

{

return g_Heights.Load(int2(index, 0));

}

float3 FilterNormal( float2 pos, int index )

{

float3 normal = float3(0, 1, 0);

if(pos.y == 0)

normal.z = Height(index) - Height(index + g_NumVertsWide);

else if(pos.y == g_NumVertsDeep - 1)

normal.z = Height(index - g_NumVertsWide) - Height(index);

else

normal.z = ((Height(index) - Height(index + g_NumVertsWide)) +

(Height(index - g_NumVertsWide) - Height(index))) * 0.5;

if(pos.x == 0)

normal.x = Height(index) - Height(index + 1);

else if(pos.x == g_NumVertsWide - 1)

normal.x = Height(index - 1) - Height(index);

else

normal.x = ((Height(index) - Height(index + 1)) +

(Height(index - 1) - Height(index))) * 0.5;

return normalize(normal);

}

VS_OUTPUT FilterHeightfieldVS( float2 vPos : POSITION )

{

VS_OUTPUT Output = (VS_OUTPUT)0;

float4 position = 1.0f;

position.xz = vPos * g_MetersPerVertex;

// Pull the height from the buffer

int index = (vPos.y * g_NumVertsWide) + vPos.x;

position.y = g_Heights.Load(int2(index, 0)) * g_MetersPerVertex;

Output.Position = mul(position, g_ViewProjectionMatrix);

// Compute the normal using a filter kernel

float3 vNormalWorldSpace = FilterNormal(vPos, index);

// Compute simple directional lighting equation

float3 vTotalLightDiffuse = g_LightDiffuse *

max(0,dot(vNormalWorldSpace, g_LightDir));

Output.Diffuse.rgb = g_MaterialDiffuseColor * vTotalLightDiffuse;

Output.Diffuse.a = 1.0f;

return Output;

}

Surface Normal: Ns = A × B

Vertex Normal: N = Norm( Σ (Nsi) )
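The two formulas above can be sketched on the CPU as follows. The Vec3 and Triangle types and the function names are assumptions made for this illustration; A and B are taken to be the two edges leaving the first vertex of each triangle, so the winding order decides which way the normal points.

```cpp
#include <cmath>
#include <vector>

// Hypothetical minimal vector type for this sketch.
struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }

Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y,
            a.z * b.x - a.x * b.z,
            a.x * b.y - a.y * b.x};
}

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    if (len == 0.0f) return v; // leave degenerate vectors untouched
    return {v.x / len, v.y / len, v.z / len};
}

struct Triangle { Vec3 p0, p1, p2; };

// Surface normal: Ns = A x B, with A and B the edges leaving p0.
Vec3 surfaceNormal(const Triangle& t) {
    Vec3 a = t.p1 - t.p0;
    Vec3 b = t.p2 - t.p0;
    return normalize(cross(a, b));
}

// Vertex normal: N = Norm(sum of the normals of all adjacent surfaces).
Vec3 vertexNormal(const std::vector<Triangle>& adjacent) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    for (const Triangle& t : adjacent) sum = sum + surfaceNormal(t);
    return normalize(sum);
}
```

For a vertex shared by one triangle facing +y and one facing +x, the resulting vertex normal points halfway between the two, at roughly (0.707, 0.707, 0).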

Figure 6: A simple grid-based mesh with triangles

struct MeshVertex

{

D3DXVECTOR3 pos;

unsigned i;

};

ID3D10Buffer* m_pNormalBufferSO;

ID3D10ShaderResourceView* m_pNormalBufferRVSO;

ID3D10EffectShaderResourceVariable* m_pSurfaceNormalsRV;

void Heightfield::CreateShaderResources( int numSurfaces )

{

// Create the non-streamed Shader Resources

D3D10_BUFFER_DESC desc;

D3D10_SHADER_RESOURCE_VIEW_DESC SRVDesc;

// Create output normal buffer for the Stream Output

ZeroMemory(&desc, sizeof(D3D10_BUFFER_DESC));

ZeroMemory(&SRVDesc, sizeof(SRVDesc));

desc.ByteWidth = numSurfaces * sizeof(D3DXVECTOR4);

desc.Usage = D3D10_USAGE_DEFAULT;

desc.BindFlags = D3D10_BIND_SHADER_RESOURCE | D3D10_BIND_STREAM_OUTPUT;

SRVDesc.Format = DXGI_FORMAT_R32G32B32A32_FLOAT;

SRVDesc.ViewDimension = D3D10_SRV_DIMENSION_BUFFER;

SRVDesc.Buffer.ElementWidth = numSurfaces;

m_pDevice->CreateBuffer(&desc, NULL, &m_pNormalBufferSO);

m_pDevice->CreateShaderResourceView(m_pNormalBufferSO, &SRVDesc,

&m_pNormalBufferRVSO);

}

void Heightfield::Draw()

{

int numRows = m_NumVertsDeep - 1;

int numIndices = 2 * m_NumVertsWide;

m_pNumVertsDeep->SetInt(m_NumVertsDeep);

m_pNumVertsWide->SetInt(m_NumVertsWide);

m_pMetersPerVertex->SetFloat(m_MetersPerVertex);

m_pDevice->IASetPrimitiveTopology (D3D10_PRIMITIVE_TOPOLOGY_TRIANGLESTRIP);

m_pDevice->IASetIndexBuffer(m_pIndexBuffer, DXGI_FORMAT_R32_UINT,0);

UINT offset = 0;

UINT stride = sizeof(MeshVertex);

m_pDevice->IASetInputLayout(m_pMeshIL);

m_pDevice->IASetVertexBuffers(0, 1, &m_pMeshVB, &stride, &offset);

ID3D10ShaderResourceView* pViews[] = {NULL};

m_pDevice->VSSetShaderResources(0, 1, pViews);

m_pDevice->SOSetTargets(1, &m_pNormalBufferSO, &offset);

m_pSurfaceNormalsRV->SetResource(m_pNormalBufferRVSO);

D3D10_TECHNIQUE_DESC desc;

m_pMeshWithNormalMapSOTech->GetDesc(&desc);

for(unsigned i = 0; i < desc.Passes; i++)

{

m_pMeshWithNormalMapSOTech->GetPassByIndex(i)->Apply(0);

for (int j = 0; j < numRows; j++)

m_pDevice->DrawIndexed(numIndices, j * numIndices, 0 );

m_pDevice->SOSetTargets(0, NULL, &offset);

}

}

GeometryShader gsNormalBuffer = ConstructGSWithSO( CompileShader( gs_4_0,

SurfaceNormalGS() ), "POSITION.xyzw" );

technique10 MeshWithNormalMapSOTech

{

pass P0

{

SetVertexShader( CompileShader( vs_4_0, PassThroughVS() ) );

SetGeometryShader( gsNormalBuffer );

SetPixelShader( NULL );

}

pass P1

{

SetVertexShader( CompileShader( vs_4_0, RenderNormalMapScene() ) );

SetGeometryShader( NULL );

SetPixelShader( CompileShader( ps_4_0, RenderScenePS() ) );

SetDepthStencilState( EnableDepth, 0 );

}

}

[maxvertexcount(1)]

void SurfaceNormalGS( triangle GS_INPUT input[3], inout PointStream<GS_INPUT> PStream )

{

GS_INPUT Output = (GS_INPUT)0;

float3 edge1 = input[1].Position - input[0].Position;

float3 edge2 = input[2].Position - input[0].Position;

Output.Position.xyz = normalize( cross( edge2, edge1 ) );

PStream.Append(Output);

}

float3 ComputeNormal(uint index)

{

float3 normal = 0.0;

int topVertex = g_NumVertsDeep - 1;

int rightVertex = g_NumVertsWide - 1;

int normalsPerRow = rightVertex * 2;

int numRows = topVertex;

int top = normalsPerRow * (numRows - 1);

int x = index % g_NumVertsWide;

int z = index / g_NumVertsWide;

// Bottom

if(z == 0)

{

if(x == 0)

{

float3 normal0 = g_SurfaceNormals.Load(int2( 0, 0 ));

float3 normal1 = g_SurfaceNormals.Load(int2( 1, 0 ));

normal = normal0 + normal1;

}

else if(x == rightVertex)

{

index = (normalsPerRow - 1);

normal = g_SurfaceNormals.Load(int2( index, 0 ));

}

else

{

index = (2 * x);

normal = g_SurfaceNormals.Load(int2( index-1, 0 )) +

g_SurfaceNormals.Load(int2( index, 0 )) +

g_SurfaceNormals.Load(int2( index+1, 0 ));

}

}

// Top

else if(z == topVertex)

{

if(x == 0)

{

normal = g_SurfaceNormals.Load(int2( top, 0 ));

}

else if(x == rightVertex)

{

index = (normalsPerRow * numRows) - 1;

normal = g_SurfaceNormals.Load(int2( index, 0 )) +

g_SurfaceNormals.Load(int2( index-1, 0 ));

}

else

{

index = top + (2 * x);

normal = g_SurfaceNormals.Load(int2( index-2, 0)) +

g_SurfaceNormals.Load(int2( index, 0)) +

g_SurfaceNormals.Load(int2( index-1, 0));

}

}

// Middle

else

{

if(x == 0)

{

int index1 = z * normalsPerRow;

int index2 = index1 - normalsPerRow;

normal = g_SurfaceNormals.Load(int2( index1, 0 )) +

g_SurfaceNormals.Load(int2( index1+1, 0 )) +

g_SurfaceNormals.Load(int2( index2, 0 ));

}

else if(x == rightVertex)

{

int index1 = (z + 1) * normalsPerRow - 1;

int index2 = index1 - normalsPerRow;

normal = g_SurfaceNormals.Load(int2( index1, 0 )) +

g_SurfaceNormals.Load(int2( index2, 0 )) +

g_SurfaceNormals.Load(int2( index2-1, 0 ));

}

else

{

int index1 = (z * normalsPerRow) + (2 * x);

int index2 = index1 - normalsPerRow;

normal = g_SurfaceNormals.Load(int2( index1-1, 0 )) +

g_SurfaceNormals.Load(int2( index1, 0 )) +

g_SurfaceNormals.Load(int2( index1+1, 0 )) +

g_SurfaceNormals.Load(int2( index2-2, 0 )) +

g_SurfaceNormals.Load(int2( index2-1, 0 )) +

g_SurfaceNormals.Load(int2( index2, 0 ));

}

}

return normal;

}

Figure 7: A screenshot of the demo program for this article


public class Student

{

}

Student objectStudent = new Student();

This is an art; each designer uses different techniques to identify classes. However, according to Object Oriented Design Principles, there are five principles that you must follow when designing a class.

For more information on design principles, please refer to Object Mentor.

Additionally, to identify a class correctly, you need to identify the full list of leaf-level functions or operations of the system (the granular-level use cases of the system). You can then group the functions to form classes (each class groups functions or operations of the same type). A well-defined class must be a meaningful grouping of a set of functions and should support reusability while increasing the expandability and maintainability of the overall system.

In the software world, the concept of dividing and conquering is always recommended. If you start by analyzing the full system, you will find it harder to manage. The better approach is to identify the modules of the system first and then dig deep into each module separately to seek out classes.

A software system may consist of many classes, and whenever you have many of anything, it needs to be managed. Think of a big organization with a workforce exceeding several thousand employees (let’s take one employee as one class). In order to manage such a workforce, you need to have proper management policies in place. The same technique can be applied to manage the classes of your software system. In order to manage the classes of a software system and to reduce its complexity, system designers use several techniques, which can be grouped under four main concepts: Encapsulation, Abstraction, Inheritance, and Polymorphism. These are the four main gods of the OOP world, and in software terms they are called the four main Object Oriented Programming (OOP) concepts.

There are several other ways that encapsulation can be used; as an example, we can take the usage of an interface. The interface can be used to hide the information of an implemented class.

IStudent myStudent = new LocalStudent();

IStudent myStudent = new ForeignStudent();

public class StudentRegistrar

{

public StudentRegistrar()

{

new RecordManager().Initialize();

}

}

public class University

{

private Chancellor universityChancellor = new Chancellor();

}

In the same way, as another example, you can say that there is a composite relationship between a KeyValuePairCollection and a KeyValuePair. The two mutually depend on each other.

.NET and Java use the composite relationship to define their collections. I have seen composition being used in many other ways too. However, the more important factor, which most people forget, is the lifetime factor. The lifetimes of two classes bound by a composite relationship mutually depend on each other. Take the .NET Collection to understand this: the collection element is defined inside the collection (it is an inner part, hence the collection is said to be composed of it), forcing the element to be disposed of with the collection. If instead you define the collection and its element to be independent, the relationship is more of an aggregation than a composition. So the point is, if you want to bind two classes with a composite relationship, the more accurate way is to define one inside the other class (making it a protected or private class). This way you allow the outer class to fulfill its purpose while tying the lifetime of the inner class to the outer class.

So in summary, we can say that aggregation is a special kind of an association and composition is a special kind of an aggregation. (Association->Aggregation->Composition)
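The lifetime difference between composition and aggregation can be sketched in C++, where ownership is explicit. This is only an illustration under assumptions: University and Chancellor follow the earlier example, while Professor, Department and the instance counter are hypothetical names introduced here.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Counts live instances so the lifetime coupling can be observed.
struct Chancellor {
    static int alive;
    Chancellor() { ++alive; }
    Chancellor(const Chancellor&) { ++alive; }
    ~Chancellor() { --alive; }
};
int Chancellor::alive = 0;

// Composition: the University owns its Chancellor as a member,
// so the Chancellor is destroyed together with the University.
class University {
private:
    Chancellor universityChancellor;
};

// Hypothetical type that lives independently of any Department.
struct Professor {
    std::string name;
};

// Aggregation: the Department only refers to Professors it does not own.
class Department {
public:
    void add(Professor* professor) { staff.push_back(professor); }
    std::size_t size() const { return staff.size(); }
private:
    std::vector<Professor*> staff; // non-owning pointers
};
```

Destroying a University also destroys its Chancellor, whereas destroying a Department leaves every Professor it referenced intact.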

While abstraction reduces complexity by hiding irrelevant detail, generalization reduces complexity by replacing multiple entities which perform similar functions with a single construct. Generalization is the broadening of application to encompass a larger domain of objects of the same or different type. Programming languages provide generalization through variables, parameterization, generics and polymorphism. It places the emphasis on the similarities between objects. Thus, it helps to manage complexity by collecting individuals into groups and providing a representative which can be used to specify any individual of the group.

Abstraction and generalization are often used together. Abstracts are generalized through parameterization to provide greater utility. In parameterization, one or more parts of an entity are replaced with a name which is new to the entity. The name is used as a parameter. When the parameterized abstract is invoked, it is invoked with a binding of the parameter to an argument.

Abstract classes are ideal when implementing frameworks. As an example, let’s study the abstract class named LoggerBase below. Please carefully read the comments as it will help you to understand the reasoning behind this code.

public abstract class LoggerBase

{

/// <summary>

/// field is private, so it intend to use inside the class only

/// </summary>

private log4net.ILog logger = null;

/// <summary>

/// protected, so it only visible for inherited class

/// </summary>

protected LoggerBase()

{

// The private object is created inside the constructor

logger = log4net.LogManager.GetLogger(this.LogPrefix);

// The additional initialization is done immediately after

log4net.Config.DOMConfigurator.Configure();

}

/// <summary>

/// When you define the property as abstract,

/// it forces the inherited class to override the LogPrefix

/// So, with the help of this technique the log can be made,

/// inside the abstract class itself, irrespective of it origin.

/// If you study carefully you will find a reason for not having a "set" method here.

/// </summary>

protected abstract System.Type LogPrefix

{

get;

}

/// <summary>

/// Simple log method,

/// which is only visible for inherited classes

/// </summary>

/// <param name="message"></param>

protected void LogError(string message)

{

if (this.logger.IsErrorEnabled)

{

this.logger.Error(message);

}

}

/// <summary>

/// Public properties which exposes to inherited class

/// and all other classes that have access to inherited class

/// </summary>

public bool IsThisLogError

{

get

{

return this.logger.IsErrorEnabled;

}

}

}

Let’s try to understand each line of the above code.

Like any other class, an abstract class can contain fields, so I used a private field named logger to hold the ILog interface of the well-known log4net library. This allows the LoggerBase class to control what is used for logging and hence makes it easy to change the underlying logger library.

The access modifier of the LoggerBase constructor is protected. A public constructor has no use when the class is abstract, because abstract classes cannot be instantiated. So I went for the protected constructor.

The abstract property named LogPrefix is an important one. It enforces and guarantees that every subclass provides a value for LogPrefix (LogPrefix is used to obtain the details of the source class in which the exception occurred) before it invokes a method to log an error.

The method named LogError is protected, hence exposed to all subclasses. You should not make it public, as a class that does not inherit from LoggerBase cannot use it meaningfully.

Let’s find out why the property named IsThisLogError is public. It may be important or useful for other classes associated with an inherited class to know whether the associated member logs its errors or not.

Apart from these you can also have virtual methods defined in an abstract class. The virtual method may have its default implementation, where a subclass can override it when required.

All in all, the important point here is that all OOP concepts should be used carefully and with reasons; you should be able to logically explain why you make a property public, a field private, or a class abstract. Additionally, when architecting frameworks, the OOP concepts can be used to forcefully guide the system to be developed in the way the framework’s architect wanted it to be architected initially.

An interface can be used to define a generic template, and then one or more abstract classes can define partial implementations of the interface. Interfaces just specify the method declarations (implicitly public and abstract) and can contain properties (which are also implicitly public and abstract). An interface definition begins with the keyword interface. An interface, like an abstract class, cannot be instantiated.

If a class that implements an interface does not define all the methods of the interface, then it must be declared abstract, and the method definitions must be provided by the subclass that extends the abstract class. In addition to this, an interface can inherit other interfaces.

The sample below will provide an interface for our LoggerBase abstract class.

public interface ILogger

{

bool IsThisLogError { get; }

}

If MyLogger is a class that implements ILogger, then we can write

ILogger log = new MyLogger();

There is quite a big difference between an interface and an abstract class, even though both look similar.

Abstract classes let you define some behaviors; they force your subclasses to provide others. For example, if you have an application framework, an abstract class can be used to provide the default implementation of the services and all mandatory modules, such as event logging and message handling. This approach allows the developers to develop the application within the guided help provided by the framework.

However, in practice you will come across some application-specific functionality that only your application can perform, such as startup and shutdown tasks. The abstract base class can declare virtual shutdown and startup methods. The base class knows that it needs those methods, but an abstract class lets your class admit that it doesn't know how to perform those actions; it only knows that it must initiate the actions. When it is time to start up, the abstract class can call the startup method. When the base class calls this method, it executes the method defined by the child class.
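The startup and shutdown arrangement described above can be sketched in C++ as follows. All names here (ApplicationBase, GameApplication, run, log) are hypothetical, and the sketch uses pure virtual methods, which is one possible reading of "declare virtual shutdown and startup methods".

```cpp
#include <string>
#include <vector>

// Hypothetical framework base class: it knows when startup and shutdown
// must happen, but not how a concrete application performs them.
class ApplicationBase {
public:
    virtual ~ApplicationBase() = default;

    // The base class owns the sequence and initiates each action.
    void run() {
        log("framework: starting");
        startup();   // dispatches to the subclass implementation
        log("framework: stopping");
        shutdown();  // dispatches to the subclass implementation
    }

    const std::vector<std::string>& events() const { return events_; }

protected:
    void log(const std::string& message) { events_.push_back(message); }

    // Subclasses are forced to provide these.
    virtual void startup() = 0;
    virtual void shutdown() = 0;

private:
    std::vector<std::string> events_;
};

// Hypothetical application built on top of the framework.
class GameApplication : public ApplicationBase {
protected:
    void startup() override { log("game: load assets"); }
    void shutdown() override { log("game: save settings"); }
};
```

Calling run() on a GameApplication records the framework's own messages interleaved with the subclass's startup and shutdown work, in the order the base class dictates.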

As mentioned before, .NET supports multiple implementations. The concept of implicit and explicit implementation provides a safe way to implement methods of multiple interfaces by hiding, exposing, or preserving the identity of each interface method, even when the method signatures are the same.

Let's consider the interfaces defined below.

interface IDisposable

{

void Dispose();

}

Here you can see that the class Student has implicitly and explicitly implemented the method named Dispose() via Dispose and IDisposable.Dispose.

class Student : IDisposable

{

public void Dispose()

{

Console.WriteLine("Student.Dispose");

}

void IDisposable.Dispose()

{

Console.WriteLine("IDisposable.Dispose");

}

}

The ability to create a new class from an existing class by extending it is called inheritance.

public class Exception

{

}

public class IOException : Exception

{

}

According to the above example the new class (IOException), which is called the derived class or subclass, inherits the members of an existing class (Exception), which is called the base class or super-class. The class IOException can extend the functionality of the class Exception by adding new types and methods and by overriding existing ones.

Just as abstraction is closely related to generalization, inheritance is closely related to specialization. It is important to discuss those two concepts together with generalization to better understand them and to reduce complexity.

One of the most important relationships among objects in the real world is specialization, which can be described as the “is-a” relationship. When we say that a dog is a mammal, we mean that the dog is a specialized kind of mammal. It has all the characteristics of any mammal (it bears live young, nurses with milk, has hair), but it specializes these characteristics to the familiar characteristics of canis domesticus. A cat is also a mammal. As such, we expect it to share certain characteristics with the dog that are generalized in Mammal, but to differ in those characteristics that are specialized in cats.

The specialization and generalization relationships are both reciprocal and hierarchical. Specialization is just the other side of the generalization coin: Mammal generalizes what is common between dogs and cats, and dogs and cats specialize mammals to their own specific subtypes.

Similarly, as an example, you can say that both IOException and SecurityException are of type Exception. They have all the characteristics and behaviors of an Exception. That means the IOException is a specialized kind of Exception. A SecurityException is also an Exception. As such, we expect it to share certain characteristics with IOException that are generalized in Exception, but to differ in those characteristics that are specialized in SecurityException. In other words, Exception generalizes the shared characteristics of both IOException and SecurityException, while IOException and SecurityException specialize them with their own characteristics and behaviors.

In OOP, the specialization relationship is implemented using the principle called inheritance. This is the most common, most natural, and most widely accepted way of implementing this relationship.

At times, I used to think that understanding Object Oriented Programming concepts was made difficult because they are grouped under four main concepts while each concept is closely related to the others. Hence one has to be extremely careful to understand each concept separately, while also understanding how each relates to the other concepts.

In OOP, polymorphism is achieved by using several different techniques: method overloading, operator overloading, and method overriding.

public class MyLogger

{

public void LogError(Exception e)

{

// Implementation goes here

}

public bool LogError(Exception e, string message)

{

// Implementation goes here

}

}

public class Complex

{

private int real;

public int Real

{ get { return real; } }

private int imaginary;

public int Imaginary

{ get { return imaginary; } }

public Complex(int real, int imaginary)

{

this.real = real;

this.imaginary = imaginary;

}

public static Complex operator +(Complex c1, Complex c2)

{

return new Complex(c1.Real + c2.Real, c1.Imaginary + c2.Imaginary);

}

}

A subclass can give its own definition of a method, but it needs to have the same signature as the method in its super-class. This means that when overriding a method, the subclass's method has to have the same name and parameter list as the super-class's overridden method.

using System;

public class Complex

{

private int real;

public int Real

{ get { return real; } }

private int imaginary;

public int Imaginary

{ get { return imaginary; } }

public Complex(int real, int imaginary)

{

this.real = real;

this.imaginary = imaginary;

}

public static Complex operator +(Complex c1, Complex c2)

{

return new Complex(c1.Real + c2.Real, c1.Imaginary + c2.Imaginary);

}

public override string ToString()

{

return (String.Format("{0} + {1}i", real, imaginary));

}

}

Complex num1 = new Complex(5, 7);

Complex num2 = new Complex(3, 8);

// Add two Complex numbers using the

// overloaded plus operator

Complex sum = num1 + num2;

// Print the numbers and the sum

// using the overridden ToString method

Console.WriteLine("({0}) + ({1}) = {2}", num1, num2, sum);

Console.ReadLine();

A use case is a thing an actor perceives from the system. A use case maps actors with functions. Importantly, the actors need not be people. As an example a system can perform the role of an actor, when it communicate with another system.

A class diagrams are widely used to describe the types of objects in a system and their relationships. Class diagrams model class structure and contents using design elements such as classes, packages and objects. Class diagrams describe three different perspectives when designing a system, conceptual, specification, and implementation. These perspectives become evident as the diagram is created and help solidify the design.

The Class diagrams, physical data models, along with the system overview diagram are in my opinion the most important diagrams that suite the current day rapid application development requirements.

According to the modern days use of two-tier architecture the user interfaces (or with ASP.NET, all web pages) runs on the client and the database is stored on the server. The actual application logic can run on either the client or the server. So in this case the user interfaces are directly access the database. Those can also be non-interface processing engines, which provide solutions to other remote/ local systems. In either case, today the two-tier model is not as reputed as the three-tier model. The advantage of the two-tier design is its simplicity, but the simplicity comes with the cost of scalability. The newer three-tier architecture, which is more famous, introduces a middle tier for the application logic.

Three-tier is a client-server architecture in which the user interface, functional process logic, data storage and data access are developed and maintained as independent modules, some time on separate platforms. The term "three-tier" or "three-layer", as well as the concept of multi-tier architectures (often refers to as three-tier architecture), seems to have originated within Rational Software.

Unfortunately, the popularity of this pattern has resulted in a number of faulty usages; each technology (Java, ASP.NET, etc.) has defined it in its own way, making it difficult to understand. In particular, the term "controller" has been used to mean different things in different contexts. The definitions given below are the closest ones I could find for the ASP.NET version of MVC.

.NET technology introduces SOA by means of web services.

SOA can be used as the concept for connecting multiple systems to provide services. It has a great share in the future of the IT world.

In the imaginary diagram above, we can see how Service Oriented Architecture is used to provide a set of centralized services to the citizens of a country. Each citizen is given a unique identity card that carries all of that citizen's personal information. Each service center, such as a shopping complex, hospital, station, or factory, is equipped with a computer system connected to a central server, which is responsible for providing services to a city. For example, when a customer enters the shopping complex, the regional computer system reports it to the central server and obtains information about the customer before granting access to the premises. The system welcomes the customer. When the customer has finished shopping and is about to leave the shopping complex, he is asked to go through a billing process, which the regional computer system manages. The payment is handled automatically using the details obtained from the customer's identity card.

The regional system will report to the city (computer system of the city) while the city will report to the country (computer system of the country).

The data access layer needs to be as generic, simple, quick and efficient as possible. It should not include complex application or business logic.

I have seen systems with lengthy, complex stored procedures (SPs), which run through several cases before doing a simple retrieval. They contain not only most of the business logic, but application logic and user interface logic as well. If an SP is getting longer and more complicated, it is a good indication that you are burying your business logic inside the data access layer.

As general advice, when you define business entities you must decide how to map the data in your tables to correctly defined business entities. The business entities should be defined meaningfully, considering the various types of requirements and the functioning of your system. It is recommended to identify business entities that encapsulate the functional and UI (User Interface) requirements of your application, rather than define a separate business entity for each table of your database. For example, if you want to combine data from a couple of tables to build a UI control (Web Control), implement that function in the Business Logic Layer with a business object that uses a couple of data objects to support your complex business requirement.
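The advice above can be sketched as follows. This is only an illustration under assumed names: `CustomerRow`, `OrderRow`, `CustomerSummary` and `buildCustomerSummary` are hypothetical and do not come from any real system. The point is that the business entity is shaped for what the UI control needs, while the data objects stay close to the tables.

```cpp
#include <cassert>
#include <string>

// Hypothetical data objects, one per database table (names are illustrative).
struct CustomerRow {
    int id;
    std::string name;
};

struct OrderRow {
    int customerId;
    double total;
};

// Hypothetical business entity shaped for a UI control, not for a table.
struct CustomerSummary {
    std::string displayName;
    double orderTotal;
};

// Business Logic Layer function: combines two data objects into one
// business entity. It contains no SQL and no UI code.
inline CustomerSummary buildCustomerSummary(const CustomerRow& customer,
                                            const OrderRow& order) {
    assert(customer.id == order.customerId);  // the rows must belong together
    return CustomerSummary{customer.name, order.total};
}
```

A caller in the UI layer would only ever see `CustomerSummary`, so the table layout can change without touching the control.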

The two design patterns are fundamentally different. However, when you learn them for the first time, you will see a confusing similarity, which makes them harder to understand. If that confusion persists, you may eventually become afraid of design patterns altogether. It is like a childhood phobia: once you become afraid at an early age, it stays with you forever. The result would be that you never look at design patterns again. Let me see whether I can solve this brain teaser for you.

The image below shows both design patterns. I compare the two one-to-one to identify the similarities. If you observe the figure carefully, you will see an easily understandable color pattern (the same color is used to mark classes of a similar kind).

Please follow up with the numbers in the image when reading the listing below.

Mark #1: Both patterns use a generic class as the entry class. The only difference is the name of the class. One pattern names it “Client”, while the other names it “Director”.

Mark #2: Here again the difference is the class name. It is “AbstractFactory” for one and “Builder” for the other. Additionally, both classes are abstract.

Mark #3: Once again both patterns define two generic classes (WindowsFactory and ConcreteBuilder). Both are created by inheriting from their respective abstract class.

Mark #4: Finally, both seem to produce some kind of generic output.

Now, where are we? Don’t they look almost identical? So why do we have two different patterns here?

Let’s compare the two again side by side for one last time, but this time, focusing on the differences.

Sometimes creational patterns are complementary, so you can combine one or more patterns when you design your system. For example, Builder can use one of the other patterns to implement which components get built, and Abstract Factory, Builder, and Prototype can use Singleton in their implementations. The conclusion is that the two design patterns exist to solve two different types of business problem, so even though they look similar, they are not.

I hope this sheds some light on the puzzle. If you still don't understand it, then this time it is not you; it has to be me, because I don't know how to explain it.
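To make the contrast concrete, here is a minimal C++ sketch of both patterns side by side. The class names `AbstractFactory`, `WindowsFactory`, `Builder`, `ConcreteBuilder` and `Director` follow the marks in the comparison above; everything else (`Button`, `WindowsButton`, `buildWalls`, `buildRoof`, `demoCreationalPatterns`) is hypothetical and chosen only for illustration. In the usual reading of the two patterns, an Abstract Factory hands back a finished product from a family in one call, while a Builder is driven through several steps by the Director and the product is fetched at the end.

```cpp
#include <cassert>
#include <memory>
#include <string>

// Abstract Factory: one call returns a finished product.
struct Button {
    virtual ~Button() = default;
    virtual std::string describe() const = 0;
};

struct WindowsButton : Button {
    std::string describe() const override { return "Windows button"; }
};

struct AbstractFactory {
    virtual ~AbstractFactory() = default;
    virtual std::unique_ptr<Button> createButton() const = 0;
};

struct WindowsFactory : AbstractFactory {
    std::unique_ptr<Button> createButton() const override {
        return std::unique_ptr<Button>(new WindowsButton());
    }
};

// Builder: the Director drives several steps, then asks for the result.
struct Builder {
    virtual ~Builder() = default;
    virtual void buildWalls() = 0;
    virtual void buildRoof() = 0;
    virtual std::string result() const = 0;
};

struct ConcreteBuilder : Builder {
    void buildWalls() override { house_ += "walls;"; }
    void buildRoof() override { house_ += "roof;"; }
    std::string result() const override { return house_; }

private:
    std::string house_;  // the product, assembled piece by piece
};

struct Director {
    std::string construct(Builder& builder) const {
        builder.buildWalls();
        builder.buildRoof();
        return builder.result();
    }
};

// Small self-check that exercises both patterns.
inline void demoCreationalPatterns() {
    WindowsFactory factory;
    assert(factory.createButton()->describe() == "Windows button");

    ConcreteBuilder builder;
    assert(Director().construct(builder) == "walls;roof;");
}
```

The client of the factory never sees construction steps, whereas the Director owns the order of the steps and the builder owns how each step is carried out.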

I don't think it is realistic to try to make a programming language be everything to everybody. The language becomes bloated, hard to learn, and hard to read if everything plus the kitchen sink is thrown in. In other words, every language has its limitations. As system architects and designers, we should be able to fully and, more importantly, correctly utilize the available tools and features (this also means that you shouldn’t use a ballistic missile to kill a fly, or hire the FBI to catch it) to build usable, sustainable, maintainable and, very importantly, expandable software systems that make full use of the language's features to bring a competitively advanced system to customers. To do that, the foundation of a system plays a vital role. The design or architecture of a software system is that foundation. It holds the system together, so designing a system properly (which never means *over*-designing it) is the key to success. When you talk about designing a software system, the correct handling of OOP concepts is very important. I have made this article rich in ideas but kept it short, so that one can learn or recall all of the important concepts at a glance. I hope you all enjoy reading it.

Finally, after reading all this, one may argue that anybody can write these concept definitions, and ask whether I know how and when to apply them in real-world systems. To see these concepts applied in a real-world system, please check the source code of my latest open-source project, Rocket Framework.

Note: For newbies, Rocket Framework is going to be a little too advanced, but check it, use it and review it. If you have any questions or criticisms about my design, don't hesitate to post them here or there.

Note:

This article was originally published to GameDev.net back in 2000. It was revised by the original author in 2008 and published in the book Beginning Game Programming: A GameDev.net Collection, which is one of 4 books collecting both popular GameDev.net articles and new original content in print format.

Note:

We'd like to update this document once again from its 2008 version, so please use the comments to add new language sections and we will place them in the article. Additions and updates to current sections are welcome as well!

\[ multiline formula goes here \]

\( inline formula goes here \)

<b>The Lorenz Equations</b>

\[\begin{aligned}
\dot{x} & = \sigma(y-x) \\
\dot{y} & = \rho x - y - xz \\
\dot{z} & = -\beta z + xy
\end{aligned} \]

<b>The Cauchy-Schwarz Inequality</b>

\[ \left( \sum_{k=1}^n a_k b_k \right)^2 \leq \left( \sum_{k=1}^n a_k^2 \right) \left( \sum_{k=1}^n b_k^2 \right) \]

<b>A Cross Product Formula</b>

\[\mathbf{V}_1 \times \mathbf{V}_2 = \begin{vmatrix}
\mathbf{i} & \mathbf{j} & \mathbf{k} \\
\frac{\partial X}{\partial u} & \frac{\partial Y}{\partial u} & 0 \\
\frac{\partial X}{\partial v} & \frac{\partial Y}{\partial v} & 0
\end{vmatrix} \]

<b>The probability of getting \(k\) heads when flipping \(n\) coins is</b>

\[P(E) = {n \choose k} p^k (1-p)^{ n-k} \]

<b>An Identity of Ramanujan</b>

\[ \frac{1}{\Bigl(\sqrt{\phi \sqrt{5}}-\phi\Bigr) e^{\frac25 \pi}} =
1+\frac{e^{-2\pi}} {1+\frac{e^{-4\pi}} {1+\frac{e^{-6\pi}}
{1+\frac{e^{-8\pi}} {1+\ldots} } } } \]

<b>A Rogers-Ramanujan Identity</b>

\[ 1 + \frac{q^2}{(1-q)}+\frac{q^6}{(1-q)(1-q^2)}+\cdots =
\prod_{j=0}^{\infty}\frac{1}{(1-q^{5j+2})(1-q^{5j+3})},
\quad\quad \text{for $|q|<1$}. \]

<b>Maxwell's Equations</b>

\[ \begin{aligned}
\nabla \times \vec{\mathbf{B}} -\, \frac1c\, \frac{\partial\vec{\mathbf{E}}}{\partial t} & = \frac{4\pi}{c}\vec{\mathbf{j}} \\
\nabla \cdot \vec{\mathbf{E}} & = 4 \pi \rho \\
\nabla \times \vec{\mathbf{E}}\, +\, \frac1c\, \frac{\partial\vec{\mathbf{B}}}{\partial t} & = \vec{\mathbf{0}} \\
\nabla \cdot \vec{\mathbf{B}} & = 0
\end{aligned} \]

#include <stdio.h>

// Assume this is opened elsewhere.

FILE *_log;

static void onWindowClose( GLFWwindow* win ) {

fputs( "The window is closing!", _log );

// Tell the main loop to exit

setExitFlag();

}

void initEventHandlersForThisWindow( GLFWwindow* win ) {

// Pass the callback to glfw

glfwSetWindowCloseCallback( win, onWindowClose );

}

import std.stdio;

// Imaginary module that defines an App class

import mygame.foo.app;

// Assume this is opened elsewhere.

File _log;

// private at module scope makes the function local to the module,

// just as a static function in C. This is going to be passed to

// glfw as a callback, so it must be declared as extern( C ).

private extern( C ) void onWindowClose( GLFWwindow* win ) {

// Calling a D function

_log.writeln( "The window is closing!" );

// Tell the app to exit.

myApp.stop();

}

void initEventHandlersForThisWindow( GLFWwindow* win ) {

// Pass the window and the callback to glfw, as in the C version.

glfwSetWindowCloseCallback( win, &onWindowClose );

}

Throws:

Exception if the file is not opened. ErrnoException on an error writing to the file.

// In D, class references are automatically initialized to null.

private Throwable _rethrow;

private extern( C ) void onWindowClose( GLFWwindow* win ) {

try {

_log.writeln( "The window is closing!" );

myApp.stop();

} catch( Throwable t ) {

// Save the exception so it can be rethrown below.

_rethrow = t;

}

}

void pumpEvents() {

glfwPollEvents();

// The C function has returned, so it is safe to rethrow the exception now.

if( _rethrow !is null ) {

throw _rethrow;

}

}

The first rule of Throwable is that you do not catch Throwable. If you do decide to catch it, you can't count on struct destructors being called and finally clauses being executed.

void foo() {

try { ... }

catch( Throwable t ) {

throw new MyException( "Something went horribly wrong!", t );

}

}

void bar() {

try {

foo();

} catch( MyException me ) {

// Do something with MyException

me.doSomething();

// Throw the original

throw me.next;

}

}

try { ... }

catch( Throwable t ) {

// Chain the previously saved exception to t.

t.next = _rethrow;

// Save t.

_rethrow = t;

}

class CallbackThrowable : Throwable {

// This is for the previously saved CallbackThrowable, if any.

CallbackThrowable nextCT;

// The file and line params aren't strictly necessary, but they are good

// for debugging.

this( Throwable payload, CallbackThrowable t,

string file = __FILE__, size_t line = __LINE__ ) {

// Call the super class constructor that takes file and line info,

// and make the wrapped exception part of this exception's chain.

super( "An exception was thrown from a C callback.", file, line, payload );

// Store a reference to the previously saved CallbackThrowable

nextCT = t;

}

// This method aids in propagating non-Exception throwables up the callstack.

void throwWrappedError() {

// Test if the wrapped Throwable is an instance of Exception and throw it

// if it isn't. This will cause Errors and any other non-Exception Throwable

// to be rethrown.

if( cast( Exception )next is null ) {

throw next;

}

}

}

private CallbackThrowable _rethrow;

private extern( C ) void onWindowClose( GLFWwindow* win ) {

try {

_log.writeln( "The window is closing!" );

myApp.stop();

} catch( Throwable t ) {

// Save a new CallbackThrowable that wraps t and chains _rethrow.

_rethrow = new CallbackThrowable( t, _rethrow );

}

}

void pumpEvents() {

glfwPollEvents();

if( _rethrow !is null ) {

// Loop through each CallbackThrowable in the chain and rethrow the first

// non-Exception throwable encountered.

for( auto ct = _rethrow; ct !is null; ct = ct.nextCT ) {

ct.throwWrappedError();

}

// No Errors were caught, so all we have are Exceptions.

// Throw the saved CallbackThrowable.

throw _rethrow;

}

}

This time I want to talk about the 'printf' function. Everybody has heard of software vulnerabilities and that functions like 'printf' are outlawed. But it's one thing to know that you'd better not use these functions, and quite another to understand why. In this article, I will describe two classic software vulnerabilities related to 'printf'. You won't become a hacker after that, but perhaps you will take a fresh look at your code. You might be creating similar vulnerable functions in your project without knowing it.

STOP. Reader, please stop, don't pass by. You have seen the word "printf", I know. And you're sure that you will now be told a banal story that the function cannot check types of passed arguments. No! It's vulnerabilities themselves that the article deals with, not the things you have thought. Please come and read it.

The previous post can be found here: Part one.

Have a look at this line:

printf(name);

It seems simple and safe. But actually it hides at least two methods to attack the program.

Let's start with a demo sample containing this line. The code might look a bit odd, and it really is. We found it quite difficult to write a program that could actually be attacked. The reason is the optimization performed by the compiler. If you write a program that is too simple, the compiler generates code in which nothing can be hacked: it uses registers rather than the stack to store data, substitutes intrinsic functions, and so on. We could write code with extra actions and loops so that the compiler ran out of free registers and started putting data on the stack. Unfortunately, the code would then be too large and complicated. We could write a whole detective story about all this, but we won't.

The cited sample is a compromise between complexity and the necessity to create a code that would not be too simple for the compiler to get it "collapsed into nothing". I have to confess that I still have helped myself a bit: I have disabled some optimization options in Visual Studio 2010. First, I have turned off the /GL (Whole Program Optimization) switch. Second, I have used the __declspec(noinline) attribute.

Sorry for such a long introduction: I just wanted to explain why my code is such a crock and prevent beforehand any debates on how we could write it in a better way. I know that we could. But we didn't manage to make the code short and show you the vulnerability inside it at the same time.

The complete code and project for Visual Studio 2010 can be found here: printf_demo.zip (3.47 KB).


const size_t MAX_NAME_LEN = 60;

enum ErrorStatus {

E_ToShortName, E_ToShortPass, E_BigName, E_OK

};

void PrintNormalizedName(const char *raw_name)

{

char name[MAX_NAME_LEN + 1];

strcpy(name, raw_name);

for (size_t i = 0; name[i] != '\0'; ++i)

name[i] = tolower(name[i]);

name[0] = toupper(name[0]);

printf(name);

}

ErrorStatus IsCorrectPassword(

const char *universalPassword,

BOOL &retIsOkPass)

{

string name, password;

printf("Name: "); cin >> name;

printf("Password: "); cin >> password;

if (name.length() < 1) return E_ToShortName;

if (name.length() > MAX_NAME_LEN) return E_BigName;

if (password.length() < 1) return E_ToShortPass;

retIsOkPass =

universalPassword != NULL &&

strcmp(password.c_str(), universalPassword) == 0;

if (!retIsOkPass)

retIsOkPass = name[0] == password[0];

printf("Hello, ");

PrintNormalizedName(name.c_str());

return E_OK;

}

int _tmain(int, char *[])

{

_set_printf_count_output(1);

char universal[] = "_Universal_Pass_!";

BOOL isOkPassword = FALSE;

ErrorStatus status =

IsCorrectPassword(universal, isOkPassword);

if (status == E_OK && isOkPassword)

printf("\nPassword: OK\n");

else

printf("\nPassword: ERROR\n");

return 0;

}

The _tmain() function calls the IsCorrectPassword() function. If the password is correct or if it coincides with the magic word "_Universal_Pass_!", then the program prints the line "Password: OK". The purpose of our attacks will be to have the program print this very line.

The IsCorrectPassword() function asks the user to specify name and password. The password is considered correct if it coincides with the magic word passed into the function. It is also considered correct if the password's first letter coincides with the name's first letter.

Regardless of whether the correct password is entered or not, the application shows a welcome window. The PrintNormalizedName() function is called for this purpose.

The PrintNormalizedName() function is of the most interest. It is this function where the "printf(name);" we're discussing is stored. Think of the way we can exploit this line to cheat the program. If you know how to do it, you don't have to read further.

What does the PrintNormalizedName() function do? It prints the name with the first letter capitalized and the remaining letters in lowercase. For instance, if you enter the name "andREy2008", it will be printed as "Andrey2008".

Suppose we don't know the correct password. But we know that there is some magic password somewhere. Let's try to find it using printf(). If this password's address is stored somewhere in the stack, we have certain chances to succeed. Any ideas how to get this password printed on the screen?

Here is a tip. The printf() function belongs to the family of variable-argument functions. These functions work in the following way. Some amount of data is written onto the stack. The printf() function doesn't know how much data has been pushed or what types it has. It follows only the format string. If it reads "%d%s", then the function extracts one value of the int type and one pointer from the stack. Since the printf() function doesn't know how many arguments it has been passed, it can look deeper into the stack and print data that has nothing to do with it. This usually causes an access violation or prints garbage. And we may exploit this garbage.

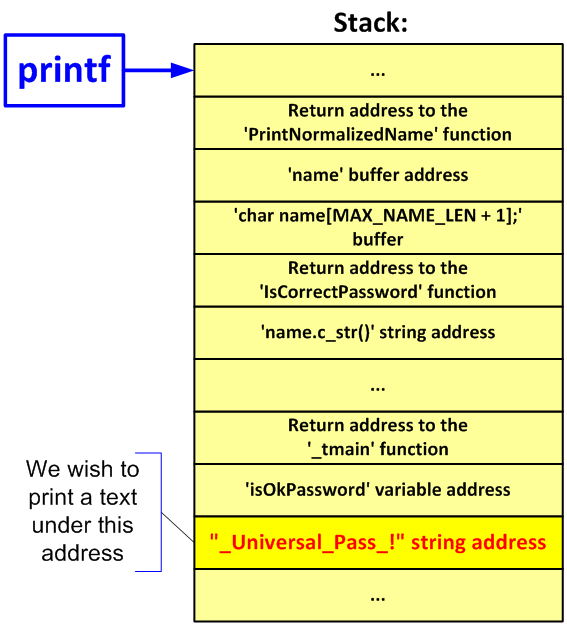

Let's see how the stack might look at the moment when calling the printf() function:

Figure 1. Schematic arrangement of data in the stack.

The "printf(name);" function's call has only one argument which is the format string. It means that if we type in "%d" instead of the name, the program will print the data that lie in the stack before the PrintNormalizedName() function's return address. Let's try:

Name: %d

Password: 1

Hello, 37

Password: ERROR

This action has little sense in it for now. First of all, we have at least to print the return addresses and all the contents of the char name[MAX_NAME_LEN + 1] buffer which is located in the stack too. And only then we may get to something really interesting.

If an attacker cannot disassemble or debug the program, he/she cannot know for sure if there is something interesting in the stack to be found. He/she still can go the following way.

First we can enter: "%s". Then "%x%s". Then "%x%x%s" and so on. Doing so, the hacker will search through the data in the stack in turn and try to print them as a line. It helps the intruder that all the data in the stack are aligned at least on a 4-byte boundary.

To be honest, we won't succeed if we go this way. We will exceed the limit of 60 characters and have nothing useful printed. "%f" will help us - it is intended to print values of the double type. So, we can use it to move along the stack with an 8-byte step.

Here it is, our dear line:

%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%x(%s)

This is the result:

Figure 2. Printing the password.

Let's try this line as the magic password:

Name: Aaa

Password: _Universal_Pass_!

Hello, Aaa

Password: OK

Hurrah! We have managed to find and print the private data which the program didn't intend to give us access to. Note also that you don't have to get access to the application's binary code itself. Diligence and persistence are enough.

You should give a wider consideration to this method of getting private data. When developing software containing variable-argument functions, think it over if there are cases when they may be the source of data leakage. It can be a log-file, a batch passed on the network and the like.
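For the log-file case mentioned above, the same reasoning can be sketched in C++. The helper name `formatLogLine` and the 128-byte buffer are assumptions made for this illustration only. The format string is a fixed literal, so any specifiers inside the untrusted text are copied as plain characters and never interpreted.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper: formats untrusted text as data, never as a format.
// Because the format string is the literal "Hello, %s", sequences such as
// "%d" or "%s" inside `untrusted` are treated as ordinary characters.
inline std::string formatLogLine(const std::string& untrusted) {
    char buffer[128];  // snprintf truncates and null-terminates if too long
    int written = std::snprintf(buffer, sizeof buffer, "Hello, %s",
                                untrusted.c_str());
    assert(written >= 0);  // a negative value signals an encoding error
    return std::string(buffer);
}
```

Calling `formatLogLine("%d%s")` yields the text `Hello, %d%s` instead of reading anything from the stack.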

In the case we have considered, the attack is possible because the printf() function receives a string that may contain control commands. To avoid this, you just need to write it in this way:

printf("%s", name);

Do you know that the printf() function can modify memory? You must have read about it but forgotten. We mean the "%n" specifier. It allows you to write the number of characters already printed by the printf() function to a certain address.

To be honest, an attack based on the "%n" specifier is just of a historical character. Starting with Visual Studio 2005, the capability of using "%n" is off by default. To perform this attack, I had to explicitly allow this specifier. Here is this magic trick:

_set_printf_count_output(1);

To make it clearer, let me give you an example of using "%n":

int i;

printf("12345%n6789\n", &i);

printf( "i = %d\n", i );

The program's output:

123456789

i = 5

We have already found out how to get to the needed pointer in the stack. And now we have a tool that allows us to modify memory by this pointer.

Of course, it's not very much convenient to use it. To start with, we can write only 4 bytes at a time (int type's size). If we need a larger number, the printf() function will have to print very many characters first. To avoid this we may use the "%00u" specifier: it affects the value of the current number of output bytes. Let's not go deep into the detail.

Our case is simpler: we just have to write any value not equal to 0 into the isOkPassword variable. This variable's address is passed into the IsCorrectPassword() function, which means that it is stored somewhere in the stack. Do not be confused by the fact that the variable is passed as a reference: a reference is an ordinary pointer at the low level.

Here is the line that will allow us to modify the isOkPassword variable:

%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f%f %n

The "%n" specifier does not take into account the number of characters printed by specifiers like "%f". That's why we make one space before "%n" to write value 1 into isOkPassword.

Let's try:

Figure 3. Writing into memory.

Are you impressed? But that's not all. We may perform writing by virtually any address. If the printed line is stored in the stack, we may get the needed characters and use them as an address.

For example, we may write a string containing characters with codes '\xF8', '\x32', '\x01', '\x7F' in a row. It turns out that the string contains a hard-coded number equivalent to the value 0x7F0132F8. We add the "%n" specifier at the end. Using "%x" or other specifiers we can get to the coded number 0x7F0132F8 and write the number of printed characters to this address. This method has some limitations, but it is still very interesting.
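Why those four bytes read as 0x7F0132F8 can be checked with a short C++ sketch. It assumes a little-endian machine such as x86, where the first byte in memory is the least significant one; the function names `littleEndianValue` and `demoAddressBytes` are hypothetical. The value is assembled with explicit shifts, so the sketch itself gives the same answer on any platform.

```cpp
#include <cassert>
#include <cstdint>

// Combines four bytes the way a little-endian CPU reads a 32-bit value:
// bytes[0] is the least significant byte, bytes[3] the most significant.
inline std::uint32_t littleEndianValue(const unsigned char bytes[4]) {
    return  static_cast<std::uint32_t>(bytes[0])
         | (static_cast<std::uint32_t>(bytes[1]) << 8)
         | (static_cast<std::uint32_t>(bytes[2]) << 16)
         | (static_cast<std::uint32_t>(bytes[3]) << 24);
}

// The byte sequence from the text, in the order it appears in the string.
inline void demoAddressBytes() {
    const unsigned char payload[4] = {0xF8, 0x32, 0x01, 0x7F};
    assert(littleEndianValue(payload) == 0x7F0132F8u);
}
```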

We may say that an attack of the second type is hardly possible nowadays. As you see, support of the "%n" specifier is off by default in contemporary libraries. But you may create a self-made mechanism subject to this kind of vulnerability. Be careful whenever external input to your program controls what is written into memory and where.

Particularly in our case, we may avoid the problem by writing the code in this way:

printf("%s", name);

We have considered only two simple examples of vulnerabilities here. Surely, there are many more of them. We don't attempt to describe or even enumerate them in this article; we wanted to show you that even such a simple construct as "printf(name)" can be dangerous.

There is an important conclusion to draw from all this: if you are not a security expert, you'd better follow all the recommendations to be found. Their point might be too subtle for you to grasp the whole range of dangers on your own. You must have read that the printf() function is dangerous. But I'm sure that many of you reading this article have learned only now how deep the rabbit hole is.

If you create an application that is potentially an attack object, be very careful. What is quite safe code from your viewpoint might contain a vulnerability. If you don't see a catch in your code, it doesn't mean there isn't any.

Follow all the compiler's recommendations on using updated versions of string functions. We mean using sprintf_s instead of sprintf and so on.

It's even better if you refuse low-level string handling. These functions are a heritage of the C language. Now we have std::string and we have safe methods of string formatting such as boost::format or std::stringstream.
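As a rough illustration of that advice, the name normalization from the demo could be written without a fixed-size buffer, strcpy() or printf(). This is a sketch, not the article's code: `normalizeName`, `greet` and `demoGreet` are hypothetical names, and std::ostringstream is used only as one of the safe formatting options mentioned above.

```cpp
#include <cassert>
#include <cctype>
#include <sstream>
#include <string>

// Lowercases the whole name and capitalizes the first character.
// std::string grows as needed, so there is no buffer to overflow.
inline std::string normalizeName(std::string name) {
    for (char& c : name)
        c = static_cast<char>(std::tolower(static_cast<unsigned char>(c)));
    if (!name.empty())
        name[0] = static_cast<char>(
            std::toupper(static_cast<unsigned char>(name[0])));
    return name;
}

// Builds the greeting with a stream: the name is always inserted as data,
// so format specifiers inside it have no special meaning.
inline std::string greet(const std::string& rawName) {
    std::ostringstream out;
    out << "Hello, " << normalizeName(rawName);
    return out.str();
}

// Small self-check using the inputs discussed in the article.
inline void demoGreet() {
    assert(greet("andREy2008") == "Hello, Andrey2008");
    assert(greet("%d%s") == "Hello, %d%s");
}
```

The casts to unsigned char matter because passing a negative char value to std::tolower or std::toupper is undefined behavior.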

P.S. Some of you, having read the conclusions, may say: "well, it's as clear as day". But be honest with yourself. Did you know and remember that printf() can write into memory before you read this article? Well, this is a great vulnerability. At least, it used to be. Now there are others, just as insidious.

// c3dmarkersa.cpp

SColor C3DMarkerSA::GetColor()

{

DEBUG_TRACE("RGBA C3DMarkerSA::GetColor()");

// From ABGR

unsigned long ulABGR = this->GetInterface()->rwColour;

SColor color;

color.A = ( ulABGR >> 24 ) && 0xff;

color.B = ( ulABGR >> 16 ) && 0xff;

color.G = ( ulABGR >> 8 ) && 0xff;

color.R = ulABGR && 0xff;

return color;

}

// cchatechopacket.h

class CChatEchoPacket : public CPacket

{

....

inline void SetColor( unsigned char ucRed,

unsigned char ucGreen,

unsigned char ucBlue )

{ m_ucRed = ucRed; m_ucGreen = ucGreen; m_ucRed = ucRed; };

....

}

{ m_ucRed = ucRed; m_ucGreen = ucGreen; m_ucBlue = ucBlue; };

// utf8.h

int

utf8_wctomb (unsigned char *dest, wchar_t wc, int dest_size)

{

if (!dest)

return 0;

int count;

if (wc < 0x80)

count = 1;

else if (wc < 0x800)

count = 2;

else if (wc < 0x10000)

count = 3;

else if (wc < 0x200000)

count = 4;

else if (wc < 0x4000000)

count = 5;

else if (wc <= 0x7fffffff)

count = 6;

else

return RET_ILSEQ;

....

}

// cpackethandler.cpp

void CPacketHandler::Packet_ServerDisconnected (....)

{

....

case ePlayerDisconnectType::BANNED_IP:

strReason = _("Disconnected: You are banned.\nReason: %s");

strErrorCode = _E("CD33");

bitStream.ReadString ( strDuration );

case ePlayerDisconnectType::BANNED_ACCOUNT:

strReason = _("Disconnected: Account is banned.\nReason: %s");

strErrorCode = _E("CD34");

break;

....

}

// cvehicleupgrades.cpp

bool CVehicleUpgrades::IsUpgradeCompatible (

unsigned short usUpgrade )

{

....

case 402: return ( us == 1009 || us == 1009 || us == 1010 );

....

}

// cclientplayervoice.h

bool IsTempoChanged(void)

{

return m_fSampleRate != 0.0f ||

m_fSampleRate != 0.0f ||

m_fTempo != 0.0f;

}

// cgame.cpp

void CGame::Packet_PlayerJoinData(CPlayerJoinDataPacket& Packet)

{

....

// Add the player

CPlayer* pPlayer = m_pPlayerManager->Create (....);

if ( pPlayer )

{

....

}

else

{

// Tell the console

CLogger::LogPrintf(

"CONNECT: %s failed to connect "

"(Player Element Could not be created.)\n",

pPlayer->GetSourceIP() );

}

....

}

// clientcommands.cpp

void COMMAND_MessageTarget ( const char* szCmdLine )

{

if ( !(szCmdLine || szCmdLine[0]) )

return;

....

}

if ( !(szCmdLine && szCmdLine[0]) )

// cdirect3ddata.cpp

void CDirect3DData::GetTransform (....)

{

switch ( dwRequestedMatrix )

{

case D3DTS_VIEW:

memcpy (pMatrixOut, &m_mViewMatrix, sizeof(D3DMATRIX));

break;

case D3DTS_PROJECTION:

memcpy (pMatrixOut, &m_mProjMatrix, sizeof(D3DMATRIX));

break;

case D3DTS_WORLD:

memcpy (pMatrixOut, &m_mWorldMatrix, sizeof(D3DMATRIX));

break;

default:

// Zero out the structure for the user.

memcpy (pMatrixOut, 0, sizeof ( D3DMATRIX ) );

break;

}

....

}

// cperfstat.functiontiming.cpp

std::map < SString, SFunctionTimingInfo > m_TimingMap;

void CPerfStatFunctionTimingImpl::DoPulse ( void )

{

....

// Do nothing if not active

if ( !m_bIsActive )

{

m_TimingMap.empty ();

return;

}

....

}

// cclientcolsphere.cpp

void CreateSphereFaces (

std::vector < SFace >& faceList, int iIterations )

{

int numFaces = (int)( pow ( 4.0, iIterations ) * 8 );

faceList.empty ();

faceList.reserve ( numFaces );

....

}

Note: If you have started reading the article from the middle and therefore skipped the beginning, see the file mtasa-review.txt to find out exact locations of all the bugs.

// crashhandler.cpp

LPCTSTR __stdcall GetFaultReason(EXCEPTION_POINTERS * pExPtrs)

{

....

PIMAGEHLP_SYMBOL pSym = (PIMAGEHLP_SYMBOL)&g_stSymbol ;

FillMemory ( pSym , NULL , SYM_BUFF_SIZE ) ;

....

}

#define RtlFillMemory(Destination,Length,Fill) \
    memset((Destination),(Fill),(Length))

#define FillMemory RtlFillMemory

FillMemory ( pSym , SYM_BUFF_SIZE, 0 ) ;

// hash.hpp

unsigned char m_buffer[64];

void CMD5Hasher::Finalize ( void )

{

....

// Zeroize sensitive information

memset ( m_buffer, 0, sizeof (*m_buffer) );

....

}

// ceguiwindow.cpp

Vector2 Window::windowToScreen(const UVector2& vec) const

{

Vector2 base = d_parent ?

d_parent->windowToScreen(base) + getAbsolutePosition() :

getAbsolutePosition();

....

}

// cjoystickmanager.cpp

struct

{

bool bEnabled;

long lMax;

long lMin;

DWORD dwType;

} axis[7];

bool CJoystickManager::IsXInputDeviceAttached ( void )

{

....

m_DevInfo.axis[6].bEnabled = 0;

m_DevInfo.axis[7].bEnabled = 0;

....

}

// cwatermanagersa.cpp

class CWaterPolySAInterface

{

public:

WORD m_wVertexIDs[3];

};

CWaterPoly* CWaterManagerSA::CreateQuad ( const CVector& vecBL, const CVector& vecBR, const CVector& vecTL, const CVector& vecTR, bool bShallow )

{

....

pInterface->m_wVertexIDs [ 0 ] = pV1->GetID ();

pInterface->m_wVertexIDs [ 1 ] = pV2->GetID ();

pInterface->m_wVertexIDs [ 2 ] = pV3->GetID ();

pInterface->m_wVertexIDs [ 3 ] = pV4->GetID ();

....

}

// cmainmenu.cpp

#define CORE_MTA_NEWS_ITEMS 3

CGUILabel* m_pNewsItemLabels[CORE_MTA_NEWS_ITEMS];

CGUILabel* m_pNewsItemShadowLabels[CORE_MTA_NEWS_ITEMS];

void CMainMenu::SetNewsHeadline (....)

{

....

for ( char i=0; i <= CORE_MTA_NEWS_ITEMS; i++ )

{

m_pNewsItemLabels[ i ]->SetFont ( szFontName );

m_pNewsItemShadowLabels[ i ]->SetFont ( szFontName );

....

}

....

}

// fallistheader.cpp

ListHeaderSegment*

FalagardListHeader::createNewSegment(const String& name) const

{

if (d_segmentWidgetType.empty())

{

InvalidRequestException(

"FalagardListHeader::createNewSegment - "

"Segment widget type has not been set!");

}

return ....;

}

// ceguistring.cpp

bool String::grow(size_type new_size)

{

// check for too big

if (max_size() <= new_size)

std::length_error(

"Resulting CEGUI::String would be too big");

....

}

// cresourcechecker.cpp

int CResourceChecker::ReplaceFilesInZIP(....)

{

....

// Load file into a buffer

buf = new char[ ulLength ];

if ( fread ( buf, 1, ulLength, pFile ) != ulLength )

{

free( buf );

buf = NULL;

}

....

}

// cproxydirect3ddevice9.cpp

#define D3DCLEAR_ZBUFFER 0x00000002l

HRESULT CProxyDirect3DDevice9::Clear(....)

{

if ( Flags | D3DCLEAR_ZBUFFER )

CGraphics::GetSingleton().

GetRenderItemManager()->SaveReadableDepthBuffer();

....

}

// crenderitem.effectcloner.cpp

unsigned long long Get ( void );

void CEffectClonerImpl::MaybeTidyUp ( void )

{

....

if ( m_TidyupTimer.Get () < 0 )

return;

....

}

// cluaacldefs.cpp

int CLuaACLDefs::aclListRights ( lua_State* luaVM )

{

char szRightName [128];

....

strncat ( szRightName, (*iter)->GetRightName (), 128 );

....

}

// cscreenshot.cpp

void CScreenShot::BeginSave (....)

{

....

HANDLE hThread = CreateThread (

NULL,

0,

(LPTHREAD_START_ROUTINE)CScreenShot::ThreadProc,

NULL,

CREATE_SUSPENDED,

NULL );

....

}

#define OUTSIDE 0

#define INSIDE 1

#define INTERSECT 2 // or 3 depending on the algorithm used (see the discussion on the 2nd SSE version)

struct Vector3f

{

float x, y, z;

};

struct AABB

{

Vector3f m_Center;

Vector3f m_Extent;

};

struct Plane

{

float nx, ny, nz, d;

};

void CullAABBList(AABB* aabbList, unsigned int numAABBs, Plane* frustumPlanes, unsigned int* aabbState);

// Performance (cycles/AABB): Average = 102.5 (stdev = 12.0)

void CullAABBList_C(AABB* aabbList, unsigned int numAABBs, Plane* frustumPlanes, unsigned int* aabbState)

{

for(unsigned int iAABB = 0;iAABB < numAABBs;++iAABB)

{

const Vector3f& aabbCenter = aabbList[iAABB].m_Center;

const Vector3f& aabbSize = aabbList[iAABB].m_Extent;

unsigned int result = INSIDE; // Assume that the aabb will be inside the frustum

for(unsigned int iPlane = 0;iPlane < 6;++iPlane)

{

const Plane& frustumPlane = frustumPlanes[iPlane];

float d = aabbCenter.x * frustumPlane.nx +

aabbCenter.y * frustumPlane.ny +

aabbCenter.z * frustumPlane.nz;

float r = aabbSize.x * fast_fabsf(frustumPlane.nx) +

aabbSize.y * fast_fabsf(frustumPlane.ny) +

aabbSize.z * fast_fabsf(frustumPlane.nz);

float d_p_r = d + r;

float d_m_r = d - r;

if(d_p_r < -frustumPlane.d)

{

result = OUTSIDE;

break;

}

else if(d_m_r < -frustumPlane.d)

result = INTERSECT;

}

aabbState[iAABB] = result;

}

}

// Performance (cycles/AABB): Average = 84.9 (stdev = 12.3)

void CullAABBList_C_Opt(AABB* __restrict aabbList, unsigned int numAABBs, Plane* __restrict frustumPlanes, unsigned int* __restrict aabbState)

{

Plane absFrustumPlanes[6];

for(unsigned int iPlane = 0;iPlane < 6;++iPlane)

{

absFrustumPlanes[iPlane].nx = fast_fabsf(frustumPlanes[iPlane].nx);

absFrustumPlanes[iPlane].ny = fast_fabsf(frustumPlanes[iPlane].ny);

absFrustumPlanes[iPlane].nz = fast_fabsf(frustumPlanes[iPlane].nz);

}

for(unsigned int iAABB = 0;iAABB < numAABBs;++iAABB)

{

const Vector3f& aabbCenter = aabbList[iAABB].m_Center;

const Vector3f& aabbSize = aabbList[iAABB].m_Extent;

unsigned int result = INSIDE; // Assume that the aabb will be inside the frustum

for(unsigned int iPlane = 0;iPlane < 6;++iPlane)

{

const Plane& frustumPlane = frustumPlanes[iPlane];

const Plane& absFrustumPlane = absFrustumPlanes[iPlane];

float d = aabbCenter.x * frustumPlane.nx +

aabbCenter.y * frustumPlane.ny +

aabbCenter.z * frustumPlane.nz;

float r = aabbSize.x * absFrustumPlane.nx +

aabbSize.y * absFrustumPlane.ny +

aabbSize.z * absFrustumPlane.nz;

float d_p_r = d + r + frustumPlane.d;

if(IsNegativeFloat(d_p_r))

{

result = OUTSIDE;

break;

}

float d_m_r = d - r + frustumPlane.d;

if(IsNegativeFloat(d_m_r))

result = INTERSECT;

}

aabbState[iAABB] = result;

}

}
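
The two C listings above call fast_fabsf and IsNegativeFloat without showing their definitions. The following is a minimal sketch of what such helpers might look like, assuming 32-bit IEEE-754 floats and assuming both work directly on the sign bit. The bodies are illustrative guesses, not the author's original implementations.

```cpp
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == sizeof(std::uint32_t), "sketch assumes 32-bit float");

// Hypothetical definition: absolute value obtained by clearing the sign bit.
inline float fast_fabsf(float x)
{
    std::uint32_t bits;
    std::memcpy(&bits, &x, sizeof bits);   // well-defined way to read the bit pattern
    bits &= 0x7FFFFFFFu;                   // drop the sign bit, keep exponent and mantissa
    std::memcpy(&x, &bits, sizeof x);
    return x;
}

// Hypothetical definition: true when the sign bit is set.
// Unlike (x < 0.0f), this also reports -0.0f as negative.
inline bool IsNegativeFloat(float x)
{
    std::uint32_t bits;
    std::memcpy(&bits, &x, sizeof bits);
    return (bits >> 31) != 0;
}
```

The mask 0x7FFFFFFF is the same one the SSE listings store in absPlaneMask for the nx, ny and nz components, so the scalar and vector versions would clear the sign bit in the same way.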

// 2013-09-10: Moved outside of the function body. Check comments by @Matias Goldberg for details.

__declspec(align(16)) static const unsigned int absPlaneMask[4] = {0x7FFFFFFF, 0x7FFFFFFF, 0x7FFFFFFF, 0xFFFFFFFF};

// Performance (cycles/AABB): Average = 63.9 (stdev = 10.8)

void CullAABBList_SSE_1(AABB* aabbList, unsigned int numAABBs, Plane* frustumPlanes, unsigned int* aabbState)

{

__declspec(align(16)) Plane absFrustumPlanes[6];

__m128 xmm_absPlaneMask = _mm_load_ps((float*)&absPlaneMask[0]);

for(unsigned int iPlane = 0;iPlane < 6;++iPlane)

{

__m128 xmm_frustumPlane = _mm_load_ps(&frustumPlanes[iPlane].nx);

__m128 xmm_absFrustumPlane = _mm_and_ps(xmm_frustumPlane, xmm_absPlaneMask);

_mm_store_ps(&absFrustumPlanes[iPlane].nx, xmm_absFrustumPlane);

}

for(unsigned int iAABB = 0;iAABB < numAABBs;++iAABB)

{

__m128 xmm_aabbCenter_x = _mm_load_ss(&aabbList[iAABB].m_Center.x);

__m128 xmm_aabbCenter_y = _mm_load_ss(&aabbList[iAABB].m_Center.y);

__m128 xmm_aabbCenter_z = _mm_load_ss(&aabbList[iAABB].m_Center.z);

__m128 xmm_aabbExtent_x = _mm_load_ss(&aabbList[iAABB].m_Extent.x);

__m128 xmm_aabbExtent_y = _mm_load_ss(&aabbList[iAABB].m_Extent.y);

__m128 xmm_aabbExtent_z = _mm_load_ss(&aabbList[iAABB].m_Extent.z);

unsigned int result = INSIDE; // Assume that the aabb will be inside the frustum

for(unsigned int iPlane = 0;iPlane < 6;++iPlane)

{

__m128 xmm_frustumPlane_Component = _mm_load_ss(&frustumPlanes[iPlane].nx);

__m128 xmm_d = _mm_mul_ss(xmm_aabbCenter_x, xmm_frustumPlane_Component);

xmm_frustumPlane_Component = _mm_load_ss(&frustumPlanes[iPlane].ny);

xmm_d = _mm_add_ss(xmm_d, _mm_mul_ss(xmm_aabbCenter_y, xmm_frustumPlane_Component));

xmm_frustumPlane_Component = _mm_load_ss(&frustumPlanes[iPlane].nz);

xmm_d = _mm_add_ss(xmm_d, _mm_mul_ss(xmm_aabbCenter_z, xmm_frustumPlane_Component));

__m128 xmm_absFrustumPlane_Component = _mm_load_ss(&absFrustumPlanes[iPlane].nx);

__m128 xmm_r = _mm_mul_ss(xmm_aabbExtent_x, xmm_absFrustumPlane_Component);

xmm_absFrustumPlane_Component = _mm_load_ss(&absFrustumPlanes[iPlane].ny);

xmm_r = _mm_add_ss(xmm_r, _mm_mul_ss(xmm_aabbExtent_y, xmm_absFrustumPlane_Component));

xmm_absFrustumPlane_Component = _mm_load_ss(&absFrustumPlanes[iPlane].nz);

xmm_r = _mm_add_ss(xmm_r, _mm_mul_ss(xmm_aabbExtent_z, xmm_absFrustumPlane_Component));

__m128 xmm_frustumPlane_d = _mm_load_ss(&frustumPlanes[iPlane].d);

__m128 xmm_d_p_r = _mm_add_ss(_mm_add_ss(xmm_d, xmm_r), xmm_frustumPlane_d);

__m128 xmm_d_m_r = _mm_add_ss(_mm_sub_ss(xmm_d, xmm_r), xmm_frustumPlane_d);

// Shuffle d_p_r and d_m_r in order to perform only one _mm_movemask_ps

__m128 xmm_d_p_r__d_m_r = _mm_shuffle_ps(xmm_d_p_r, xmm_d_m_r, _MM_SHUFFLE(0, 0, 0, 0));

int negativeMask = _mm_movemask_ps(xmm_d_p_r__d_m_r);

// Bit 0 holds the sign of d + r and bit 2 holds the sign of d - r

if(negativeMask & 0x01)

{

result = OUTSIDE;

break;

}

else if(negativeMask & 0x04)

result = INTERSECT;

}

aabbState[iAABB] = result;

}

}
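
The sign test at the end of the inner loop of CullAABBList_SSE_1 can be tried in isolation. The sketch below assumes an x86 target with SSE available. SignMaskOfPair is a hypothetical helper introduced only for illustration; it packs two scalars with the same shuffle as the listing and returns the resulting movemask.

```cpp
#include <xmmintrin.h>

// Hypothetical helper: packs d + r and d - r as the listing does and
// returns their sign bits as a 4-bit mask.
inline int SignMaskOfPair(float dPlusR, float dMinusR)
{
    __m128 a = _mm_set_ss(dPlusR);    // lane 0 = d + r, other lanes zero
    __m128 b = _mm_set_ss(dMinusR);   // lane 0 = d - r, other lanes zero

    // Result lanes are { a0, a0, b0, b0 }.
    __m128 packed = _mm_shuffle_ps(a, b, _MM_SHUFFLE(0, 0, 0, 0));

    // Bits 0 and 1 carry the sign of d + r, bits 2 and 3 the sign of d - r.
    return _mm_movemask_ps(packed);
}
```

With this layout, testing the mask against 0x01 corresponds to the OUTSIDE check and testing it against 0x04 corresponds to the INTERSECT check in the listing.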

// 2013-09-10: Moved outside of the function body. Check comments by @Matias Goldberg for details.

__declspec(align(16)) static const unsigned int absPlaneMask[4] = {0x7FFFFFFF, 0x7FFFFFFF, 0x7FFFFFFF, 0xFFFFFFFF};

// Performance (cycles/AABB): Average = 24.1 (stdev = 4.2)

void CullAABBList_SSE_4(AABB* aabbList, unsigned int numAABBs, Plane* frustumPlanes, unsigned int* aabbState)

{

__declspec(align(16)) Plane absFrustumPlanes[6];

__m128 xmm_absPlaneMask = _mm_load_ps((float*)&absPlaneMask[0]);

for(unsigned int iPlane = 0;iPlane < 6;++iPlane)

{

__m128 xmm_frustumPlane = _mm_load_ps(&frustumPlanes[iPlane].nx);

__m128 xmm_absFrustumPlane = _mm_and_ps(xmm_frustumPlane, xmm_absPlaneMask);

_mm_store_ps(&absFrustumPlanes[iPlane].nx, xmm_absFrustumPlane);

}

// Process 4 AABBs in each iteration...

unsigned int numIterations = numAABBs >> 2;

for(unsigned int iIter = 0;iIter < numIterations;++iIter)

{

// NOTE: Since the aabbList is 16-byte aligned, we can use aligned moves.

// Load the 4 Center/Extents pairs for the 4 AABBs.

__m128 xmm_cx0_cy0_cz0_ex0 = _mm_load_ps(&aabbList[(iIter << 2) + 0].m_Center.x);

__m128 xmm_ey0_ez0_cx1_cy1 = _mm_load_ps(&aabbList[(iIter << 2) + 0].m_Extent.y);

__m128 xmm_cz1_ex1_ey1_ez1 = _mm_load_ps(&aabbList[(iIter << 2) + 1].m_Center.z);

__m128 xmm_cx2_cy2_cz2_ex2 = _mm_load_ps(&aabbList[(iIter << 2) + 2].m_Center.x);

__m128 xmm_ey2_ez2_cx3_cy3 = _mm_load_ps(&aabbList[(iIter << 2) + 2].m_Extent.y);

__m128 xmm_cz3_ex3_ey3_ez3 = _mm_load_ps(&aabbList[(iIter << 2) + 3].m_Center.z);

// Shuffle the data in order to get all Xs, Ys, etc. in the same register.

__m128 xmm_cx0_cy0_cx1_cy1 = _mm_shuffle_ps(xmm_cx0_cy0_cz0_ex0, xmm_ey0_ez0_cx1_cy1, _MM_SHUFFLE(3, 2, 1, 0));

__m128 xmm_cx2_cy2_cx3_cy3 = _mm_shuffle_ps(xmm_cx2_cy2_cz2_ex2, xmm_ey2_ez2_cx3_cy3, _MM_SHUFFLE(3, 2, 1, 0));

__m128 xmm_aabbCenter0123_x = _mm_shuffle_ps(xmm_cx0_cy0_cx1_cy1, xmm_cx2_cy2_cx3_cy3, _MM_SHUFFLE(2, 0, 2, 0));

__m128 xmm_aabbCenter0123_y = _mm_shuffle_ps(xmm_cx0_cy0_cx1_cy1, xmm_cx2_cy2_cx3_cy3, _MM_SHUFFLE(3, 1, 3, 1));

__m128 xmm_cz0_ex0_cz1_ex1 = _mm_shuffle_ps(xmm_cx0_cy0_cz0_ex0, xmm_cz1_ex1_ey1_ez1, _MM_SHUFFLE(1, 0, 3, 2));

__m128 xmm_cz2_ex2_cz3_ex3 = _mm_shuffle_ps(xmm_cx2_cy2_cz2_ex2, xmm_cz3_ex3_ey3_ez3, _MM_SHUFFLE(1, 0, 3, 2));

__m128 xmm_aabbCenter0123_z = _mm_shuffle_ps(xmm_cz0_ex0_cz1_ex1, xmm_cz2_ex2_cz3_ex3, _MM_SHUFFLE(2, 0, 2, 0));

__m128 xmm_aabbExtent0123_x = _mm_shuffle_ps(xmm_cz0_ex0_cz1_ex1, xmm_cz2_ex2_cz3_ex3, _MM_SHUFFLE(3, 1, 3, 1));

__m128 xmm_ey0_ez0_ey1_ez1 = _mm_shuffle_ps(xmm_ey0_ez0_cx1_cy1, xmm_cz1_ex1_ey1_ez1, _MM_SHUFFLE(3, 2, 1, 0));