Abstract

About a year ago we published a series of articles on our blog about developing Visual Studio plug-ins in C#. We have recently revised those materials and added new sections, and we now invite you to have a look at the updated version of the manual.

Creating extension packages (plug-ins) for the Microsoft Visual Studio IDE appears to be quite an easy task at first sight. There is excellent MSDN documentation, as well as various articles, examples and many other sources on the topic. At the same time, it can turn into a difficult task when unexpected behavior is encountered along the way. Although such issues are common to any programming task, the subject of IDE plug-in development is still not thoroughly covered at the moment.

We develop the PVS-Studio static code analyzer. Although the tool itself is intended for C++ developers, quite a large part of it is written in C#. When we were just starting the development of our plug-in, Visual Studio 2005 was considered a modern, state-of-the-art IDE. Although now, at the time of the Visual Studio 2012 release, some could say that Visual Studio 2005 is no longer relevant, we still support this version in our tool. While supporting various Visual Studio versions and exploring the capabilities of the environment, we have accumulated a lot of practical experience on how to correctly (and even more so incorrectly!) develop IDE plug-ins. As holding all of this knowledge inside us was becoming unbearable, we decided to publish it here. Some of our solutions that seem quite obvious now took several years to discover, and the same issues could still haunt other plug-in developers.

The following topics will be covered:

- basic information on creating and debugging MSVS plug-ins and maintaining these extensibility projects for several versions of Visual Studio inside a common source code base;

- overview of Automation Object Model and various Managed Package Framework (MPF) classes;

- extending interface of the IDE through the automation object model's API (EnvDTE) and MPF (Managed Package Framework) classes with custom menus, toolbars, windows and options pages;

- overview of Visual Studio project model; Atmel Studio IDE, which is based on Visual Studio Isolated Shell, as an example of interaction with custom third-party project models;

- utilizing Visual C++ project model for gathering data needed to operate an external preprocessor/compiler, such as compilation arguments and settings for different platforms and configurations.

More detailed references for the topics covered here are available at the end of each topic, as links to the MSDN library and several other external resources.

The articles cover extension development only for Visual Studio 2005 and later versions. This limitation reflects the fact that PVS-Studio itself supports integration with Visual Studio only from version 8 (Visual Studio 2005) onward. The main reason is that a new extensibility API model was introduced in Visual Studio 2005, and it is not backward-compatible with the previous IDE extensibility APIs.

Creating, debugging and deploying extension packages for Microsoft Visual Studio 2005/2008/2010/2012

This item contains the overview of several different methods for extending Visual Studio IDE functionality. The creation, debugging, registration and end-user deployment of Visual Studio extension packages will be explained in detail.

Creating and debugging Visual Studio and Visual Studio Isolated Shell VSPackage extension modules

There are a number of ways to extend Microsoft Visual Studio features. At the most basic level, simple routine user actions can be automated using macros. An Add-In plug-in module can be used to obtain access to the environment's UI objects, such as menu commands, windows etc. The IDE's internal editors can be extended through MEF (Managed Extensibility Framework) components (starting with MSVS 2010). Finally, a plug-in of the Extension Package type (known as VSPackage) is best suited for integrating large independent components into Visual Studio. VSPackage allows combining the environment automation through the Automation Object Model with the use of Managed Package Framework classes (such as Package). In fact, while Visual Studio itself provides only the basic interface components and services, such standard modules as Visual C++ or Visual C# are themselves implemented as IDE extensions.

In its earlier versions (1.xx and 2.xx to be precise, when it was still known as Viva64), the PVS-Studio plug-in existed as an Add-In package. Starting with PVS-Studio 3.0 it was redesigned as a VSPackage, because the functionality an Add-In could provide had become insufficient for the tasks at hand, and the debugging process was quite inconvenient. Besides, we wanted to have our own logo on the Visual Studio splash screen!

VSPackage also provides the means of extending the automation model itself by registering user-defined custom automation objects within it. Such user automation objects will become available through the same automation model to other user-created extensibility packages, providing these packages with access to your custom components. This, in turn, allows third-party developers to add the support of new programming languages and compilers through such extensions into the IDE and also to provide interfaces for the automation of these new components as well.

Besides extending the Visual Studio environment itself, VSPackage extensions can be used to add new features to Visual Studio Isolated\Integrated shells. An isolated\integrated shell allows any third-party developer to reuse the basic interface components and services of Visual Studio (such as the code editor, the autocompletion system etc.), and also to implement support for other custom project models and\or compilers. Such a distribution will not include any of Microsoft's proprietary language modules (such as Visual C++, Visual Basic and so on), and it can be installed by an end user even if their system does not contain a previous Visual Studio installation.

An isolated shell application remains a separate entity after installation even if the system contains a previous Visual Studio installation, whereas an integrated shell application is merged into the preinstalled version. If the developer of an isolated\integrated shell extends the Visual Studio automation model by adding interfaces to his or her custom components, all other developers of VSPackage extensions will be able to use such components as well. Atmel Studio, an IDE designed for the development of embedded systems, is an example of a Visual Studio Isolated Shell application. Atmel Studio uses its own custom project model, which is itself an implementation of the standard Visual Studio project model for MSBuild, together with a specific version of the gcc compiler.

Projects for VSPackage plug-in modules. Creating the extension package.

Let's examine the creation of a Visual Studio Package plug-in (VSPackage extension). Unlike Add-In plug-ins, developing VS extension packages requires installing the Microsoft Visual Studio SDK for the targeted version of the IDE, i.e. a separate SDK should be installed for every version of Visual Studio for which an extension is being developed. For an extension that targets a Visual Studio Isolated\Integrated Shell, the SDK for the version of Visual Studio on which the shell is based is required.

We will be examining extension development for the 2005, 2008, 2010 and 2012 versions of Visual Studio and for Visual Studio 2010 based Isolated Shells. Installing the Visual Studio SDK adds a standard project template for Visual Studio Package (on the 'Other Project Types -> Extensibility' page) to the VS template manager. If selected, this template generates a basic MSBuild project for an extension package and allows several parameters to be specified beforehand, such as the programming language to be used and the automatic generation of stub components for generic UI elements, such as a menu item, an editor, a user tool window etc.

We will be using a C# VSPackage project (csproj), which is a project for a managed dynamic-link library (DLL). The corresponding csproj MSBuild project for this managed assembly also contains several XML nodes specific to a Visual Studio package, such as VSCT compiler and IncludeInVSIX (in later IDE versions).

The main class of an extension package should be inherited from Microsoft.VisualStudio.Shell.Package. This base class provides managed wrappers for the IDE interaction APIs that a fully functional Visual Studio extension package is required to implement:

// The main package class derives from the MPF Package base class.
public sealed class MyPackage : Package
{
    public MyPackage()
    {}
    ...
}

The Package class allows overriding its base Initialize method. This method receives control at the moment the package is initialized in the current IDE session.

protected override void Initialize()
{
    // Let the base class complete its own initialization first.
    base.Initialize();
    ...
}

The initialization of the module will occur when it is invoked for the first time, but it also could be triggered automatically, for example after IDE is started or when user enters a predefined environment UI context state.

Being aware of the package's initialization and shutdown timings is crucial. It is quite possible for a developer to request some Visual Studio functionality at a moment when it is still unavailable to the package. During PVS-Studio development we encountered several situations in which the environment "punished us" for not understanding this. For instance, a package is not allowed to "straightforwardly" display message boxes after Visual Studio has entered its shutdown process.

Debugging extension packages. Experimental Instance.

Debugging a plug-in module or extension intended for an integrated development environment is not a trivial task. Quite often such an environment is itself used for the plug-in's development and debugging. Hooking up an unstable module to this IDE can lead to instability of the environment itself. The necessity to uninstall a module under development from the IDE before every debugging session, which in turn often requires restarting it, is also a major inconvenience (the IDE could lock the DLL that needs to be replaced by a newer version for debugging).

It should be noted that a VSPackage debugging process in this aspect is substantially easier than that of an Add-In package. This was one of the reasons for changing the project type of PVS-Studio plug-in.

VSPackage solves the aforementioned development and debugging issues by utilizing the Visual Studio Experimental Instance mechanism. Such an experimental instance can easily be started by passing a special command-line argument:

"C:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\devenv.exe" /RootSuffix Exp

An experimental instance of the environment utilizes a separate independent Windows registry hive (called experimental hive) for storing all of its settings and component registration data. As such, any modifications in the IDE's settings or changes in its component registration data, which were made inside the experimental hive, will not affect the instance which is employed for the development of the module (that is your main regular instance which is used by default).

Visual Studio SDK provides a special tool for creating or resetting such experimental instances: CreateExpInstance. To create a new experimental hive, it should be executed with these arguments:

CreateExpInstance.exe /Reset /VSInstance=10.0 /RootSuffix=PVSExp

Executing this command will create a new experimental registry hive with a PVSExp suffix in its name for the 10th version of IDE (Visual Studio 2010), also resetting all of its settings to their default values in advance. The registry path for this new instance will look as follows:

HKEY_CURRENT_USER\Software\Microsoft\VisualStudio\10.0PVSExp

While the Exp suffix is used by default for package debugging inside the VSPackage template project, the developer can also create other experimental hives with unique names at will. To start an instance of the environment for the hive we created earlier (containing PVSExp in its name), these arguments should be used:

"C:\Program Files (x86)\Microsoft Visual Studio 10.0\Common7\IDE\devenv.exe" /RootSuffix PVSExp

The ability to create several different experimental hives on a single local workstation can be quite useful, for example for developing several extension packages simultaneously and in isolation from each other.
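The naming scheme described above (the IDE version followed by the hive suffix) can be captured in a small helper. The following is only a minimal sketch: the ExperimentalHive class and its method names are hypothetical and not part of the Visual Studio SDK, and the code merely composes the strings shown earlier in this section.

```csharp
using System;

// Hypothetical helper for illustration; not part of the Visual Studio SDK.
public static class ExperimentalHive
{
    // Registry root (relative to HKEY_CURRENT_USER) of a Visual Studio hive.
    // An empty suffix yields the root of the regular, non-experimental hive.
    public static string GetRegistryRoot(string vsVersion, string rootSuffix)
    {
        return @"Software\Microsoft\VisualStudio\" + vsVersion + rootSuffix;
    }

    // Arguments for devenv.exe that start the IDE against the given hive.
    public static string GetDevenvArguments(string rootSuffix)
    {
        return "/RootSuffix " + rootSuffix;
    }

    // Arguments for CreateExpInstance.exe that create or reset the hive.
    public static string GetResetArguments(string vsVersion, string rootSuffix)
    {
        return "/Reset /VSInstance=" + vsVersion + " /RootSuffix=" + rootSuffix;
    }
}
```

For the hive used in this section, ExperimentalHive.GetRegistryRoot("10.0", "PVSExp") produces Software\Microsoft\VisualStudio\10.0PVSExp, which matches the registry path given above.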

After installing the SDK package, a link is created in the Visual Studio program's menu group for resetting the default Experimental Instance for this version of the IDE (for instance, "Reset the Microsoft Visual Studio 2010 Experimental Instance").

For an extension targeting an Isolated Shell, the issue of "corrupting" the development environment is irrelevant, so there is no need to use an Experimental Instance. In any case, the sooner you figure out how the debugging environment works, the fewer issues you will encounter in understanding how plug-in initialization works during development.

Registering and deploying Visual Studio extension packages

Registering a VS extension package requires registering a package itself, as well as registering all of the components it integrates into the IDE (for example, menu items, option pages, user windows etc.). The registration is accomplished by creating records corresponding to these components inside the main system registry hive of Visual Studio.

After your VSPackage is built, all the information required for registration is placed inside a special pkgdef file, according to several special attributes of the main class of your package (which itself should be a subclass of the MPF Package class). The pkgdef file can also be created manually using the CreatePkgDef tool. This tool collects all of the required module registration information from these special attributes by means of .NET reflection. Let's study these registration attributes in detail.

The PackageRegistration attribute tells the registration tool that this class is indeed a Visual Studio extension package. Only if this attribute is discovered will the tool perform its search for additional ones.

[PackageRegistration(UseManagedResourcesOnly = true)]

The Guid attribute specifies a unique package module identifier, which will be used for creating a registry sub-key for this module in the Visual Studio hive.

[Guid("a0fcf0f3-577e-4c47-9847-5f152c16c02c")]

The InstalledProductRegistration attribute adds information to the 'Visual Studio Help -> About' dialog and the loading splash screen.

[InstalledProductRegistration("#110", "#112", "1.0", IconResourceID = 400)]

The ProvideAutoLoad attribute links automatic module initialization with the activation of a specified environment UI context. When a user enters this context, the package will be automatically loaded and initialized. This is an example of tying module initialization to the opening of a solution file:

[ProvideAutoLoad("D2567162-F94F-4091-8798-A096E61B8B50")]

The GUID values for different IDE UI contexts can be found in the Microsoft.VisualStudio.VSConstants.UICONTEXT class.

The ProvideMenuResource attribute specifies the ID of the resource that contains user-created menus and commands, so that they can be registered inside the IDE.

[ProvideMenuResource("Menus.ctmenu", 1)]

The DefaultRegistryRoot attribute specifies a path to be used for writing registration data to the system registry. Starting with Visual Studio 2010 this attribute can be dropped, as the corresponding data will be present in the manifest file of a VSIX container. An example of registering a package for Visual Studio 2008:

[DefaultRegistryRoot("Software\\Microsoft\\VisualStudio\\9.0")]

Registration of user-created components, such as tool windows, editors, option pages etc., also requires adding their corresponding attributes to the user's Package subclass. We will examine these attributes separately when we discuss the corresponding components individually.
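Taken together, the attributes described above could be applied to a package class roughly as in the following sketch. It assumes a project that targets Visual Studio 2010 or later (so DefaultRegistryRoot is omitted) and references the Visual Studio SDK assemblies. The GUIDs and resource IDs are simply the sample values from this section; a real package defines its own.

```csharp
using System.Runtime.InteropServices;
using Microsoft.VisualStudio.Shell;

// Sample values only: the GUIDs and the #110/#112/400 resource IDs are
// the illustrative ones used in the text, not values to copy as-is.
[PackageRegistration(UseManagedResourcesOnly = true)]
[Guid("a0fcf0f3-577e-4c47-9847-5f152c16c02c")]
[InstalledProductRegistration("#110", "#112", "1.0", IconResourceID = 400)]
[ProvideAutoLoad("D2567162-F94F-4091-8798-A096E61B8B50")]
[ProvideMenuResource("Menus.ctmenu", 1)]
public sealed class MyPackage : Package
{
    protected override void Initialize()
    {
        base.Initialize();
        // Package-specific initialization goes here.
    }
}
```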

It's also possible to write any user-defined registry keys (and values to already existing keys) during package registration through custom user registration attributes. Such attributes can be created by inheriting from the RegistrationAttribute abstract class.

[AttributeUsage(AttributeTargets.Class, Inherited = true, AllowMultiple = false)]
public class CustomRegistrationAttribute : RegistrationAttribute
{
    // Overrides of Register and Unregister go here.
}

A RegistrationAttribute-derived attribute must override its Register and Unregister methods, which are used to modify registration information in the system registry.
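A possible shape of these two overrides is sketched below. It follows the general pattern of the MSDN article on custom registration attributes listed in the references; the "Custom" sub-key and the "NewCustom" value name are placeholders chosen for illustration, and the code assumes a reference to the Visual Studio SDK assembly that contains Microsoft.VisualStudio.Shell.

```csharp
using System;
using Microsoft.VisualStudio.Shell;

[AttributeUsage(AttributeTargets.Class, Inherited = true, AllowMultiple = false)]
public sealed class CustomRegistrationAttribute : RegistrationAttribute
{
    // Builds a key path below the package's own registry entry.
    // The trailing "Custom" sub-key is a hypothetical name.
    private static string GetKeyPath(RegistrationContext context)
    {
        return @"Packages\" + context.ComponentType.GUID.ToString("B") + @"\Custom";
    }

    // Called when registration data is produced: creates the key
    // and writes a sample value into it.
    public override void Register(RegistrationContext context)
    {
        Key key = null;
        try
        {
            key = context.CreateKey(GetKeyPath(context));
            key.SetValue("NewCustom", 1);
        }
        finally
        {
            if (key != null)
                key.Close();
        }
    }

    // Called on unregistration: removes the key created by Register.
    public override void Unregister(RegistrationContext context)
    {
        context.RemoveKey(GetKeyPath(context));
    }
}
```

The attribute is then placed on the Package subclass next to the standard registration attributes, so that the registration tool discovers it through reflection in the same way.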

The RegPkg tool can be used for writing registration data to the Windows registry. It adds all of the keys from the pkgdef file passed to it into the registry hive specified by the /root argument. For instance, RegPkg is used by default in the Visual Studio VSPackage project template for registering the module in the Visual Studio experimental hive, which provides convenient, seamless debugging of the package being developed. After all of the registration information has been added to the registry, Visual Studio (devenv.exe) should be started with the '/setup' switch to complete the registration of new components inside the IDE.

Deploying plug-ins for developers and end-users. Package Load Key.

Before proceeding to describe the deployment process itself, one particular rule should be stressed:

Each time a new version of the distribution containing your plug-in is created, it should be tested on a system without Visual Studio SDK installed, to make sure that it will be registered correctly on the end-user system.

Today, with the releases of the early versions of PVS-Studio behind us, we do not experience these kinds of issues, but several of those early versions were prone to them.

Deploying a package for Visual Studio 2005/2008 requires launching the RegPkg tool for a pkgdef file and passing it the path to the Visual Studio main registry hive. Alternatively, all keys from a pkgdef file can be written to the Windows registry manually. Here is an example of automatically writing all the registration data from a pkgdef file with the RegPkg tool (in a single line):

RegPkg.exe /root:Software\Microsoft\VisualStudio\9.0Exp "/pkgdeffile:obj\Debug\PVS-Studio-vs2008.pkgdef" "C:\MyPackage\MyPackage.dll"

After adding the registration information to the system registry, it is necessary to start Visual Studio with a /setup switch to complete the component registration. It is usually the last step in the installation procedure of a new plug-in.

Devenv.exe /setup

Starting the environment with this switch instructs Visual Studio to absorb resource metadata for user-created components from all available extension packages, so that these components will be correctly displayed by the IDE's interface. Starting devenv with this switch does not open its main GUI window.
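In a custom installer this final step can be performed programmatically. The sketch below uses only System.Diagnostics from the .NET base class library; the SetupStep class and its method names are hypothetical, and the path to devenv.exe is assumed to be supplied by the caller (locating the IDE installation is outside the scope of this example).

```csharp
using System;
using System.Diagnostics;

// Hypothetical installer helper for illustration.
public static class SetupStep
{
    // Describes a "devenv.exe /setup" launch without starting it.
    public static ProcessStartInfo CreateSetupStartInfo(string devenvPath)
    {
        return new ProcessStartInfo
        {
            FileName = devenvPath,
            Arguments = "/setup",
            UseShellExecute = false,
            CreateNoWindow = true
        };
    }

    // Runs the setup pass, waits for it to finish and returns the exit code.
    public static int RunSetup(string devenvPath)
    {
        using (Process process = Process.Start(CreateSetupStartInfo(devenvPath)))
        {
            process.WaitForExit();
            return process.ExitCode;
        }
    }
}
```

Separating the creation of the ProcessStartInfo from the actual launch keeps the command line easy to inspect or log before the installer runs it.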

We do not employ the RegPkg utility as part of PVS-Studio deployment; instead, our stand-alone installer writes the required data to the registry manually. We chose this method because we do not want to depend on external third-party tools and we want full control over the installation process. Still, we do use RegPkg during plug-in development for convenient debugging.

VSIX packages

Beginning with Visual Studio 2010, the VSPackage deployment process can be significantly simplified through the use of VSIX packages. A VSIX package is a common (Open Packaging Conventions) archive containing the plug-in's binary files and all of the other auxiliary files necessary for the plug-in's deployment. When such an archive is passed to the standard VSIXInstaller.exe utility, its contents are automatically registered in the IDE:

VSIXInstaller.exe MyPackage.vsix

The VSIX installer can also be used with the /uninstall switch to remove a previously installed package from a system. The unique GUID of the extension package should be used to identify the package:

VSIXInstaller.exe /uninstall:009084B1-6271-4621-A893-6D72F2B67A4D

Contents of a VSIX container are defined through the special vsixmanifest file, which should be added to plug-in's project. Vsixmanifest file permits the following properties to be defined for an extension:

- targeted Visual Studio versions and editions, which will be supported by the plug-in;

- a unique GUID identifier;

- a list of components to be registered (VSPackage, MEF components, toolbox control etc.);

- general information about the plug-in to be installed (description, license, version, etc.).

To include additional files in a VSIX container, the IncludeInVSIX node should be added to their declarations inside your MSBuild project (alternatively, they can be marked as included in VSIX from their respective property windows, opened from the Visual Studio Solution Explorer).

<Content Include="MyPackage.pdb">
    <IncludeInVSIX>true</IncludeInVSIX>
</Content>

In fact, the VSIX file can be viewed as an almost full-fledged installer for extension packages on the latest versions of Visual Studio (2010 and 2012), allowing extensions to be deployed by a "one-click" method. Publishing your VSIX container in the official Visual Studio Gallery for extensions allows end-users to install such packages through the Tools -> Extension Manager IDE dialog.

VSIX allows an extension to be deployed either for one of the regular Visual Studio editions or for isolated\integrated shell based distributions. When developing an extension for an isolated shell application, the VSIX manifest file should contain a special identification string for the targeted environment instead of the Visual Studio version. For example, the identification string for Atmel Studio 6.1 should be "AtmelStudio, 6.1". However, if the extension you are developing uses only common automation model interfaces (such as the ones for the text editor, the abstract project tree and so on) and does not require any of the specific ones (for example, interfaces for Visual C++ projects), then you can specify several different editions of Visual Studio, as well as isolated shell based ones, in the manifest file. This, in turn, permits you to use a single installer for a wide range of Visual Studio based applications.

This new VSIX installation procedure in Visual Studio 2010 substantially simplifies package deployment for end-users (as well as for developers themselves). Some developers have even decided to support only the VS2010 IDE and later versions, just to avoid dealing with the development of a package and installer for earlier IDE versions.

Unfortunately, several issues can be encountered when using VSIX installer together with Visual Studio 2010 extension manager interface. For instance, sometimes the extension's binary files are not removed correctly after uninstall, which in turn blocks the VSIX installer from installing/reinstalling the same extension. As such, we advise you not to depend upon the VSIX installer entirely and to provide some backup, for example by directly removing your files from a previous plug-in installation before proceeding with a new one.

Package Load Key

Each VSPackage module loaded into Visual Studio must possess a unique Package Load Key (PLK). In the 2005/2008 versions of the IDE, the PLK is specified through the ProvideLoadKey attribute of the Package subclass.

[ProvideLoadKey("Standard", "9.99", "MyPackage", "My Company", 100)]

Starting with Visual Studio 2010, a PLK, and therefore the ProvideLoadKey attribute, is no longer required in a package, but it can still be specified if the module under development targets several versions of MSVS. The PLK can be obtained by registering at the Visual Studio Industry Partner portal; the key thus guarantees that the development environment can load only packages certified by Microsoft.

However, systems with Visual Studio SDK installed are an exception, as a Developer License Key is installed together with the SDK. It allows the corresponding IDE to load any extension package, regardless of the validity of its PLK.

Given this, it is worth stressing once more the importance of testing the distribution on a system without Visual Studio SDK present, because the extension package will operate properly on the developer's workstation regardless of whether its PLK is correct.

Extension registration specifics in the context of supporting several different versions of Visual Studio IDE

By default, the VSPackage project template generates an extensibility project for the version of Visual Studio that is used for the development. This is not a mandatory requirement though, so it is possible to develop an extension for a particular version of the IDE using a different one. It should also be noted that after a project file is automatically upgraded to a newer version through the devenv /Upgrade switch, the targeted version of the IDE and its corresponding managed API libraries remain unchanged, i.e. they still belong to the previous version of Visual Studio.

To change the target of the extension to another version of Visual Studio (or, to be more precise, to register an extension into this version), you should alter the values passed to the DefaultRegistryRoot attribute (only for 2005/2008 IDE versions, as starting from Visual Studio 2010 this attribute is no longer required) or change the target version in the VSIX manifest file (for versions above 2008).

VSIX support appears only starting from Visual Studio 2010, so building and debugging the plug-in targeted for the earlier IDE version from within Visual Studio 2010 (and later) requires setting up all the aforementioned registration steps manually, without VSIX manifest. While changing target IDE version one should also not forget to switch referenced managed assemblies, which contain COM interface wrappers utilized by the plug-in, to the corresponding versions as well.

Altering the IDE target version of the plug-in affects the following Package subclass attributes:

- starting from Visual Studio 2010, the InstalledProductRegistration attribute no longer provides the constructor overload with the (Boolean, String, String, String) signature;

- the presence of the DefaultRegistryRoot and ProvideLoadKey attributes is not mandatory starting from Visual Studio 2010, as similar values are now specified inside the VSIX manifest.

References

- MSDN. Experimental Build.

- MSDN. How to: Register a VSPackage.

- MSDN. VSIX Deployment.

- MSDN. How to: Obtain a PLK for a VSPackage.

- MZ-Tools. Resources about Visual Studio .NET extensibility.

- MSDN. Creating Add-ins and Wizards.

- MSDN. Using a Custom Registration Attribute to Register an Extension.

- MSDN. Shell (Integrated or Isolated).

Visual Studio Automation Object Model. EnvDTE and Visual Studio Shell Interop interfaces.

This item contains an overview of Visual Studio Automation Object Model. Model's overall structure and the means of obtaining access to its interfaces through DTE/DTE2 top level objects are examined. Several examples of utilizing elements of the model are provided. Also discussed are the issues of using model's interfaces within multithreaded applications; an example of implementing such mechanism for multithreaded interaction with COM interfaces in managed code is provided as well.

Introduction

The Visual Studio development environment is built upon the principles of automation and extensibility, giving developers the ability to integrate almost any custom element into the IDE and to interact easily with its default and user-created components. To accomplish these tasks, Visual Studio users are provided with several complementary toolsets. The most basic and versatile among these is the Visual Studio Automation Object Model.

Automation Object Model is represented by a series of libraries containing a vast and well-structured API set which covers all aspects of IDE automation and the majority of its extensibility capabilities. Although, in comparison to other IDE extensibility tools, this model does not provide access to some portions of Visual Studio (this applies mostly to the extension of some IDE's features), it is nonetheless the most flexible and versatile among them.

The majority of the model's interfaces are accessible from within every type of IDE extension module, which allows interacting with the environment even from an external independent process. Moreover, the model itself could be extended along with the extension of Visual Studio IDE, providing other third-party developers with an access to user-created custom components.

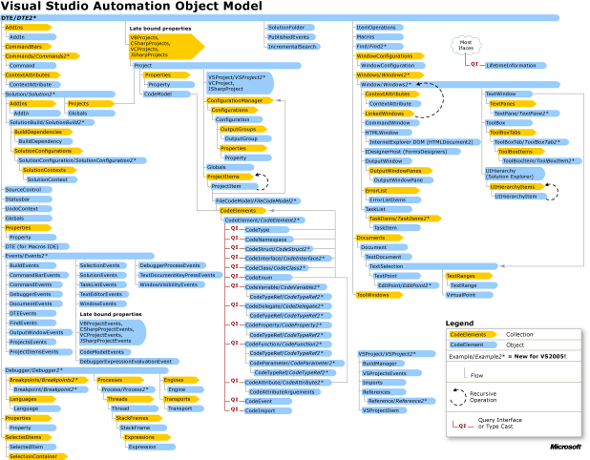

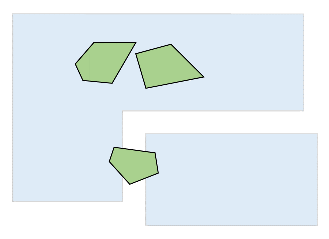

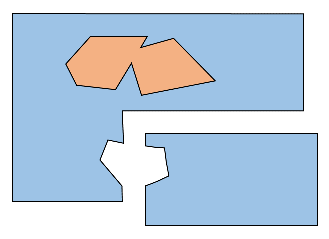

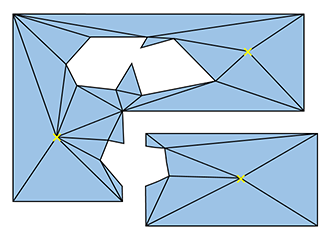

Automation Object Model structure

Visual Studio automation model is composed of several interconnected functional object groups covering all aspects of the development environment; it also provides capabilities for controlling and extending these groups. Accessing any of them is possible through the top-level global DTE interface (Development Tools Environment). Figure 1 shows the overall structure of the automation model and how it is divided among functionality groups.

![image1.png]()

Figure 1. Visual Studio Automation Object Model

The model itself can be extended by the user in one of the following groups:

- project models (implementing new project types, support for new languages);

- document models (implementing new document types and document editors);

- code editor level models (support for specific language constructs);

- project build-level models.

Automation model could be extended from plug-ins of VSPackage type only.

All of the automation model's interfaces can be conventionally subdivided into two large groups. The first group consists of the interfaces of the EnvDTE and Visual Studio Interop namespaces; these interfaces allow interaction with the basic common components of the IDE itself, such as tool windows, editors, event handling services and so on. The second group consists of the interfaces of a specific project model. The figure above shows this interface group as late-bound properties, i.e. these interfaces are implemented in a separate dynamically loaded library. Each standard project model (i.e. one included in a regular Visual Studio distribution), such as Visual C++ or Visual Basic, provides a separate implementation of these interfaces. Third-party developers are able to extend the automation model by adding their own custom project models and by providing an implementation of these automation interfaces.

Also worth noting is that the interfaces of the 1st group, which was specified above, are universal, meaning that they could be utilized for interaction with any of the project models or Visual Studio editions, including the integrated\isolated Visual Studio shells. In this article we will examine this group in more detail.

But still, despite the model's versatility, not every group belonging to the model could be equally utilized from all the types of IDE extensions. For instance, some of the model's capabilities are inaccessible to external processes; these capabilities are tied to specific extension types, such as Add-In or VSPackage. Therefore, when selecting the type for the extension to be developed, it is important to consider the functionality that this extension will require.

The Microsoft.VisualStudio.Shell.Interop namespace also provides a group of COM interfaces which can be used to extend and automate the Visual Studio application from managed code. The Managed Package Framework (MPF) classes, which we used earlier for creating a VSPackage plug-in, are themselves based on these interfaces. Although these interfaces are not a part of the EnvDTE automation model described above, they greatly enhance this model by providing VSPackage extensions with additional functionality that is unavailable to extensions of other types.

Obtaining references to DTE/DTE2 objects

To create a Visual Studio automation application, we first need access to the automation objects themselves. First, the project must reference the correct versions of the libraries that contain the managed API wrappers of the EnvDTE namespaces. Second, a reference to the top-level object of the automation model, that is, the DTE2 interface, must be obtained.

In the course of Visual Studio's evolution, several of its automation objects were modified or received additional functionality. To maintain backward compatibility with existing extension packages, new EnvDTE80, EnvDTE90, EnvDTE100 etc. namespaces were created instead of updating the interfaces of the original EnvDTE namespace. Most of the updated interfaces in these new namespaces keep the names of the original ones with an ordinal number added at the end, for example Solution and Solution2. It is advised to use these updated interfaces when creating a new project, as they contain the most recent functionality. It's worth noting that properties and methods of the DTE2 interface usually return object references with types corresponding to the original DTE, i.e. accessing dte2.Solution will return Solution and not Solution2 as one might expect.
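
As a minimal sketch of the point above, the extended interface can be reached with an explicit cast. The helper name GetSolution2 is hypothetical, and the example assumes that the project references the EnvDTE and EnvDTE80 assemblies.

```csharp
using EnvDTE;
using EnvDTE80;

internal static class SolutionAccess
{
    // Hypothetical helper, not part of the automation model itself.
    public static Solution2 GetSolution2(DTE2 dte2)
    {
        // DTE2.Solution is declared as EnvDTE.Solution, so the newer
        // interface has to be requested with an explicit cast.
        Solution solution = dte2.Solution;
        return (Solution2)solution;
    }
}
```

The same pattern should apply to the other numbered interfaces: obtain the object through its original type and cast it to the extended one where the newer members are needed.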

Although the new EnvDTE80, EnvDTE90 and EnvDTE100 namespaces contain some of the updated functionality, as mentioned above, the majority of automation objects still reside in the original EnvDTE namespace. Therefore, to have access to all of the existing interfaces, it is necessary to reference all versions of the managed COM wrapper libraries in the project, and to obtain references to both DTE and DTE2.

The way of obtaining the top-level EnvDTE object reference depends upon the type of IDE extension being developed. Let's examine three such extension types: Add-In, VSPackage and an MSVS-independent external process.

Add-In extension

In the case of an Add-In extension, access to the DTE interface can be obtained inside the OnConnection method, which should be implemented for the IDTExtensibility2 interface that provides access to the extension-environment interaction events. The OnConnection method is called at the moment the module is loaded by the IDE; this can happen either while the environment itself is being loaded or when the extension is called for the first time in the IDE session. An example of obtaining the reference follows:

public void OnConnection(object application,

ext_ConnectMode connectMode, object addInInst, ref Array custom)

{

_dte2 = (DTE2)application;

...

}

An Add-In module can be initialized either at the moment of IDE start-up, or when it is called for the first time in the current IDE session. So, the connectMode parameter can be used to correctly determine the moment of initialization inside the OnConnection method.

switch(connectMode)

{

case ext_ConnectMode.ext_cm_UISetup:

...

break;

case ext_ConnectMode.ext_cm_Startup:

...

break;

case ext_ConnectMode.ext_cm_AfterStartup:

...

break;

case ext_ConnectMode.ext_cm_CommandLine:

...

break;

}

As the example above shows, an Add-In can be loaded simultaneously with the IDE itself (if the startup option in the Add-In manager is checked), when it is called for the first time, or when it is called through the command line. The ext_ConnectMode.ext_cm_UISetup mode is used only once in the plug-in's overall lifetime, during its first initialization. This case should be used for initializing the UI elements that are to be integrated into the environment (more on this later on).

If an Add-In is being loaded during Visual Studio start-up (ext_ConnectMode.ext_cm_Startup), then at the moment the OnConnection method receives control for the first time, the IDE itself may not be fully initialized yet. In such a case, it is advised to postpone the acquisition of the DTE reference until the environment is fully loaded. The OnStartupComplete handler provided by IDTExtensibility2 can be used for this.

public void OnStartupComplete(ref Array custom)

{

...

}

VSPackage extension

For the VSPackage type of extension, the DTE can be obtained through the global Visual Studio service with the help of the GetService method of a Package subclass:

DTE dte = MyPackage.GetService(typeof(DTE)) as DTE;

Please note that the GetService method could potentially return null if Visual Studio is not fully loaded or initialized at the moment of such access, i.e. it is in the so-called "zombie" state. To handle this situation correctly, it is advised to postpone the acquisition of the DTE reference until the reference is actually needed. But if the DTE reference is required inside the Initialize method itself, the IVsShellPropertyEvents interface can be utilized (by implementing it in our Package subclass), and then the reference can be safely obtained inside the OnShellPropertyChange handler.

DTE dte;

uint cookie;

protected override void Initialize()

{

base.Initialize();

IVsShell shellService = GetService(typeof(SVsShell)) as IVsShell;

if (shellService != null)

ErrorHandler.ThrowOnFailure(

shellService.AdviseShellPropertyChanges(this,out cookie));

...

}

public int OnShellPropertyChange(int propid, object var)

{

// when zombie state changes to false, finish package initialization

if ((int)__VSSPROPID.VSSPROPID_Zombie == propid)

{

if ((bool)var == false)

{

this.dte = GetService(typeof(SDTE)) as DTE;

IVsShell shellService = GetService(typeof(SVsShell)) as IVsShell;

if (shellService != null)

ErrorHandler.ThrowOnFailure(

shellService.UnadviseShellPropertyChanges(this.cookie) );

this.cookie = 0;

}

}

return VSConstants.S_OK;

}

It should be noted that the process of VSPackage module initialization at IDE startup can vary between Visual Studio versions. For instance, in the case of VS2005 and VS2008, an attempt to access DTE during IDE startup will almost always result in null being returned, owing to the relatively fast loading times of these versions. But one does not simply obtain access to the DTE. In the case of Visual Studio 2010, it may appear that the DTE can simply be obtained from inside the Initialize() method. This impression is false: such a method of DTE acquisition can occasionally cause "floating" errors which are hard to identify and debug, and the DTE itself may still be uninitialized when the reference is acquired. Because of these disparities, the acquisition technique described above for handling IDE loading states should not be ignored on any version of Visual Studio.
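
The advice to postpone DTE acquisition until the reference is actually needed can be sketched as a lazily initialized property. This is only an illustration under stated assumptions: the class and property names are hypothetical, and a real package would also carry the usual registration attributes, which are omitted here.

```csharp
using EnvDTE;
using Microsoft.VisualStudio.Shell;
using Microsoft.VisualStudio.Shell.Interop;

public sealed class MyLazyDtePackage : Package
{
    private DTE _dte;

    // Hypothetical property: the service is requested on first use rather
    // than inside Initialize(). It can still yield null while the shell is
    // in the "zombie" state, so callers are expected to check the result.
    internal DTE Dte
    {
        get
        {
            if (_dte == null)
            {
                _dte = GetService(typeof(SDTE)) as DTE;
            }
            return _dte;
        }
    }
}
```

Because a null result is not cached, a later access retries the lookup, which fits the case where the first access happened too early.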

Independent external process

The DTE interface is a top-level abstraction for the Visual Studio environment in the automation model. To acquire a reference to this interface from an external application, its ProgID COM identifier can be utilized; for instance, it will be "VisualStudio.DTE.10.0" for Visual Studio 2010. Consider this example of initializing a new IDE instance and obtaining a reference to the DTE interface.

// Get the type registered for the DTE 10.0 ProgID (Visual Studio 2010).

System.Type t = System.Type.GetTypeFromProgID(

"VisualStudio.DTE.10.0", true);

// Create a new instance of the IDE.

object obj = System.Activator.CreateInstance(t, true);

// Cast the instance to DTE2 and assign to variable dte.

EnvDTE80.DTE2 dte = (EnvDTE80.DTE2)obj;

// Show IDE Main Window

dte.MainWindow.Activate();

In the example above we've actually created a new DTE object, starting the devenv.exe process with the CreateInstance method. The GUI window of the environment, however, will be displayed only after the Activate method is called.

Next, let's review a simple example of obtaining the DTE reference from an already running Visual Studio instance:

EnvDTE80.DTE2 dte2;

dte2 = (EnvDTE80.DTE2)

System.Runtime.InteropServices.Marshal.GetActiveObject(

"VisualStudio.DTE.10.0");

However, if several instances of Visual Studio are running at the moment of our inquiry, the GetActiveObject method will return a reference to the IDE instance that was started the earliest. Let's examine a possible way of obtaining the reference to DTE from a running Visual Studio instance by the PID of its process.

using EnvDTE80;

using System.Diagnostics;

using System.Runtime.InteropServices;

using System.Runtime.InteropServices.ComTypes;

[DllImport("ole32.dll")]

private static extern void CreateBindCtx(int reserved,

out IBindCtx ppbc);

[DllImport("ole32.dll")]

private static extern void GetRunningObjectTable(int reserved,

out IRunningObjectTable prot);

public static DTE2 GetByID(int ID)

{

//rot entry for visual studio running under current process.

string rotEntry = String.Format("!VisualStudio.DTE.10.0:{0}", ID);

IRunningObjectTable rot;

GetRunningObjectTable(0, out rot);

IEnumMoniker enumMoniker;

rot.EnumRunning(out enumMoniker);

enumMoniker.Reset();

IntPtr fetched = IntPtr.Zero;

IMoniker[] moniker = new IMoniker[1];

while (enumMoniker.Next(1, moniker, fetched) == 0)

{

IBindCtx bindCtx;

CreateBindCtx(0, out bindCtx);

string displayName;

moniker[0].GetDisplayName(bindCtx, null, out displayName);

if (displayName == rotEntry)

{

object comObject;

rot.GetObject(moniker[0], out comObject);

return (EnvDTE80.DTE2)comObject;

}

}

return null;

}

Here we've acquired the DTE interface by identifying the required instance of the IDE in the table of running COM objects (ROT, Running Object Table) by its process identifier. Now we can access the DTE for all of the executing instances of Visual Studio, for example:

Process Devenv;

...

//Get DTE by Process ID

EnvDTE80.DTE2 dte2 = GetByID(Devenv.Id);
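
To make the "all of the executing instances" part concrete, here is a hedged sketch that walks every devenv process and asks a lookup function for its DTE. The lookup is passed in as a delegate so that the block stays self-contained; it is assumed to be the GetByID method shown above. The class and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;
using EnvDTE80;

internal static class RunningIdeInstances
{
    // getById is assumed to be the GetByID method from the example above.
    public static List<DTE2> Collect(Func<int, DTE2> getById)
    {
        var result = new List<DTE2>();
        foreach (Process process in Process.GetProcessesByName("devenv"))
        {
            DTE2 dte = getById(process.Id);
            // null means that no matching ROT entry was found for this process.
            if (dte != null)
            {
                result.Add(dte);
            }
        }
        return result;
    }
}
```

A call could look like RunningIdeInstances.Collect(GetByID). Since GetByID as written searches for the "VisualStudio.DTE.10.0" moniker only, instances of other Visual Studio versions would be skipped.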

Additionally, to acquire any project-specific interface (including custom model extensions), for example the CSharpProjects model, through a valid DTE interface, the GetObject method should be utilized:

Projects projects = (Projects)dte.GetObject("CSharpProjects");

The GetObject method will return a Projects collection of regular Project objects, and each one of them will contain a reference to our project-specific properties, among other regular ones.
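
As a small illustration of working with the returned collection, the sketch below lists the names of the projects it contains. It assumes that the C# project model is installed, so that the "CSharpProjects" name resolves; the class and method names are hypothetical.

```csharp
using System.Collections.Generic;
using EnvDTE;

internal static class ProjectListing
{
    public static List<string> GetCSharpProjectNames(DTE dte)
    {
        var names = new List<string>();

        // Late-bound lookup of the project-model-specific collection.
        Projects projects = (Projects)dte.GetObject("CSharpProjects");

        // Projects is enumerable; each element is a regular EnvDTE.Project.
        foreach (Project project in projects)
        {
            names.Add(project.Name);
        }
        return names;
    }
}
```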

Visual Studio text editor documents

The automation model represents Visual Studio text documents through the TextDocument interface. For example, C/C++ source code files are opened by the environment as text documents. TextDocument is based upon the common automation model document interface (the Document interface), which represents a file of any type opened in a Visual Studio editor or designer. A reference to the text document object can be obtained through the Object method of the Document object. Let's acquire a text document for the currently active (i.e. the one possessing focus) document from the IDE's text editor.

EnvDTE.TextDocument objTextDoc =

(TextDocument)PVSStudio.DTE.ActiveDocument.Object("TextDocument");

Modifying documents

The TextSelection interface allows controlling the text selection or modifying it. The methods of this interface represent the functionality of the Visual Studio text editor, i.e. they allow interaction with the text as it is presented directly by the UI.

EnvDTE.TextDocument Doc =

(TextDocument)PVSStudio.DTE.ActiveDocument.Object(string.Empty);

Doc.Selection.SelectLine();

TextSelection Sel = Doc.Selection;

int CurLine = Sel.TopPoint.Line;

String Text = Sel.Text;

Sel.Insert("test\r\n");

In this example we selected a text line under the cursor, read the selected text and replaced it with a 'test' string.

The TextDocument interface also allows text modification through the EditPoint interface. This interface is somewhat similar to TextSelection, but instead of operating on the text through the editor UI, it directly manipulates text buffer data. The difference between them is that the text buffer is not influenced by such editor-specific notions as WordWrap and Virtual Spaces. It should be noted that neither of these editing methods is able to modify read-only text blocks.

Let's examine an example of modifying text with EditPoint by appending text to the end of the line that contains the cursor.

EditPoint objEditPt = objTextDoc.StartPoint.CreateEditPoint();

int lineNumber = objTextDoc.Selection.CurrentLine;

objEditPt.LineDown(lineNumber - 1);

EditPoint objEditPt2 = objTextDoc.StartPoint.CreateEditPoint();

objEditPt2.LineDown(lineNumber - 1);

objEditPt2.CharRight(objEditPt2.LineLength);

String line = objEditPt.GetText(objEditPt.LineLength);

String newLine = line + "test";

objEditPt.ReplaceText(objEditPt2, newLine,

(int)vsEPReplaceTextOptions.vsEPReplaceTextKeepMarkers);
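
The same result can probably be reached with fewer steps by creating the edit point directly at the caret. The following is a sketch under that assumption and not the code used in PVS-Studio; the class and method names are hypothetical.

```csharp
using EnvDTE;

internal static class LineEditing
{
    public static void AppendToCurrentLine(TextDocument textDocument, string suffix)
    {
        // Start from the caret position of the current selection...
        EditPoint editPoint = textDocument.Selection.ActivePoint.CreateEditPoint();

        // ...move to the end of that line in the text buffer...
        editPoint.EndOfLine();

        // ...and insert the text there, bypassing the editor UI.
        editPoint.Insert(suffix);
    }
}
```

Calling LineEditing.AppendToCurrentLine(objTextDoc, "test") would then correspond to the example above, without reading and replacing the whole line.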

Navigating the documents

VSPackage modules are able to obtain access to a series of global services which can be used for opening and handling environment documents. These services can be acquired by the Package.GetGlobalService() method from the Managed Package Framework. It should be noted that the services described here are not part of the EnvDTE model and are accessible only from a Package-type extension, and therefore they cannot be utilized in other types of Visual Studio extensions. Nonetheless, they can be quite useful for handling IDE documents when they are utilized in addition to the Documents interface described earlier. Next, we'll examine these services in more detail.

The IVsUIShellOpenDocument interface controls the state of documents opened in the environment. The following example uses this interface to open a document by the path to the file which this document will represent.

String path = @"C:\Test\test.cpp";

IVsUIShellOpenDocument openDoc =

Package.GetGlobalService(typeof(IVsUIShellOpenDocument))

as IVsUIShellOpenDocument;

IVsWindowFrame frame;

Microsoft.VisualStudio.OLE.Interop.IServiceProvider sp;

IVsUIHierarchy hier;

uint itemid;

Guid logicalView = VSConstants.LOGVIEWID_Code;

if (ErrorHandler.Failed(

openDoc.OpenDocumentViaProject(path, ref logicalView, out sp,

out hier, out itemid, out frame))

|| frame == null)

{

return;

}

object docData;

frame.GetProperty((int)__VSFPROPID.VSFPROPID_DocData, out docData);

The file will be opened in a new editor or will receive focus if it has already been opened earlier. Next, let's read a VsTextBuffer text buffer from the document we opened:

// Get the VsTextBuffer

VsTextBuffer buffer = docData as VsTextBuffer;

if (buffer == null)

{

IVsTextBufferProvider bufferProvider = docData as

IVsTextBufferProvider;

if (bufferProvider != null)

{

IVsTextLines lines;

ErrorHandler.ThrowOnFailure(bufferProvider.GetTextBuffer(

out lines));

buffer = lines as VsTextBuffer;

Debug.Assert(buffer != null,

"IVsTextLines does not implement IVsTextBuffer");

if (buffer == null)

{

return;

}

}

}

The IVsTextManager interface controls all of the active text buffers in the environment. For example, we can navigate a text document using the NavigateToLineAndColumn method of this manager on the buffer we've acquired earlier:

IVsTextManager mgr = Package.GetGlobalService(typeof(VsTextManagerClass))

as IVsTextManager;

mgr.NavigateToLineAndColumn(buffer, ref logicalView, line,

column, line, column);
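
For extension types that cannot use these package-only services, a comparable navigation can be sketched with the EnvDTE model alone. This is an alternative technique, not the one shown above. It assumes that the EnvDTE interop types are not embedded (so that EnvDTE.Constants is available) and that line numbers passed to GotoLine are counted from 1; the class and method names are hypothetical.

```csharp
using EnvDTE;

internal static class DteNavigation
{
    public static void OpenAndGoToLine(DTE dte, string path, int line)
    {
        // Opens the file in the code view, or reuses the window if it is already open.
        Window window = dte.ItemOperations.OpenFile(path, Constants.vsViewKindCode);
        window.Activate();

        // Document.Selection is typed as object; for text documents it is a TextSelection.
        TextSelection selection = window.Document.Selection as TextSelection;
        if (selection != null)
        {
            // Moves the caret to the requested line without selecting it.
            selection.GotoLine(line, false);
        }
    }
}
```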

Subscribing and handling events

Automation object events are represented by the DTE.Events property. This element references all of the common IDE events (such as CommandEvents, SolutionEvents), as well as the events of separate environment components (project types, editors, tools etc.), including the ones designed by third-party developers. To acquire a reference to such an automation object, the GetObject method can be utilized.

When subscribing to the DTE events, one should remember that this interface could still be unavailable at the moment the extension is being initialized. So it is always important to consider the sequence of your extension's initialization process if access to DTE.Events is required in the Initialize() method of your extension package. The correct handling of the initialization sequence will vary for different extension types, as described earlier.

Let's acquire a reference to an events object of the Visual C++ project model defined by the VCProjectEngineEvents interface and assign a handler for the removal of an element from the Solution Explorer tree:

VCProjectEngineEvents m_ProjectItemsEvents =

PVSStudio.DTE.Events.GetObject("VCProjectEngineEventsObject")

as VCProjectEngineEvents;

m_ProjectItemsEvents.ItemRemoved +=

new _dispVCProjectEngineEvents_ItemRemovedEventHandler(

m_ProjectItemsEvents_ItemRemoved);

MDI windows events

The Events.WindowEvents property can be utilized to handle regular events of an environment MDI window. This interface permits the assignment of a separate handler for a single window (defined through the EnvDTE.Window interface) or the assignment of a common handler for all of the environment's windows. The following example assigns a handler for the event of switching between IDE windows:

WindowEvents WE = PVSStudio.DTE.Events.WindowEvents;

WE.WindowActivated +=

new _dispWindowEvents_WindowActivatedEventHandler(

Package.WE_WindowActivated);

The next example assigns a handler for switching to the currently active MDI window through the WindowEvents indexer:

WindowEvents WE = m_dte.Events.WindowEvents[MyPackage.DTE.ActiveWindow];

WE.WindowActivated += new

_dispWindowEvents_WindowActivatedEventHandler(

MyPackage.WE_WindowActivated);

IDE commands events

The actual handling of the environment's commands and their extension through the automation model is covered in a separate article of this series. In this section we will examine the handling of the events related to these commands (and not the execution of the commands themselves). Assigning handlers to these events is possible through the Events.CommandEvents interface. The CommandEvents property, as in the case of MDI windows events, also permits the assignment of a handler either for all of the commands or for a single one through the indexer.

Let's examine the assignment of a handler for the event of a command execution being complete (i.e. when the command finishes its execution):

CommandEvents CEvents = DTE.Events.CommandEvents;

CEvents.AfterExecute += new

_dispCommandEvents_AfterExecuteEventHandler(C_AfterExecute);

But to assign such a handler to an individual command, it is necessary to identify this command in the first place. Each command of the environment is identified by a GUID:ID pair, and in the case of user-created commands these values are specified directly by the developer during their integration, for example through the VSCT table. Visual Studio possesses a special debug mode which allows identifying any of the environment's commands. To activate this mode, the following key must be added to the system registry (an example for Visual Studio 2010):

[HKEY_CURRENT_USER\Software\Microsoft\VisualStudio\10.0\General]

"EnableVSIPLogging"=dword:00000001

Now, after restarting the IDE, hovering your mouse over menu or toolbar elements while holding CTRL+SHIFT (though sometimes it will not work until you left-click the element) will display a dialog window containing all of the command's internal identifiers. We are interested in the values of Guid and CmdID. Let's examine the handling of events for the File.NewFile command:

CommandEvents CEvents = DTE.Events.CommandEvents[

"{5EFC7975-14BC-11CF-9B2B-00AA00573819}", 221];

CEvents.AfterExecute += new

_dispCommandEvents_AfterExecuteEventHandler(C_AfterExecute);

The handler obtained in this way will receive control only after the command execution is finished.

void C_AfterExecute(string Guid, int ID, object CustomIn,

object CustomOut)

{

...

}

This handler should not be confused with an immediate handler for the execution of the command itself, which can be assigned during this command's initialization (from an extension package, in case the command is user-created). Handling the IDE commands is described in a separate article that is entirely devoted to IDE commands.

In conclusion to this section it should be mentioned that in the process of developing our own VSPackage extension, we've encountered the necessity to store the references to interface objects containing our handler delegates (such as CommandEvents, WindowEvents etc.) in the top-level fields of our main Package subclass. The reason is that if such an object is held only in a function-level local variable, the handler can be lost at any time after the method returns. This behavior can most likely be attributed to the .NET garbage collector: once no managed reference remains to the object that carries our delegates, it may be collected, even though the DTE interface from which it was obtained definitely exists during the entire lifetime of our extension package.
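
The field-based storage described above can be sketched as follows. The class name and the empty handlers are hypothetical, and the property syntax follows the snippets earlier in this section (it assumes C# 4 or later).

```csharp
using EnvDTE;

internal sealed class IdeEventSubscriptions
{
    // Fields keep the event source objects reachable for as long as this
    // instance is alive, so the subscriptions below are not lost.
    private readonly CommandEvents _commandEvents;
    private readonly WindowEvents _windowEvents;

    public IdeEventSubscriptions(DTE dte)
    {
        Events events = dte.Events;
        _commandEvents = events.CommandEvents;
        _windowEvents = events.WindowEvents;

        _commandEvents.AfterExecute += OnAfterExecute;
        _windowEvents.WindowActivated += OnWindowActivated;
    }

    private void OnAfterExecute(string guid, int id, object customIn, object customOut)
    {
        // React to the finished command here.
    }

    private void OnWindowActivated(Window gotFocus, Window lostFocus)
    {
        // React to the window switch here.
    }
}
```

The instance itself must of course be kept alive as well, for example in a field of the Package subclass.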

Handling project and solution events (for VSPackage extensions)

Let's examine some of the interfaces from the Microsoft.VisualStudio.Shell.Interop namespace, more precisely the ones that permit us to handle the events related to Visual Studio projects and solutions. Although these interfaces are not part of the EnvDTE automation model, they can be implemented by the main class of a VSPackage extension (that is, the class inherited from the Package base class of the Managed Package Framework). That is why, if you are developing an extension of this type, these interfaces conveniently supplement the basic set of interfaces provided by the DTE object. By the way, this is another argument for creating a full-fledged VSPackage plugin using MPF.

The IVsSolutionEvents interface can be implemented by the class inherited from Package. It is available starting from Visual Studio 2005, as well as in applications based on the isolated and integrated shells. This interface permits you to track the loading, unloading, opening and closing of projects or even whole solutions in the development environment by implementing such of its methods as OnAfterCloseSolution, OnBeforeCloseProject and OnQueryCloseSolution. For example:

public int OnAfterLoadProject(IVsHierarchy pStubHierarchy, IVsHierarchy pRealHierarchy)

{

//your custom handler code

return VSConstants.S_OK;

}

As you can see, this method takes the IVsHierarchy object, which represents the project being loaded, as an input parameter. Managing such objects will be examined in another article devoted to the interaction with the Visual Studio project model.
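
As far as we know, implementing the interface is not sufficient by itself: the shell delivers solution events only to a sink that has been registered through IVsSolution.AdviseSolutionEvents. The following sketch wraps the registration in a small disposable helper; the helper class is hypothetical and not part of MPF.

```csharp
using System;
using Microsoft.VisualStudio;
using Microsoft.VisualStudio.Shell.Interop;

internal sealed class SolutionEventsSubscription : IDisposable
{
    private readonly IVsSolution _solution;
    private uint _cookie;

    public SolutionEventsSubscription(IVsSolution solution, IVsSolutionEvents sink)
    {
        _solution = solution;
        // Registers the sink; the cookie identifies the subscription later on.
        ErrorHandler.ThrowOnFailure(
            _solution.AdviseSolutionEvents(sink, out _cookie));
    }

    public void Dispose()
    {
        if (_cookie != 0)
        {
            _solution.UnadviseSolutionEvents(_cookie);
            _cookie = 0;
        }
    }
}
```

Inside a Package subclass that implements IVsSolutionEvents, the helper could be created in Initialize() from GetService(typeof(SVsSolution)) as IVsSolution, with this passed as the sink, and disposed when the package is disposed.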

The IVsSolutionLoadEvents interface, in a similar fashion to the interface described above, should be implemented by the Package subclass and is available in Visual Studio 2010 and later versions. This interface allows you to handle such interesting aspects as batch loading of project groups and background solution loading (the OnBeforeLoadProjectBatch and OnBeforeBackgroundSolutionLoadBegins methods), and also to intercept the end of this background loading operation (the OnAfterBackgroundSolutionLoadComplete method).

Such event handlers come in handy when your plug-in needs to execute some code immediately after its initialization and, at the same time, depends on the projects or solutions loaded inside the IDE. In such a case, executing this code without waiting for the solution loading to finish could lead either to incorrect (incomplete) results because of an incompletely formed project tree, or even to runtime exceptions.

While developing the PVS-Studio IDE plug-in, we've encountered another interesting aspect of VSPackage plug-in initialization. When one Package plug-in enters a waiting state (for instance, by displaying a dialog window to the user), further initialization of VSPackage extensions is suspended until the blocking plug-in returns. So, when handling loading and initialization inside the environment, one should always keep this possible scenario in mind as well.

And finally, I want to return one last time to the fact that for the interface methods described above to operate correctly, your main class must implement these interfaces:

class MyPackage: Package, IVsSolutionLoadEvents, IVsSolutionEvents

{

//Implementation of Package, IVsSolutionLoadEvents, IVsSolutionEvents

...

}

Supporting Visual Studio color schemes

If the extension you are developing will be integrated into the interface of the development environment, for instance by creating custom tool windows or document MDI windows (and the most convenient way for such an integration is a VSPackage extension), it is advisable that the coloring of your custom UI components match the common color scheme used by Visual Studio itself.

The importance of this task was elevated with the release of Visual Studio 2012, containing two hugely opposite color themes (Dark and Light) which the user could switch "on the fly" from the IDE options window.

The GetVSSysColorEx method of the Visual Studio Interop interface IVsUIShell2 can be utilized to obtain the environment's color settings. This interface is available to VSPackage plugins only.

IVsUIShell2 vsshell = this.GetService(typeof(SVsUIShell)) as IVsUIShell2;

By passing values of the __VSSYSCOLOREX and __VSSYSCOLOREX3 enums to the GetVSSysColorEx method, you can get the currently selected color for any of the Visual Studio UI elements. For example, let's obtain one of the colors from the context menu's background gradient:

uint Win32Color;

vsshell.GetVSSysColorEx((int)__VSSYSCOLOREX3.VSCOLOR_COMMANDBAR_MENU_BACKGROUND_GRADIENTBEGIN, out Win32Color);

Color BackgroundGradient1 = ColorTranslator.FromWin32((int)Win32Color);

Now we can use this Color object to "paint" our custom context menus. To determine the point in time at which the color theme of your components should be reapplied, you can, for example, utilize the events of the environment command responsible for opening the IDE's settings window (Tools -> Options). How to subscribe your handlers to such an event was described earlier in this article.
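
When several colors are needed, the lookup can be wrapped in a small helper that also checks the returned HRESULT. This is a minimal sketch; the helper name and the fallback parameter are our own assumptions and not part of the Visual Studio API.

```csharp
using System.Drawing;
using Microsoft.VisualStudio;
using Microsoft.VisualStudio.Shell.Interop;

internal static class ThemeColors
{
    // Returns the themed color for the given element, or the supplied
    // fallback if the shell reports a failure.
    public static Color Get(IVsUIShell2 shell, __VSSYSCOLOREX3 element, Color fallback)
    {
        uint win32Color;
        int hr = shell.GetVSSysColorEx((int)element, out win32Color);
        return ErrorHandler.Succeeded(hr)
            ? ColorTranslator.FromWin32((int)win32Color)
            : fallback;
    }
}
```

Re-reading all colors through such a helper after the Tools -> Options command has finished keeps custom controls in step with a theme switch.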

But if you are, for some reason, unable to utilize the IVsUIShell2 object (for instance, if you are developing a non-VSPackage extension), and you still need to support Visual Studio color themes, then it is possible to obtain color values for the environment's various UI components directly from the system registry. We will not cover this approach in the article, but here you can download a free and open-source tool designed for Visual Studio color theme editing. The tool is written in C# and it contains all the code required for reading and modifying Visual Studio 2012 color themes from managed code.

Interacting with COM interfaces from within a multithreaded application

Initially, the PVS-Studio extension package did not contain any specific thread-safety mechanisms for its interaction with Visual Studio APIs. At the same time, we attempted to confine the interactions with these APIs to a single background thread which was created and owned by our plug-in. This approach functioned flawlessly for quite a long period. However, several bug reports from our users, each one containing a similar COMException error, prompted us to examine this issue in more detail and to implement a thread-safety mechanism for our COM Interop.

Although the Visual Studio automation model is not thread-safe, it still provides a way of interacting with multi-threaded applications. The Visual Studio application is a COM (Component Object Model) server. For the task of handling calls from COM clients (in our case, this will be our extension package) to thread-unsafe servers, COM provides a mechanism known as the STA (Single-Threaded Apartment) model. In COM terms, an Apartment is a logical container inside a process in which objects and threads share the same thread access rules. An STA can hold only a single thread, but an unlimited number of objects. Calls from other threads to such thread-unsafe objects inside the STA are converted into messages and posted to a message queue. Messages are retrieved from the message queue and converted back into method calls one at a time by the thread running in the STA, so only a single thread can access these unsafe objects on the server.

Utilizing Apartment mechanism inside managed code

The .NET Framework does not utilize COM Apartment mechanics directly. Therefore, when a managed application calls a COM object in a COM interoperation scenario, the CLR (Common Language Runtime) creates and initializes an Apartment container. A managed thread is able to create and enter either an MTA (Multi-Threaded Apartment, a container that, contrary to an STA, can host several threads at the same time) or an STA, though a thread will be started as an MTA by default. The type of the Apartment can be specified before the thread is launched:

Thread t = new Thread(ThreadProc);

t.SetApartmentState(ApartmentState.STA);

...

t.Start();

As the Apartment type cannot be changed once a thread has been started, the STAThread attribute should be used to specify the main thread of a managed application as an STA:

[STAThread]

static void Main(string[] args)

{...}

Implementing message filter for COM interoperation errors in a managed environment

As an STA serializes all of the calls to the COM server, one of the calling clients could potentially be blocked or even rejected when the server is busy processing other calls or another thread is already inside the apartment container. In case the COM server rejects its client, .NET COM interop will generate a System.Runtime.InteropServices.COMException ("The message filter indicated that the application is busy").

When working on a Visual Studio module (Add-In, VSPackage) or a macro, execution control usually passes into the module from the environment's main STA UI thread (as in the case of handling events or environment state changes, etc.). Calling automation COM interfaces from this main IDE thread is safe. But if EnvDTE COM interfaces are to be called from other background threads (as in the case of long calculations that could potentially hang the IDE's interface if performed on the main UI thread), then it is advised to implement a mechanism for handling calls rejected by the server.

While working on PVS-Studio plug-in we've often encountered these kinds of COM exceptions in situations when other third-party extensions were active inside the IDE simultaneously with PVS-Studio plug-in. Heavy user interaction with the UI also was the usual cause for such issues. It is quite logical that these situations often resulted in simultaneous parallel calls to COM objects inside STA and consequently to the rejection of some of them.
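
One simple client-side way to tolerate such rejections is to catch the exception and repeat the call after a short pause. This is a different technique from the message filter, shown here only as a sketch: the class name, the attempt count and the delay are arbitrary assumptions. The two HRESULT constants are the standard RPC_E_CALL_REJECTED and RPC_E_SERVERCALL_RETRYLATER codes.

```csharp
using System;
using System.Runtime.InteropServices;
using System.Threading;

internal static class ComRetry
{
    // HRESULTs reported when a busy STA server rejects or defers a call.
    private const int RPC_E_CALL_REJECTED = unchecked((int)0x80010001);
    private const int RPC_E_SERVERCALL_RETRYLATER = unchecked((int)0x8001010A);

    public static T Invoke<T>(Func<T> call, int maxAttempts = 5, int delayMs = 150)
    {
        for (int attempt = 1; ; attempt++)
        {
            try
            {
                return call();
            }
            catch (COMException ex)
            {
                bool serverBusy = ex.ErrorCode == RPC_E_CALL_REJECTED
                    || ex.ErrorCode == RPC_E_SERVERCALL_RETRYLATER;

                // Unrelated COM errors and the final failed attempt are rethrown.
                if (!serverBusy || attempt >= maxAttempts)
                {
                    throw;
                }
                Thread.Sleep(delayMs);
            }
        }
    }
}
```

A background thread could then wrap a single automation call, for example ComRetry.Invoke(() => dte.Solution.FullName). The drawback is that every call site has to be wrapped explicitly.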

To selectively handle incoming and outgoing calls, COM provides the IMessageFilter interface. If it is implemented by the server, all of the calls are passed to the HandleIncomingCall method, and the client is informed about rejected calls through the RetryRejectedCall method. This in turn allows the rejected calls to be repeated, or at least allows the rejection to be presented correctly to the user (for example, by displaying a dialog with a 'server is busy' message). The following is an example of implementing rejected call handling for a managed application.

[ComImport()]

[Guid("00000016-0000-0000-C000-000000000046")]

[InterfaceType(ComInterfaceType.InterfaceIsIUnknown)]

public interface IMessageFilter

{

[PreserveSig]

int HandleInComingCall(

int dwCallType,

IntPtr hTaskCaller,

int dwTickCount,

IntPtr lpInterfaceInfo);

[PreserveSig]

int RetryRejectedCall(

IntPtr hTaskCallee,

int dwTickCount,

int dwRejectType);

[PreserveSig]

int MessagePending(

IntPtr hTaskCallee,

int dwTickCount,

int dwPendingType);

}

class MessageFilter : MarshalByRefObject, IDisposable, IMessageFilter
{
    [DllImport("ole32.dll")]
    [PreserveSig]
    private static extern int CoRegisterMessageFilter(
        IMessageFilter lpMessageFilter,
        out IMessageFilter lplpMessageFilter);

    private IMessageFilter oldFilter;

    private const int SERVERCALL_ISHANDLED = 0;
    private const int PENDINGMSG_WAITDEFPROCESS = 2;
    private const int SERVERCALL_RETRYLATER = 2;

    public MessageFilter()
    {
        // Register this object as the COM message filter of the current
        // thread; the previously registered filter is kept for Dispose().
        int hr = MessageFilter.CoRegisterMessageFilter(
            (IMessageFilter)this,
            out this.oldFilter);
        System.Diagnostics.Debug.Assert(hr >= 0,
            "Registering COM IMessageFilter failed!");
    }

    public void Dispose()
    {
        // Restore the message filter that was active before this one.
        IMessageFilter dummy;
        int hr = MessageFilter.CoRegisterMessageFilter(this.oldFilter,
            out dummy);
        System.Diagnostics.Debug.Assert(hr >= 0,
            "De-Registering COM IMessageFilter failed!");
        System.GC.SuppressFinalize(this);
    }

    int IMessageFilter.HandleInComingCall(int dwCallType,
        IntPtr threadIdCaller, int dwTickCount, IntPtr lpInterfaceInfo)
    {
        // Accept the incoming call (the OLE default behavior).
        return MessageFilter.SERVERCALL_ISHANDLED;
    }

    int IMessageFilter.RetryRejectedCall(IntPtr threadIDCallee,
        int dwTickCount, int dwRejectType)
    {
        if (dwRejectType == MessageFilter.SERVERCALL_RETRYLATER)
        {
            // A return value from 0 to 99 retries the call immediately;
            // 100 or more makes COM wait that many milliseconds first.
            return 150; // wait 150 ms, then retry
        }
        // Too busy (SERVERCALL_REJECTED): cancel the call. The caller gets
        // "Call was rejected by callee.
        // (Exception from HRESULT: 0x80010001 (RPC_E_CALL_REJECTED))"
        return -1;
    }

    int IMessageFilter.MessagePending(
        IntPtr threadIDCallee, int dwTickCount, int dwPendingType)
    {
        // Perform default processing.
        return MessageFilter.PENDINGMSG_WAITDEFPROCESS;
    }
}
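One practical detail is worth keeping in mind with the class above: COM message filters are registered per thread, and threads in a multithreaded apartment cannot have one. A thread created through System.Threading.Thread is not placed into an STA unless this is requested, so a background worker that intends to register the filter would have to be put into an STA before it starts. A minimal sketch of this, assuming a hypothetical helper named StaWorker that is not part of the original sample:

```csharp
using System;
using System.Threading;

static class StaWorker
{
    // Hypothetical helper: runs 'work' on a new background thread that is
    // explicitly placed into a single-threaded apartment before it starts.
    public static Thread Start(Action work)
    {
        if (work == null) throw new ArgumentNullException(nameof(work));

        var thread = new Thread(() => work());
        thread.IsBackground = true;
        // The apartment state can only be chosen before the thread starts.
        thread.SetApartmentState(ApartmentState.STA);
        thread.Start();
        return thread;
    }
}
```

The delegate passed to StaWorker.Start would then contain the code that creates the filter and calls the COM interfaces.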

Now we can utilize our MessageFilter while calling COM interfaces from a background thread:

using (new MessageFilter())
{
    //COM-interface dependent code
    ...
}

References

- MSDN. Referencing Automation Assemblies and the DTE2 Object.

- MSDN. Functional Automation Groups.

- MZ-Tools. HOWTO: Use correctly the OnConnection method of a Visual Studio add-in.

- The Code Project. Understanding The COM Single-Threaded Apartment.

- MZ-Tools. HOWTO: Add an event handler from a Visual Studio add-in.

- Dr. eX's Blog. Using EnableVSIPLogging to identify menus and commands with VS 2005 + SP1.

Visual Studio commands

This section deals with the creation, use and handling of Visual Studio commands in extension modules through the automation object model APIs and IDE services. The relations between IDE commands and environment UI elements, such as user menus and toolbars, will also be examined.

Introduction

Visual Studio commands provide a way to interact with the development environment directly through keyboard input. Almost all capabilities of the various dialog and tool windows, toolbars and user menus are represented by the environment's commands. In fact, main menu items and toolbar buttons are practically commands themselves. Commands are not UI elements per se, so a command may have no direct representation in the development environment's UI at all, but it can be represented by UI elements such as menu items and toolbar buttons.

The PVS-Studio IDE extension package integrates several subgroups of its commands into the Visual Studio main menu. These commands serve as one of the plug-in's main UI components (the other being its MDI tool window), allowing a user to control all aspects of static code analysis either from the environment's UI or by invoking the commands directly through the command line.

Using IDE commands

Any IDE command, regardless of its UI representation in the IDE (or the lack of it), can be executed directly through the Command or Immediate windows, as well as by starting devenv.exe with the '/command' argument.

The full name of a command is formed according to its affiliation with a functional group, as for example the commands of the 'File' main menu item. A command's full name can be looked up on the 'Keyboard, Environment' Options page. Also, the 'Tools -> Customize -> Commands' dialog allows inspecting all of the commands currently registered within the environment. This dialog sorts the commands by their functional groups and UI presentation types (menus, toolbars) and also allows modifying, adding or deleting them.

Commands can receive additional arguments, which should be separated from the command's name by a space. Let's examine a call to a standard system command of the main menu, 'File -> New -> File' for example, passing additional parameters to it through the Command Window:

>File.NewFile Mytext /t:"General\Text File" /e:"Source Code (text) Editor"

A command's syntax generally complies with the following rules:

- the command's name and arguments are separated by a space

- arguments containing spaces are wrapped in double quotes

- the caret (^) is used as an escape character

- one-character abbreviations can be combined; for example, /case (/c) and /word (/w) can be written as /cw

When using the '/command' command-line switch, the name of a command with all of its arguments should be wrapped in double quotes:

devenv.exe /command "MyGroup.MyCommandName arg1 arg2"
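When devenv.exe is launched from a script or another program, the quoted '/command' argument has to be assembled as a string. The following is a minimal sketch of such assembly, assuming a hypothetical helper named DevenvCommandLine. It only covers simple arguments: values containing spaces or quotes are rejected rather than escaped, because nested quoting is outside the scope of this illustration.

```csharp
using System;
using System.Linq;

static class DevenvCommandLine
{
    // Hypothetical helper: builds the '/command "Name arg1 arg2"' part of a
    // devenv.exe command line from a command name and simple arguments.
    public static string Build(string commandName, params string[] args)
    {
        if (string.IsNullOrWhiteSpace(commandName))
            throw new ArgumentException("Command name is required.", nameof(commandName));
        args = args ?? new string[0];

        // Arguments with whitespace or quotes are rejected, not escaped.
        foreach (string arg in args)
        {
            if (string.IsNullOrEmpty(arg) ||
                arg.Any(c => char.IsWhiteSpace(c) || c == '"'))
                throw new ArgumentException("Unsupported argument: " + arg, nameof(args));
        }

        // The command name and its arguments are separated by single spaces,
        // and the whole sequence is wrapped in one pair of double quotes.
        string[] parts = new[] { commandName }.Concat(args).ToArray();
        return "/command \"" + string.Join(" ", parts) + "\"";
    }
}
```

For the example above, DevenvCommandLine.Build("MyGroup.MyCommandName", "arg1", "arg2") produces the same '/command' argument that was typed by hand.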

For the sake of convenience, a command could be associated with an alias:

>alias MyAlias File.NewFile MyFile

Commands integrated into the IDE by the PVS-Studio extension can be utilized through the /command switch as well. For example, this mode can be used to integrate our static analysis into an automated build process. Our analyzer itself (PVS-Studio.exe) is a native command-line application which operates much like a compiler, i.e. it takes a path to a file containing source code along with its compilation arguments and then outputs the analysis results to the stdout/stderr streams. It's quite obvious that the analyzer can easily be integrated directly into the build system (for instance, into a system based on MSBuild, NMake or even GNU Make) at the same level where the C/C++ compiler is called. Such integration provides us, by its very definition, with a complete enumeration of all the source files being built, together with all of their compilation parameters. In turn, this allows a compiler call to be substituted (or supplemented) by a call to the analyzer. Although the described scenario is fully supported by the PVS-Studio.exe analyzer, it still requires a complete understanding of the build system's internals as well as an opportunity to modify the system in the first place, which can be problematic or even impossible at times.

Therefore, the integration of the analyzer into the build process can be performed in a more convenient way, on a higher level (i.e. at the level of a Continuous Integration server), by utilizing Visual Studio extension commands through the /command switch, for example, by using the PVS-Studio.CheckSolution command to perform analysis of an MSVS solution. Of course, such a use case is only possible when building native Visual C++ project types (vcproj/vcxproj).

When Visual Studio is started from the command line, the command passed through the /command switch is executed immediately after the environment has fully loaded. In this case, the IDE is started as a regular GUI application, without redirecting its standard I/O streams to the console that was used to launch it. It should be noted that, in general, Visual Studio is a UI-based development environment and is not intended for command-line operation. For building inside build automation systems it is recommended to employ the Microsoft MSBuild utility, as this tool supports all of the native Visual Studio project types.

Caution should be applied when using the Visual Studio /command switch together with the non-interactive desktop mode (for example, when calling the IDE from a Windows service). We encountered several interesting issues ourselves when we were evaluating the possibility of integrating PVS-Studio static analysis into the Microsoft Team Foundation build process, as Team Foundation operates as a Windows service by default. At that moment, our plug-in had not been tested in non-interactive desktop sessions and handled its child windows and dialogs incorrectly, which in turn led to exceptions and crashes. Visual Studio itself experienced none of these issues, or almost none, to be more precise. The thing is, Visual Studio displays a particular dialog for every user when it is started for the first time after installation, and this dialog prompts the user to select a default UI configuration. And it was this dialog that Visual Studio displayed for the LocalSystem account, the account which actually owns the Team Foundation service. It turns out that the same dialog is 'displayed' even in the non-interactive desktop mode, and it subsequently blocks the execution of the /command switch. As this account doesn't have an interactive desktop, the dialog also cannot be closed in the normal way, by manually starting the IDE under that account. But, in the end, we were able to close the dialog manually by launching Visual Studio for the LocalSystem account in interactive mode through the psexec tool from the PSTools utilities.
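Since a dialog that nobody can see may block devenv.exe indefinitely, a build script that launches the IDE in this way may want to guard the launch with a timeout. The following is a minimal sketch of such a guard, assuming a hypothetical helper named GuardedLaunch; the timeout value and the exact devenv.exe arguments are left to the caller.

```csharp
using System;
using System.Diagnostics;

static class GuardedLaunch
{
    // Hypothetical helper: starts a process and waits for it up to 'timeout'.
    // Returns the exit code, or null if the process had to be killed because
    // it did not finish in time (for example, while blocked by a dialog).
    public static int? Run(ProcessStartInfo startInfo, TimeSpan timeout)
    {
        if (startInfo == null) throw new ArgumentNullException(nameof(startInfo));

        using (Process process = Process.Start(startInfo))
        {
            if (process == null)
                throw new InvalidOperationException("The process could not be started.");

            if (process.WaitForExit((int)timeout.TotalMilliseconds))
                return process.ExitCode;

            try
            {
                process.Kill(); // give up on the blocked instance
            }
            catch (InvalidOperationException)
            {
                // The process exited between the timeout and the Kill call.
            }
            process.WaitForExit(); // let the OS finish tearing it down
            return null;
        }
    }
}
```

A null result then tells the build script that the IDE never finished, which is a more useful signal than a build agent that hangs silently.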

Creating and handling commands in VSPackage. Vsct files.

A VSPackage extension uses a Visual Studio command table (*.vsct) file for creating and managing the commands that it integrates into the IDE. Command tables are text files in XML format which are compiled by the VSCT compiler into binary CTO (Command Table Output) files. CTO files are then included as resources into the final builds of IDE extension packages. With the help of VSCT, commands can be associated with menus or toolbar buttons. Support for VSCT is available starting from Visual Studio 2005. Earlier IDE versions utilized CTC (Command Table Compiler) files for handling their commands, but they will not be covered in this article.

In a VSCT file each command is assigned a unique ID (CommandID), a name, a group and a keyboard shortcut, while its representation in the interface (if any) is specified by special flags.
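On the managed side, a command is commonly identified by the same pair of values: the GUID of its command set and a numeric ID, wrapped into a System.ComponentModel.Design.CommandID object. The following is a minimal sketch of how such identifiers could be mirrored in C#. The class and member names are hypothetical, while the GUID and ID values are taken from the 'Symbols' section of the sample VSCT file shown further on in this article.

```csharp
using System;
using System.ComponentModel.Design;

static class MyPackageCommands
{
    // Values copied from the 'Symbols' section of the sample VSCT file
    // (guidMyPackageCmdSet and cmdidMyCommand1).
    public static readonly Guid CmdSetGuid =
        new Guid("{CC8B1E36-FE6B-48C1-B9A9-2CC0EAB4E71F}");
    public const int MyCommand1Id = 0x0101;

    // The (GUID, numeric ID) pair that identifies the command.
    public static readonly CommandID MyCommand1 =
        new CommandID(CmdSetGuid, MyCommand1Id);
}
```

Keeping these values in one place makes it easier to notice when the C# side and the VSCT file drift apart, since a mismatch typically shows up only at run time as a command without a handler.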

Let's examine the basic structure of a VSCT file. The root element of the file is the 'CommandTable' node, which contains the 'Commands' sub-node defining all of the user's commands, groups, menu items, toolbars etc. The value of the 'package' attribute of the 'Commands' node must correspond to the ID of your extension. The 'Symbols' sub-node should contain definitions for all identifiers used throughout this VSCT file. The 'KeyBindings' sub-node contains the default keyboard shortcuts for the commands.

<CommandTable xmlns="http://schemas.microsoft.com/VisualStudio/2005-10-18/CommandTable">
  <Extern href="stdidcmd.h"/>
  <Extern href="vsshlids.h"/>
  <Commands>
    <Groups>
    ...
    </Groups>
    <Bitmaps>
    ...
    </Bitmaps>
  </Commands>
  <Commands package="guidMyPackage">
    <Menus>
    ...
    </Menus>
    <Buttons>
    ...
    </Buttons>
  </Commands>
  <KeyBindings>
    <KeyBinding guid="guidMyPackage" id="cmdidMyCommand1"
                editor="guidVSStd97" key1="221" mod1="Alt" />
  </KeyBindings>
  <Symbols>
    <GuidSymbol name="guidMyPackage" value="{B837A59E-5BF0-4190-B8FC-FDC35BE5C342}" />
    <GuidSymbol name="guidMyPackageCmdSet" value="{CC8B1E36-FE6B-48C1-B9A9-2CC0EAB4E71F}">
      <IDSymbol name="cmdidMyCommand1" value="0x0101" />
    </GuidSymbol>
  </Symbols>
</CommandTable>

The 'Buttons' node defines the commands themselves by specifying their UI representation style and binding them to various command groups.

<Button guid="guidMyPackageCmdSet" id="cmdidMyCommand1"
        priority="0x0102" type="Button">
  <Parent guid="guidMyPackageCmdSet" id="MyTopLevelMenuGroup" />
  <Icon guid="guidMyPackageCmdSet" id="bmpMyCommand1" />
  <CommandFlag>Pict</CommandFlag>
  <CommandFlag>TextOnly</CommandFlag>
  <CommandFlag>IconAndText</CommandFlag>
  <CommandFlag>DefaultDisabled</CommandFlag>
  <Strings>
    <ButtonText>My Command 1</ButtonText>
  </Strings>
</Button>

The 'Menus' node defines the structure of UI elements (such as menus and toolbars), also binding them to command groups in the 'Groups' node. A group of commands bound with a 'Menu' element will be displayed by the UI as a menu or a toolbar.