This article will be about a new way to approach narrative design in games - the 4 Layers Approach. It is based on a GDC talk I gave in March this year. The approach is primarily meant to suggest a workflow that focuses on the story and makes sure the narrative and gameplay are connected. The end goal is to create games that provide a better interactive narrative.

Narrative Basics

First off, "narrative" will need to be defined. At its most fundamental level, the narrative is what happens as you play the game over a longer period. It is basically the totality of the experience; something that happens when all elements are taken together: gameplay, dialog, notes, setting, graphics etc.; the player's subjective journey through the game. I know this clashes with other definitions that refer to narrative as a separate aspect of the game, but I think this is the one that's most helpful when discussing game design. It also fits with job titles such as "narrative designer", who is a person that doesn't just deal with writing or cut-scenes, but who works at a much higher level.

Quick note: A deep dive into various story-related terminology can be found here.

Let's compare this to the other basic elements of a game. Looking at a game second-by-second, you see the core mechanics. Moving up to look at it using a time-frame of minutes, you see tactics and problem-solving (which also includes things like puzzles). Higher still, often on the scale of hours, you see the narrative. Almost all game design is focused on the two lower levels, mechanics and tactics, and narrative mostly comes out as a sort of byproduct. Designing the narrative becomes a sort of patchwork process, where you try and create a coherent sense of storytelling from the small gaps left behind by the layers below. For instance, in games based on combat mechanics the narrative usually just acts as a form of set-up for encounters and is heavily constrained by how the fights work and so forth.

So a crucial step towards better storytelling in games is to give at least as much focus to the narrative layer as to the other two layers, mechanics and tactics. It is important to not devote all the focus to the story though; having a symbiosis between all of the layers is a core element of what makes video games special. If we want a proper interactive story, we need to preserve this.

Simply saying that we want to put more focus on the narrative level is still pretty vague; it doesn't tell us anything useful. So I'll make it a bit more concrete by listing five required cornerstones of an interactive story. This is where we get into highly subjective territory, but that can't be helped - there's a wide span of opinions on how narrative and gameplay should work together (some would even object to having focus on the narrative layer at all!). But in order to move on we need to have something concrete; if we just continue to talk in vague terms of "improving storytelling", any suggestion can be shot down on the basis of some personal preference. Doing it like that will just get us stuck in boring discussions and it becomes much harder to set a proper goal.

Core Elements of Storytelling

The following elements shouldn't prove too controversial and I think most people will agree with them. But it still feels important to acknowledge that this is an opinion and not something I regard as an eternal truth. That said, here are my core requirements for a game with focus on narrative.

1) The focus is on storytelling.

This is a trivial requirement, but still way too uncommon. Basically, the main goal of the game should be for the player to experience a specific story.

2) The bulk of the gameplay time is spent playing.

We want interactive storytelling, so players should play, not read notes, watch cutscenes, etc. These things are by no means forbidden, but they should not make up the bulk of the experience.

3) The interactions make narrative sense.

This means actions that:

- Move the story forward.

- Help the player understand their role.

- Are coherent with the narrative.

- Are not just there as padding.

4) There's no repetition.

Repetition leads to us noticing patterns, and noticing patterns in a game system is not far away from wanting to optimize them. And once you start thinking of the game in terms of "choices that give me the best systemic outcome", it takes a lot of focus away from the game's narrative aspects.

5) There are no major progression blocks.

There is no inherent problem with challenge, but if the goal here is to tell a story, then the player should not spend days pondering a puzzle or trying to overcome a skill-based challenge. Just as with repetition this takes the focus away from the narrative.

There is a lot more that can be said about these requirements, all of which you can find here.

Good Examples To Strive For

Now for the crucial follow up question: what games satisfy these requirements?

![Attached Image: Heavy-Rain.jpg]()

Does Heavy Rain manage this? Nope, there's too little gameplay (requirement #2).

![Attached Image: Rapture_Bioshock.png]()

Bioshock, with all the environmental storytelling? Nope, too much shooting (requirement #4).

These two games symbolize the basic issues almost all video game storytelling has: either you do not play enough, or most of what the gameplay does is not related to the narrative.

![Attached Image: ThirtFlights01.jpg]()

There are a few good examples, though. Thirty Flights of Loving is a game that I think lives up to the requirements. But the problem here is that the storyline is extremely fuzzy and disjointed. The game is a series of vaguely connected scenes, and is lacking a certain pure storytelling quality.

![Attached Image: Brothers.jpg]()

![Attached Image: TheLastOfUs.jpg]()

We come much closer to finding something that lives up to the requirements by looking at specific sections in games. Two good ones are the giraffe scene in The Last of Us and the end sequence in Brothers: A Tale of Two Sons. Both of these sections have this strong sense of being inside a narrative and fulfill my requirements. You are definitely playing a story here. But these are just small scenes in a much larger game, and that larger game breaks most of the core elements that I have gone over. So what we really want is to have a full game filled with these sorts of sections. That would be perfect!

However, that isn't possible. These scenes depend on tons of previous game content and are extremely hard to set up. You cannot simply strive to fill the game with stuff like this; it's just not doable. In order to get a game that consistently evokes this feeling, we have to approach it from a different direction.

This leads us to the main bulk of this article, where I'll talk about a way to achieve this. This is an approach named “4 Layers” and the basic idea is to not attack the problem directly, but reduce it into steps and thereby be able to get proper interactive storytelling into just about any section of the game.

The 4 Layers Approach

The framework is something that's been developed between myself and Adrian Chmielarz, the man responsible for Painkiller, Bulletstorm, etc. At Frictional Games we are using this as a cornerstone for our new game SOMA, and Adrian's new company, The Astronauts, is using it for their upcoming The Vanishing of Ethan Carter.

![Attached Image: soma_title.jpg]()

![Attached Image: TheVanishingOfEthanCarter_logo_black.jpg]()

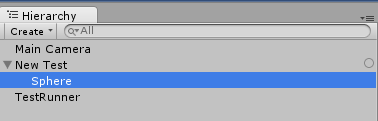

The way this approach works is that you divide the design process into four big steps. You start with the gameplay and then work your way through adding more and more layers of storytelling. The additional layers are Narrative Goal, Narrative Background and finally Mental Modeling.

Before I get more in-depth it is important to note that in order to use this approach correctly, the game must be broken down into scenes. Each scene could be a puzzle, an enemy encounter, and so on. Normally, gameplay refers to how the game plays out as a whole, but for this framework, we must split it up into sections. This is connected with the above requirement of not having repetition, and usually means that there needs to be a lot of logic and gameplay coded into the world. I think that this presents a crucial piece of the puzzle for having better storytelling: to drop the need for an overarching play loop and instead make the gameplay fit each specific scene of the game.

So instead of having the gameplay describe the player's overall experience of the game, the narrative will provide this structure. Exactly how this is done will become more apparent as we go through the different layers.

Layer 1: Gameplay

First we need to start with the basic gameplay and it's crucial that the narrative aspects are kept in mind from the get-go. If the gameplay doesn't fit with the story, then problems will start to accrue and it'll make the later layers much harder to achieve and reduce the final quality. As a first step for ensuring this, there are four basic rules that must be followed:

1) Coherency

The gameplay must fit with the game's world, mood and characters. There should be no need for double-thinking when performing an action; it should fit with what has been laid out by the narrative. The player should be able to think about the actions made to get a deeper understanding of the game's story. What the player does must also make some sort of sense and not just be a sequence of random or nonsensical interactions. The infamous "mustache and cat"-puzzle from Gabriel Knight 3 is a shining example of what not to do.

2) Streamlining

It is important that the gameplay is not too convoluted and doesn't have too many steps. This is partly to minimize the chance of the player getting stuck. When the player is stuck for longer periods they focus on the mechanics or tactics for gameplay. Also, we want to have situations where the player can plan ahead and feel like they understand the world. If the steps required for any moment are too complicated, it's very easy to lose immersion and to lose track of the goal. This happens very often in classic adventure games, where the solution to something straightforward requires a massive number of steps to accomplish.

3) A Sense of Accomplishment

This sort of thing is normally built into the core gameplay, but might not be as straightforward in a narrative-focused game. It is really easy to fall into the trap of doing “press button to progress” type of gameplay when the main goal is to tell a story. But in order to make the player feel agency, there must be some sense of achievement. The challenge needed to evoke this sense of accomplishment does not have to be skill or puzzle-based, though. Here are a few other things that could be used instead: memory tasks, out-of-the-box thinking, grind, endurance tests, difficult story choices, sequence breaks, understanding of the plot, exploration, navigation, maze escape, overcoming fear and probably tons more.

4) Action Confirmation

When the player does something in the game, they must understand what it is that they are doing and why they are doing it. For basic mechanics this comes naturally, "I jumped over the hole to avoid falling down", "I shot the guy so he would not shoot me back" and so forth. But when taken to the level of a scene it is not always as straightforward. For instance, the player might accidentally activate some machinery without being aware that this was going to happen beforehand and afterwards not knowing what it accomplished. If this occurs too frequently, the player starts optimizing their thinking and stops reasoning about their actions. This then leads to an experience where the player feels as if they are just pulled along.

Getting all of these four rules into a gameplay scene and also making sure it is engaging is no small feat. Most games that want to focus on storytelling stop here. But in the 4-Layer approach this is just the first step.

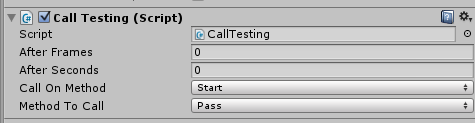

![Attached Image: example_iteration1.jpg]()

Before moving on to the next layer of the framework, I will give a simple gameplay example. Say the player encounters a locked door blocking their path. Some earlier information has hinted that a key is hidden nearby, and now they need to search the room to find it. Once they find the key they can unlock the door and progress. Very simple, and not very exciting, but it fulfills the rules set up above.

- A locked door and hidden key should not conflict with the story.

- Given that the search space for the key is rather small, it is not likely the player will get stuck.

- It requires enough from the player to give a sense of accomplishment.

- Set up correctly, it should be very obvious to the player that the door needs to be opened and the key is the item used to accomplish this.

I will come back later and expand upon this with the other layers to give you a better feel for how the approach works.
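One way to keep these four rules visible during design is to record them per scene as a simple checklist. What follows is only a minimal sketch of that idea, not something taken from the talk: the `GameplayCheck` class and its field names are hypothetical, and the judgments it stores are still made by the designer, not computed.

```python
from __future__ import annotations

from dataclasses import dataclass


# Hypothetical checklist for the four Layer 1 rules. The class and field
# names are illustrative only; they are not part of the framework itself.
@dataclass
class GameplayCheck:
    coherent: bool          # fits the world, mood and characters
    streamlined: bool       # few steps, low risk of getting stuck
    accomplishment: bool    # asks enough of the player to feel earned
    action_confirmed: bool  # player knows what they did and why

    def failed_rules(self) -> list[str]:
        """Return the names of the rules this scene does not yet satisfy."""
        return [name for name, ok in vars(self).items() if not ok]


# The locked-door scene, as judged in the bullet points above.
locked_door = GameplayCheck(
    coherent=True,
    streamlined=True,
    accomplishment=True,
    action_confirmed=True,
)
print(locked_door.failed_rules())
```

A scene where the player trips some machinery by accident would get `action_confirmed=False`, and `failed_rules()` would then name that rule as the thing to fix before moving on to the next layer.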

Layer 2: Narrative Goal

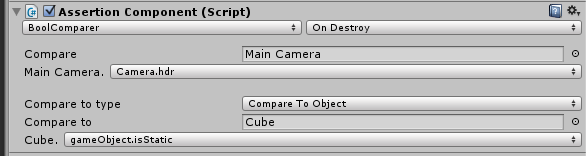

So, next step: the narrative goal. Normally the reason for the player to get through some gameplay segment is just pure progress. There is often some overarching story goal like “kill the evil wizard”, but that is quite far into the future, so when the player encounters an obstacle they try to overcome it because that is what the game demands of them. It is often very clear that they are in “gamer mode” at this point and until the obstacle is cleared. This is useful in order for the player to know what to do, but it is very problematic for the narrative - it removes the experience of being inside a story. The player stops seeing their actions as part of a story and instead sees them as steps towards an abstract gameplay goal. What can often happen is that the player starts thinking stuff like "Now I just need to get this section out of the way so I can get on with the story", a forced mental division between narrative and gameplay, which is diametrically opposed to the fusion we're striving for.

![Attached Image: layer3.jpg]()

The way to fix this is to give the player some sort of short-term narrative goal, one that is directly connected to the current gameplay. The aim is to keep the player in narrative mode so they do not brush the story aside for some puzzling or shooting action. When the player is engaged in the gameplay at hand we want them focused on and motivated by this narrative goal. This makes it harder for the player to separate the two, as the narrative goal is always in sight. It is no longer about "doing stuff to get the story going", instead it is about "doing stuff because of the story". The distinction might not seem that big, but it makes all the difference. Keep in mind this is at a local level, for a scene that might just last a few minutes or less; the narrative goal is constantly visible to the player and a steady reminder of why they are going through with the actions.

A nice side-effect of this is that since the goal is narrative in nature, it becomes a reward for completing the gameplay section. The player is motivated to go through actions because of story and is then promptly rewarded with a fresh piece of the story. In all, this binds the gameplay much more tightly to the storytelling. An additional side-effect is that it can keep the player on the right track. The player might not be sure what to do next, but if the narrative goal is connected with the solution to the obstacle, then the player will progress simply by being interested in the story.

Here are three different types of narrative goals that could be used:

Mystery

The most obvious and simple is mystery; that there is something unknown you want to find out about. It's pretty easy to have environmental assets that constantly remind the player of this - this sort of goal is also pretty easy to fit into a gameplay scene.

Uncomfortable Environment

Another way is to give the scene a narrative reason for the player not wanting to stick around. The most trivial example of this would be a dark and scary environment; the player is scared and wants to leave. It could also be that the situation is awkward or emotional in a way that the player can't cope with and wants to escape. For example, it could be a depressing scene, like a funeral reception, that makes the player sad. It's important, though, not to get caught up in game mechanics; it must be a story reason that makes the player uncomfortable, not some mechanic (spikes popping up here and there, etc.). We want the focus to be on the narrative, not the underlying systems.

Character Conflict

Character-based conflict can also be used as a narrative goal. The Walking Dead is full of this; what are really just fairly simplistic activities become engaging because of story reasons. A great example is the food distribution "puzzle" where the player is instructed to determine how the remaining stash of food is divided. What makes it interesting is that the player cannot come up with a division that doesn't upset at least one of the characters. Any gameplay that results in the player changing the social dynamics can act as a powerful narrative goal.

These are just three examples of what could be done and there are bound to be a ton more. I think you could use basic writing techniques to come up with more.

![Attached Image: example_iteration2.jpg]()

Now let's update the example from before and add a narrative goal. To keep it simple let's go with some mystery. Say there's a man on the other side of the door trying to get in. He wants to retrieve something that's in the room that the player is currently in, and is asking them to open the door. Now all of a sudden there's a short-term goal for wanting the door open, and it's no longer just due to wanting to progress. “Who is this man?”, “What object is it that he's after?” You want to get these questions answered and that adds narrative motivation.

Note: The 4-Layers framework is not a linear method, you'll have to constantly skip back and forth between the layers. In this case, you need to check the first layer, gameplay, and see if there's anything that could be updated in order to improve the narrative goal. You might need to change where the key is hidden, or even exchange the key for something else.

Layer 3: Narrative Background

With the addition of a narrative goal, the scene is now framed in a much more story-like manner. But there is still an issue: the actions the player does are quite gameplay-focused. In the above example, the player searches the environment simply in order to find a certain item; there is no proper sense of story-telling going on as the player goes through these actions. That is what this layer is all about fixing.

![Attached Image: drench_actions_in_story.jpg]()

The basic idea is that the actions the player is supposed to be doing are immersed in story substance. So when the player is interacting, it is not just pure gameplay, they are constantly being fed story at the same time. When the narrative goal was added, the player's thinking was changed from "doing stuff to get the story going" to "doing stuff because of the story". With narrative background in place we change it to "doing stuff in order to make the story appear". Narrative-wise, the player's actions are no longer just a means to an end, they are what causes the story to emerge as you play. Or at least that's how we want it to appear to the player. By having the gameplay actions and the narrative beats coincide, we make it hard for the player to distinguish between the two. The goal is for this to lead to a sense of always being inside a story.

Here are a few examples of the kind of background that can be used:

Story Fragments

This means having narrative clues scattered through the environment which are stumbled upon while playing. An important note is that these shouldn't just be the standard audio logs and diary entries. While it can consist of those sorts of elements, it's important that they never mean a large interruption in the gameplay, and that they're found as the player goes through with the actions needed to overcome the obstacle. The act of collecting clues should not feel like a separate activity, but come as a part of the scene's main gameplay.

Complementary Dialog

There can also be dialog going on at the same time, giving context to the player's actions. Bastion uses this to great effect. All of the standard gameplay elements like enemies, power-ups and breakable crates are given a place in the world and a sense of importance. It also gives a great sense of variation to similar activities, as their narrative significance can be quite diverse. Dear Esther is another good example of this at work. Here the simple act of walking is given the sense of being vital to the story.

Emotionally Significant Assets

If the items involved in the gameplay have some sort of emotional value or a strong connection to the story, the player is much less likely to see them as abstract tools. Instead of picking up "item needed to progress", the player finds something that can be a story revelation in itself. There is a huge difference between finding "standard knife A" and "the murder weapon from a hideous crime".

These three are of course not the only methods at your disposal to create narrative background. Just like with the previous layer, there are bound to be tons of other things too.

![Attached Image: example_iteration3.jpg]()

To make things a bit more concrete, let's go back to the example scene and add some narrative background. First off, let's add story fragments in the form of clues. These can give hints to the player about who the man behind the door is: pictures, paintings, documents and so on. So while the player is searching for the key they'll also be fed hints about the story. Secondly, let's have the man comment on the player's actions and give hints, making him reveal his character a bit. Third, we could say that it was the man who hid the key and that he did so for some very important reason. That way the key has some narrative significance and is not just an abstract tool. Getting all of these things in might require us to change the puzzle a bit, but as said before, this is not a linear design approach. What you learn from the later layers must be fed back into the previous ones.

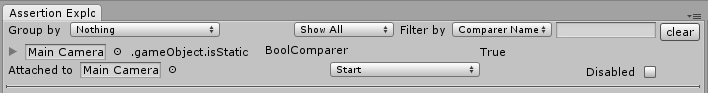

Layer 4: Mental Modeling

Now comes the 4th, and final, layer - Mental Modeling. The goal with this layer is to change the way the player perceives and thinks about the game. We want to tap into how the player evaluates their experience.

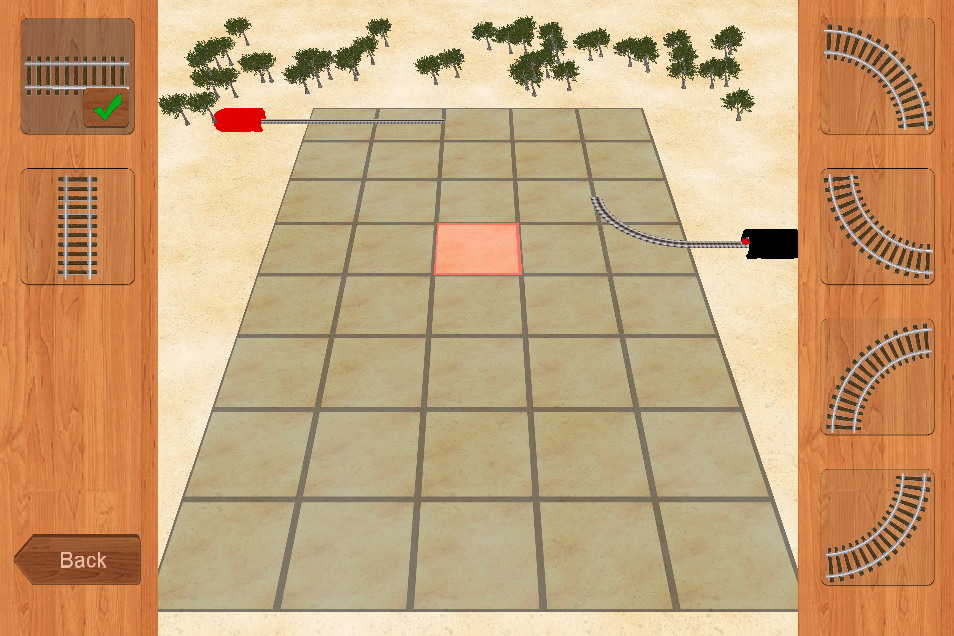

![Attached Image: screen_not_equal_minds_eye.jpg]()

The first and crucial fact you must be aware of is that what is actually on the screen when the player is playing is not what ends up in their head. Nor does the player rely directly on any abstract system to make choices. The player's brain builds up a mental model of the game, a sort of virtual representation based upon what they see, hear and do. It's this model that's used when you choose what to do next.

This might seem a bit bizarre and counterintuitive but it really isn't. Just consider how a player doesn't rely on direct feedback from the underlying systems in order to traverse a space. They don't bump into every wall in order to check where they can go. Instead they use their knowledge of the real world, intuition of the systems, and visual and auditory clues to plan a path. And once that plan is finished (which for simple tasks like walking takes a fraction of a second), the plan is executed. Stated like this it sounds really trivial, but if you think about it a bit more, it's actually quite profound.

The underlying gameplay systems only really become evident to the player if they do something wrong or when the systems directly contradict their mental model. Otherwise they play and plan largely based on an imaginary game. Obviously the underlying system is what keeps it all working, and the feedback between the systems and the player's input is crucial for anything to happen. But the systems are never directly queried to lay out the boundaries and options available to the player. In fact, keeping the player's sense of immersion is often directly related to keeping the systems hidden. The player is not a computer and doesn't make decisions based on tables of abstract data. Built-in brain functions handle all that, and the smoothest sense of play comes about when the player is relying on gut feeling and intuition. Constantly having to probe a system to figure out its exact make-up is almost never a pleasing experience (unless that is what the game is all about, as is the case with some puzzle games).

Side note: I need to note that the player's intuition is updated the more that a system is revealed to them. If the player first assumes some enemies can jump but later finds out that they can't, their mental model is updated accordingly. This can have a devastating effect on a narrative-focused game, making life-like characters turn into dumb automatons and so on. For more information on how all that works, check this out.

![Attached Image: RainbowSix.jpg]()

Brian Upton has a great example of mental modeling in action based on his work with the original 1998 Rainbow Six. In Rainbow Six the player dies from a single shot and has to be very careful how they progress. Since they are constantly on the lookout for hostiles, even a very simplistic world can have a lot of gameplay, and that's without the player doing much. For instance, if they are about to enter a new room they stop and try to figure out the best approach. They need to consider if someone might be hiding out of sight and so forth. Based on their mental model of the game they will simulate many different approaches in their mind, trying to figure out which will work best. Even an empty hallway can conjure up these sorts of thought processes. The game is filled with possibilities that the player needs to be aware of, and the only way to do this is to use their intuition on how the game's virtual world and its inhabitants work. These constant mental gymnastics are a crucial piece of the experience.

The important point here is that most of what exists in the player's mind has no systemic counterpart. The player might imagine a guard hiding behind a corner, thinking of how he might be looking around. But in reality there is no guard behind the corner. Thus, a great deal of the playing time is spent just imagining stuff. This might seem like a cop-out, and not like proper gameplay, but that's not the case at all. It's sort of like chess, where most of the gameplay comes from you thinking about a situation, and the actual interaction only makes up a minor portion of the playing time. Making mental models is very much a valid form of play.

The takeaway here is that there is a lot of gameplay which doesn't translate into an input-output loop within the game's systems. And more importantly, this sort of mental model-based gameplay comes from the player's high level interpretation of the game's systems, graphics, sound and so forth. This means that it basically ties directly into narrative. The mental model and the narrative lie on the same level, they are the accumulation of all the lower level stuff. And if we can get them to work together, then what we have is the purest form of playable story where all your gameplay choices are made inside the narrative space. This is clearly something worth striving for.

What's also interesting is that these sorts of thought processes share the imaginary nature of books and film. The player doesn't have to be 100% correct with all assumptions, just like you don't have to have a perfect mental recreation of the locale a novel takes place in. If the player imagines a non-existent guard being around the corner then that is OK. They might approach slowly, trying to pick up signs of the guard's whereabouts, and not finding a guard behind the corner doesn't have to mean the fantasy is broken. The player can now imagine that the guard soundlessly snuck away, or something similar. When interacting directly with systems, like shooting bullets at a clearly visible enemy, the player's assumptions can't stray very far from reality. If the player imagines the bullets hitting when they in fact don't, that fantasy will quickly be broken.

Quick note: In case you haven't already noticed, this layer isn't just confined to a single scene. It's something that overlaps a lot of the game. While you could potentially have mental models that only last for short durations, it is more effective when it spans a greater part of the game.

Many narrative games already have some degree of mental modeling, but in the worst way possible: collectables. Say you have this story about a creepy forest and a protagonist trying to figure out what is real. And then picture the mental model constantly saying: “find all the thermoses, you know there are some around”. This will obviously make the game lose a lot of its potential. Be wary of this kind of issue.

Instead you want to have a mental model that fits with the rest of the narrative. What follows are a few suggestions:

Danger

There is something lurking about that constitutes a threat for the player. It's important that this threat is not some common occurrence that relies on twitch reflexes or similar, as it's just a normal gameplay element then. Instead it must be something hiding, only making brief appearances. The idea is for the player to constantly scan the environment for clues that the danger is near and present.

Goal-focused Mystery

This can mean that the player has the objective of solving a crime or similar. What we are after is that the player should see the game world as a place where important clues are to be discovered. So whenever the player finds a new location they should instantly start thinking about what new things it can teach them about the mystery.

Social Pressures

The player is amongst other people that they have to try and figure out. Now whenever the player finds something new or watches NPCs interact it updates their mental model of what makes the characters tick and what their motivations are.

The above should give an idea of what is possible, but as before, there are probably tons more to explore.

![Attached Image: example_iteration4.jpg]()

Now it's time to go back to the example scene and update it with the 4th and final layer. Let's add some sort of danger. Say the player is hunted by shape-shifting demons throughout the game and that these are also a big part of the story. This means the player won't be sure if the man behind the door is a friend or foe. We can tie this into the layer 3 stuff as well; as the player uncovers the narrative background they receive hints about the true nature of the man behind the door as well.

We've now gone from just having a really simplistic puzzle about opening a door to an entire story experience. The player is now under threat that there might be some kind of demon on the other side and is desperately trying to find clues on what the secret man's true identity is. At the same time, the man is also the key to a mystery, a mystery the player is very curious to figure out. The player is scavenging for the key, digging up more information as they go along, and when they finally find it they need to decide whether to use it or not. The basic gameplay hasn't changed much, but we've changed the wrapping and it totally transforms the experience.

Endnotes

What I think is extremely interesting about this approach is that it always forces you to think about story. Normally it's so easy to just be satisfied with a well-thought-out gameplay segment and to leave it at that. But when you follow 4-Layers you need to make sure that there's some story significance to the activity the player is currently doing. Story becomes an essential part of the game design.

It can also act as a filter. You can evaluate every gameplay scene and make sure it fulfills the criteria in each of the layers. This way you can easily tell if some segment is just filler, or lacks in some other way. This is a great way to keep the design on track and make sure there is a strong narrative focus.
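To make the filter idea a bit more tangible, here is a rough sketch of how a scene list could be audited against the four layers. This is my own illustration under stated assumptions, not tooling we actually use: the `Scene` class, the `LAYERS` tuple and the `missing_layers` function are all made-up names, and each layer is reduced to a one-line design note that is either filled in or not.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# The four layers of the approach, in design order (attribute names are assumptions).
LAYERS = ("gameplay", "narrative_goal", "narrative_background", "mental_model")


@dataclass
class Scene:
    """Hypothetical design-document entry: one short note per layer."""
    name: str
    gameplay: Optional[str] = None
    narrative_goal: Optional[str] = None
    narrative_background: Optional[str] = None
    mental_model: Optional[str] = None


def missing_layers(scene: Scene) -> list[str]:
    """List the layers that have no design note yet for this scene."""
    return [layer for layer in LAYERS if not getattr(scene, layer)]


# The locked-door example at its first iteration and with all four layers.
door_v1 = Scene(
    name="locked door, first pass",
    gameplay="search the room for the hidden key",
)
door_v4 = Scene(
    name="locked door, all layers",
    gameplay="search the room for the hidden key",
    narrative_goal="find out who the man behind the door is and what he wants",
    narrative_background="clues about the man, his comments, the key he hid",
    mental_model="shape-shifting demons: is the man friend or foe?",
)

for scene in (door_v1, door_v4):
    print(scene.name, "->", missing_layers(scene))
```

A scene that only lists gameplay is flagged as possible filler, while the full version passes. Since the mental model usually spans a large part of the game, not a single scene, a real design document would probably store it once at game level; keeping it per scene here is just to keep the sketch short.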

The method is not without its problems though.

First is that it requires a lot of planning. You need to design a lot of this up front and it's not very practical to build a scene from experimentation and iteration alone. Design documents are crucial, as there are just too many aspects to keep track of.

Second is that its core strength is also the biggest weakness. The gameplay and narrative are intertwined and if you change one the other needs to be updated too. This means that you need to throw out and remake a lot more than usual during development. But I don't see this as a failure; I see this as evidence that the approach really is bringing gameplay and narrative close together.

In a way this approach doesn't really change the core ingredients of a game. It just adds a bit of trickery on top. This is exactly what I like about it though. It doesn't rely on anything that we don't have at our disposal. And, as with all good storytelling, it relies on the audience's imagination doing the bulk of the work. I am really excited to see how this approach will turn out in the finished games. So far it's been of great use to us, and hopefully someone else will be inspired to give it a go.

Acknowledgments:

Adrian Chmielarz, for all the great e-mail discussions that led to all this and feedback on the talk.

Brian Upton, for letting me read an early copy of his book and providing the basis for the Mental Model section.

Matthew Weise, for providing valuable feedback to the lecture.

Ian Thomas, for copy-editing this whole thing.

This article was originally published on the Frictional Games blog, and is reproduced here with kind permission from the author.