It’s true that you don’t need to create a formal business plan in order to start a business. You can kickstart a business very quickly without having to plan out every detail in advance.

That said, there can be tremendous value in planning. Thinking through a business in advance is hard work and requires deep concentration (if you want to do it well), but the payoff is a significant increase in clarity and a better shot at creating or expanding a successful enterprise.

I spent most of last week creating a new long-term plan for my business, which I just completed on Friday. I hadn’t done anything this thorough since 2005. It was incredibly tough mental work, and I put in many 12-16 hour days in a row, sometimes working so hard that I literally fell asleep at my desk. Then I’d wake up and work on it some more.

Since I’ve just been through this process, let me share some thoughts on creating a written plan for your own business.

Planning for Yourself vs. Planning for Investors

There’s a big difference between creating a business plan for your personal clarity vs. creating a plan to attract funding. Most of the business planning information I’ve seen in books or online is heavy on the latter side. If you don’t need outside funding, you can probably ignore 30-50% of the typical suggestions for what to include in a business plan.

There can be value in doing some of the work that it would take to impress an investor. Thinking through the financials is a good idea, but in practice a lot of what goes into an investor-based plan is actually persuasion as opposed to serious planning. Financial projections can be incredibly subjective, and you can’t predict with much accuracy what’s going to happen under real-world market conditions anyway. Overplanning is also a waste of time — you need to guard against filling your plan with irrelevant details that simply won’t matter one way or another.

I set financial goals for my business, but I don’t bother making predictions which are merely guesswork. Instead I spend more time planning how my business can adapt to whatever conditions may occur.

My business plan is created solely for me, and to a lesser extent, for those who work closely with me. I’ll never show it to an investor or banker because I’m confident I can continue to grow the business with a strategy that requires no outside financing.

Thinking Strategically

Business planning helps you think strategically about the road ahead. You only have so much time each day, month, and year to make decisions and take action. For many business owners those actions are chaotic and unfocused. They start projects they never finish. They miss opportunities by failing to act promptly. It’s very easy to hit a plateau and get stuck there for years.

A clear, committed strategy helps to cut through all of that. It sharpens your day to day choices. It provides an intelligent framework for action.

The problem, however, is that there are many valid strategies for growing a business. You will undoubtedly have more opportunities than you have time to pursue them. You can’t do everything well. If, in the back of your mind, you’re oscillating between several different primary strategies that don’t mesh incredibly well, you’ll have a hard time growing your business.

I could grow my business in a variety of different ways. I could blog more often. I could write more books. I could expand into videos. I could expand my workshop offerings and begin doing them in different cities. I could invest in more marketing and PR. I could do guest blogging and accept more interview requests. I could get back into podcasting. I could start a membership site or paid subscription service. I could hire a few personal coaches and open a coaching program. I could turn my blog posts into products to sell. I could expand my social media presence. I could launch my own affiliate program (for workshops and future products). I could do more joint venture deals.

We could do any or all of these things, and many of them would be effective. But we can’t do all of them well. We might be able to do one or two of them well at any given time.

Thinking strategically requires deciding which fronts not to open. To create a practical and realistic business plan, I had to make some tough choices about which directions not to pursue. At first glance, almost everything looks golden. But with some deeper probing and a lot of analysis, I could discern which opportunities are truly the best relative to the others.

The Planning Process

Planning is an iterative process. In many areas you won’t know the best decision to make. At best you’ll be able to identify some options, but you won’t have much clarity about which possibilities make the most sense.

The way I resolve this is by taking a stab at each part. You can’t leave things in a wishy washy state, or you’ll end up with no workable plan at all. You have to keep pushing towards resolution and convergence. A good way to do this is to force a decision in a particular part of your plan. Then see how it fits. If it doesn’t feel right, yank it out, and try another possible solution. Repeat till you get it right.

Planning is an exploration of the potential solution space. To find the right combination of products, pricing, marketing strategies, staffing, and more, take some guesses and see what the big picture looks like. Then notice how those different elements mesh together.

It’s much like creating a song. Choose some notes and sequence them together. Then listen to the result. Does it sound harmonious? At first it probably won’t. But what’s creating the disharmony? Can you identify one misalignment? And can you fix that?

Then you keep tweaking and listening, tweaking and listening. Write out each new idea in great detail. Then read it back.

Sometimes you’ll get inspired ideas. Sometimes you’ll have to use a lot of perspiration, testing multiple ideas to find the right one.

My business plan is only 23 pages, but I probably wrote at least triple that to create it. For some parts of my business, intelligent solutions were fairly obvious. But in other areas, the right approach wasn’t obvious at all. My first stab produced a lot of text, but when I stepped back and read it within the context of the rest of the plan, it wasn’t harmonious. Perhaps my website would be delivering one message, but my products and pricing were likely to be incongruent with that message; the predicted consequence of that disharmony is that my business would end up attracting people who’d resist being customers — not a very sustainable approach.

This is a really important point to emphasize. To achieve convergence you can’t just sit and ponder until the right idea pops into your head. You have to take some guesses and run with them. Take a stab and fully document how it’s going to work, as if you’ve already made your final choice. Then look at it within the context of the rest of your plan. Does it seem harmonious? Does it support the other areas beautifully and elegantly?

My major rule here is that if it doesn’t feel elegant (or sound harmonious, or look beautiful — take your pick of modality analogies), it’s wrong. I know I have the right solution when a wave of awe washes over me, when I have to get up out of my chair and pace around so I can just be with that feeling for a while. Then I know I’ve figured out a key piece.

Deep Honesty

Deep honesty means being able to look at what you’ve planned and answer these questions:

- Is this an intelligent approach?

- Is this an honest approach?

- Is this a loving approach?

- Is this a strong plan, or am I caving to weakness and low standards?

- Is this a harmonious plan? Is it elegant and beautiful?

- Will this be a path of continued growth for me?

- Is this a courageous path, or am I playing it safe?

This is akin to asking a musician after many days of hard work, What do you think of your finished song? Will you get a fair and honest assessment, or will the answer be overly biased by the musician’s personal investment in the song?

There’s a temptation, especially when you’re tired after working so hard, to capitulate to a flawed plan. At some point you’ll want to say, This is good enough. You’ll want to label weak as okay, okay as good, and good as great. You’ll want to turn in B-quality work hoping to get an A.

But if the plan isn’t harmonious and elegant… if it doesn’t knock you back in your chair… if it doesn’t quicken your pulse like a beautiful song… you’re not done. You mustn’t say “it’s good enough.”

Hold out for the truly elegant solution — not by waiting, but by continuing to diligently explore until you find it.

How do you know when you’ve found a beautiful solution? If you have to ask, you haven’t found it yet. When you find it, you’ll know. If you don’t know that you’ve found it, you haven’t.

Listen to your very favorite song, one that you’d consider a masterpiece. When you listen to it, ask how you know it’s beautiful. You probably can’t articulate exactly why. You know that it’s good by how it makes you feel. If you have to seriously ask yourself whether the song is beautiful, you already know that it isn’t. Beauty is recognized, not analyzed.

When Martin Gore wrote the song “It’s No Good,” he knew he’d created something good (ironic given the title). He called Depeche Mode bandmate Andy Fletcher and told him, “I think I’ve written a number one.” And in many countries, it did hit #1 (source: DM biography Stripped).

This is how it is with a good business plan. When it’s finally done, you’re compelled to take a deep breath and say something akin to, “I think I’ve written a number one.”

When you’ve created a song you know is amazing, you can’t wait to share it with people. Similarly, when you have a business plan that you truly love, you can’t wait to implement it. But if your song (or your plan) is weak, then moving forward is more difficult. You’re more likely to procrastinate because you know you haven’t done your best work.

If you don’t love it, you’re not done. A plan you don’t love isn’t finished. How do you know you love it? Again, if you have to ask the question, you’re not there yet. A great plan will excite you.

What to Include

There are many guides to creating a business plan, but so many of them are filled with fluff, or they may be inappropriate for your particular business. Most of the ones I’ve seen are ridiculously archaic. In doing some research, I came across a business planning tutorial from Entrepreneur Magazine. Their template appears to be based on a manufacturing business. Seriously… what percentage of entrepreneurs are starting new manufacturing businesses these days? Perhaps they should note what century this is.

If you need to create a plan for investors, then you may want to follow conventions that they expect. But if, like me, you’re just creating a plan for yourself and your team members, then make sure the plan fits your business. Feel free to take advantage of online templates, but adapt them to your needs. If a section seems irrelevant, it probably is.

My plan has the following sections:

Overview – What’s the basic concept of the business? What is its purpose?

Business Description – What does the business actually do? Who are its customers? What are its products and services? What value does it provide? How does it earn income? What’s special or unique about it?

Market Strategies – What’s the target market for the business? How will you position it? How will you get the word out and reach potential customers? Why should anyone care about what you can provide? What’s your distribution strategy? What kind of PR will you do? Who’s your competition in the marketplace? What’s your strategy for dealing with competition? What’s your search engine strategy?

Pricing – What’s your pricing strategy? Do the numbers make sense? How will this affect your market positioning? This can be one of the most challenging sections to get right.

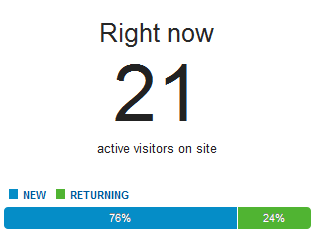

Social Media Strategy – How will you leverage social media? How does social media mesh with the rest of your business? Can you use it intelligently without seeing it become a distracting diversion? I haven’t seen any business plan templates that include a separate section for social media, but I include it because it’s a part of my business (blog, forums, Google+, etc.), and it’s a growing segment that will likely be around for at least the rest of the decade. StevePavlina.com’s own discussion forums will soon pass 1 million messages posted.

Development Plan – How will you take the business from where it is now to where you want it to go? This is where you linearly plan out the steps to go from A to B. Document the key processes your business will need to execute. Identify the major risks, and decide how you’ll manage them. I prefer to spin off separate documents for this section, so it doesn’t become too bloated. For instance, I have other planning docs for my staffing plans, my process for creating and delivering workshops, my process for creating new products, etc. Those plans are 2-7 pages each, so if I included them in the main doc, it would probably be around 50 pages in length. Expect to spend a lot of time on this part of the plan.

Business Finances – In this part of the plan you can include things like balance sheets, income statements, and cash flow statements. You can analyze your costs as well. For a new business these will be projections (which are often just guesses). For an existing business you can use historical data and also include projections if you so desire. I don’t bother to include this section in my plans because my business has been profitable for years (October 1st, 2011 was its 7-year anniversary). I’m not trying to impress any investors, and I can use my accounting software to review my financials whenever I desire. I don’t bother to make future projections since I think it’s largely a waste of time. Another reason this section is largely irrelevant to me is that my business has a very low cost structure. My growth plans don’t require spending much cash, and the existing cash flow will cover it. I also have plenty of ways to quickly adapt to a cash crunch, so I simply don’t need to pay as much attention to this area. This would be an important area to fill out if you’re investing a lot of capital into the business, and you need to convince yourself and/or others that you have a sound plan for recouping that investment. But if your projections ultimately amount to guessing, why bother?

Closing – I like to include a half-page closing of just a few paragraphs to summarize the key strategic decisions. Since I already have a business, my main focus here is about what I need to start doing differently in order to implement the plan. What do I need to start doing? What do I need to stop doing? What do I need to change about the ways I’m doing things?

Thinking Holistically

Each part of a business plan is like a puzzle piece, and the entire plan is the puzzle. Your puzzle may have 100 pieces to it. But you may be able to identify 500 puzzle pieces. Many of those pieces will look like they fit the puzzle, but when you include them, it will feel like the puzzle isn’t quite coming together.

A holistic plan is one where all of the pieces support each other to create a singular picture. When you have this picture, your business will seem much simpler. Without this picture all you have is a jumble of pieces, each one demanding your attention. You don’t have the capacity to give all 500 or even all 100 puzzle pieces your full attention. But you can give your attention to the big picture, and if those 100 pieces all fit together beautifully, you’ll be giving them the right level of attention when you focus on the big picture.

As I created my business plan, I realized that the process requires a lot of deleting and letting go. There were some puzzle pieces I was very attached to, pieces I’d assumed should be important components of my business, but when I included them, I had to conclude they didn’t fit the big picture.

Letting go of the unneeded bits requires a lot of self-awareness. I had to pause many times and admit to myself that I didn’t feel good about a particular aspect of my plan. Occasionally I worked through the math behind an idea, or I tried to project the idea forward in time to think about the long-term consequences. In some cases I could see that 5-10 years down the road, I’d be left with a very undesirable situation, even though the first year looked great. Other times my intuition would be the dissenting voice. If any part of me disagreed with the idea, I knew I had to rework it or let it go. My commitment was to create a plan that made logical sense, that felt good, and that satisfied my intuition.

One thing that helped me tremendously was to do a 7-day all raw no-fat cleanse before I began this planning process. I started with a 24-hour water fast, and then for the next 6 days I ate nothing but fresh fruits and vegetables. No salt. No spices. No oils. No sweeteners. No overt fat sources like avocados, nuts, seeds, or coconuts. Just raw, water-rich fruits and veggies, water, and some occasional herbal tea (no caffeine). I lost 4.5 lbs during that week, but that was nothing compared to the mental clarity I experienced. After about 3 days, my mind became super sharp, as if I had more working memory available for conscious thought. I wasn’t even going to make a business plan at this time, but when I started working on other planning documents, I couldn’t help but notice how sharp my thinking was. I blazed through a day’s worth of work in a couple hours. When I tackled really hard problems that had challenged me for months or years, simple solutions were suddenly obvious. I felt a bit stupid for not seeing them earlier.

I realized I had to take full advantage of this heightened clarity for as long as it lasted, so I dove into this business planning project and worked each day till I was ready to drop. I’m so glad I did because I think I was able to do a better job in a week than I probably would have been able to do in a month if I didn’t have this extra clarity. If you’ve seen the movie Limitless, the experience was almost like taking one of those pills — not quite that good, but enough to notice a difference.

I’m still feeling this heightened clarity now, but I can tell it’s not quite as high as it was near the end of the cleanse week (which ended last Sunday). I’m probably still enjoying 60-70% of that boost though. I’ve never done a cleanse like this before (I’ve done low fat but never no fat), so this was a new experience for me. I’ll very likely do more cleanses like this when I want to regain that mental boost. The productivity I’ve been enjoying these past couple weeks has been amazing. I’d love to learn how to create this level of mental performance permanently, but I’ve had problems with eating very low-fat in the past for more than 2-3 weeks (like having my skin become so dry that my knuckles were cracked and bleeding).

I’m not saying you have to do a similar cleanse to create a decent business plan, but I am suggesting that it makes sense to be at your mental and physiological best when you do it. The sharper your mind is, the better your plan will be. This is incredibly challenging work that will stretch your brain to its limits. Give yourself every advantage you can.

Competitive Advantage

One of the most important parts of a good business plan is identifying your business’ competitive advantages. Many planning templates have you start by doing market research and looking for market gaps. Then you deliberately target those gaps to position your business competitively relative to existing businesses. You look at what the other players are doing, and you target where they’re weak.

I prefer to approach this from a different angle, especially for small Internet businesses. Start by looking at your personal strengths. How are you different from others? What can you do better than most people? Or what could you eventually learn to do better than most if you worked at it?

If you start with a strengths-based approach, then you need to massage your strengths into a competitive advantage that people will care about. A strength is probably something that matters only to you. It may take some work to transform it into a benefit for your customers.

One of my strengths is that I can develop quality content on many topics much faster than most of my competitors can. I can create in an hour what takes many of them half a day to a day to do.

Being a prolific content creator isn’t necessarily a competitive advantage, but it can be turned into one. For instance, by using this strength to write lots of quality free content, I was able to build very high web traffic in just a couple years. This was largely under my direct control too. I didn’t need Oprah to host me on her show. I didn’t need outside investors to give me money. Now I’m able to leverage this traffic to do things that most of my competitors can’t, such as delivering workshops without spending any money on marketing or promotion. I can also develop workshops faster, which allows me to launch several new workshops simultaneously instead of doing the same one or two over and over.

While you may not like the idea of thinking competitively, it’s wise to view your business through this lens and give it some careful thought. People have an incredible array of choices today. Why on earth should they buy from you instead of from someone else? If you can’t come up with a good reason, don’t expect your customers to figure it out for you. They will indeed buy from someone else.

If you can’t think of any major strengths, then what makes you different? What sets you apart from other people? If you embrace your differences, you may see that you can turn them into strengths. For instance, I live in Las Vegas, which is different from where most people live but not necessarily better. However, I’m able to turn this into a strength by doing workshops on the Las Vegas Strip, which is a fun and lively place. I take full advantage of the location by inviting people to do special exercises in the casinos and on the Strip and by encouraging people to hang out socially after hours, see shows, etc. This provides them with fun, memorable experiences that they won’t have at other people’s workshops. Living in Las Vegas is merely different, but with a little creativity it can be made into a strength.

What’s different about you or your business but not necessarily better? Can you massage one or more of those differences into a strength for your customers? Is anyone else already using similar differences to create a competitive advantage?

Thinking Long-term

Business planning will challenge you to think long-term, years and decades ahead.

I use a time frame of 10-20 years for most aspects of my plan. If I think only 6-12 months ahead, I fail to see how particular paths can magnify into problems down the road, and I overlook major opportunities. If I try to think more than 10-20 years ahead, my plan becomes too speculative, although I can think further out for some aspects that are likely to remain stable.

A lot can change in 20 years. If you had a PC 20 years ago, you probably had a 386 or 486 running MS-DOS 5.0 and possibly Windows 3.0. Windows 3.1 didn’t ship till 1992, and Intel didn’t ship the Pentium processor till 1993. No smart phones. No iPods or iTunes. No web browsers. No Google or Yahoo. No YouTube. No social media unless you liked BBSing. You may have had email, but you probably checked it using a slow dial-up modem. If you did use the Internet, you may have accessed it via CompuServe, Prodigy, or AOL. If you owned a video game system, it was probably a NES, Super NES, Sega Genesis, Turbo Grafx, or Neo-Geo… or Game Boy or Game Gear for a handheld. If you went to the movies, you’d have been wowed by the 3D special effects in Terminator 2.

So if so much is going to change, how can you possibly create a long-term plan that makes sense? Isn’t planning pointless in light of such uncertainty?

The purpose of planning isn’t to predict the future. The purpose of planning is to sharpen your present day decisions and to give your business an intelligent basis for growth.

It’s true that you can’t know what’s going to happen even a few years from now. Surprises will occur. Some of those surprises will help your business. Others will throw you for a loop. No matter what, you’re going to have to adapt as you go along.

But some aspects of the future may be fairly predictable. I feel good in predicting that personal growth will still be important in 20 years. It’s been around for thousands of years. It will probably survive a few more decades. Actually I predict it will be even more important in 20 years than it is today. For at least the last few decades, this field has been trending towards expansion, growing by many billions of dollars in annual revenue within the past five years alone. People are spending more on personal growth than ever before. And as far as I can tell, this increase is expected to continue for many more years.

One of the reasons personal growth will become increasingly important is that change is accelerating, especially technological change. The job market will continue to shift. To be competitive workers, people will need to adapt more quickly than ever to changing circumstances. They won’t be able to trust that they can just get a job and keep it for decades.

I predict that traditional educational systems like universities will become increasingly less relevant, failing to adapt quickly enough to marketplace changes. By the time a student graduates from a 4-year degree program, so much of what they learned will already be obsolete. This is already a major issue today, but it will continue to get worse. College grads will enter the workforce wholly under-prepared for the competitive realities of the workforce. This creates tremendous opportunities for the personal growth field (which overlaps traditional education) to fill in the gaps. There will be increasing demand for faster, more intelligent, more practical sources of education — forms that can adapt their curriculums more quickly to changing circumstances. Archaic elements like tenure only make it harder for old systems to adapt, so if those structures aren’t replaced with more flexible systems, those institutions will be out-competed by smart entrepreneurs who are willing to embrace change. To some degree this is already happening, and I expect this sort of change to continue.

The business opportunities in education alone are staggering. I’ve lost track of how many millionaires I’ve met who built successful businesses teaching people important skills that aren’t normally taught at traditional universities. By leveraging the Internet, they can do it at much less cost for their students, they can do it faster, and they can keep their programs modern and practical under today’s conditions.

All this growth and expansion will create more confusion and stress. Self-discipline and focus will become increasingly important qualities for people to develop since distractions will surely keep expanding. The demand for better management of one’s life will increase significantly.

You don’t need to be a technologist to make some reasonable predictions about the future. Just look at some of the general trends that have been building for years, and project them forward. Smart phones will get smarter and will become even more common. Tablet computers will become more powerful and more common. Data transfer rates will increase. The Internet will become much bigger. New major players will emerge. There will be more interests competing for your attention than ever before.

Some major breakthroughs will occur, and human beings may begin integrating tech-based enhancements onto or into their bodies, but the concept of growth won’t go out of style. Very likely it will become even more important. The fastest growing, fastest adapting people will have a major competitive advantage over those who are slow to adapt. This remains true whether the world of the future becomes more abundant or more scarce.

By making some reasonable predictions about the needs of future humans (or cyborgs, or whatever we become down the road), you can make decisions today that set yourself and your business on a path to long-term success. You can avoid getting bogged down in short-term thinking that leads you astray. You can build a business to grow in alignment with the direction that the world is heading, not where it’s been.

I can see pretty clearly that people are going to need a lot more help with focus, self-discipline, and self-control over the next several years. I can see that many traditional educational institutions are going to get worse in terms of their ability to teach students skills they’ll need in today’s workplaces, especially as they have their budgets slashed. I can predict that more people are likely to access my work on devices that aren’t a desktop computer or a laptop. This helps me make intelligent choices about how my business can serve those needs while remaining flexible and adaptable.

It’s important to get clear on the difference between your medium and your message. Your message can remain fixed, even under changing circumstances, but your medium must remain flexible if you want to have a competitive business across decades in time. My message is conscious growth, and that message can adapt to many different media. I don’t need to worry that blogging may someday go out of style. Ten years from now, most of our interactions may occur through a medium other than blogging. Growth is my business, not blogging, and growth can be communicated in many forms. With a plan based on your message, you don’t need to fear change; rather, you can be excited by all the new opportunities change can bring. (For more on this notion, read The Medium vs. the Message.)

Clarifying the Core

When you finally complete your business plan and clarify the big picture, you may feel a newfound sense of excitement about it. Ultimately the core of your business will probably be something very simple, perhaps something so simple that you were inclined to overlook it.

In my case when I saw the big picture, I realized that it ultimately came down to one simple principle. In order to have a business that really works, I have to focus first and foremost on pursuing my own path of growth. Making money doesn’t work as the main focus. Creating products or doing workshops can’t be the main focus either. In order to succeed, I have to make sure the business is tough on me. I can’t allow it to become so easy that I no longer feel challenged.

When I feel challenged, I’m much more motivated, so I work harder, and my business thrives. When it gets too easy or repetitive, I lose interest. If I don’t feel I’m growing by running the business, that’s a problem. So I have to run it in a way that keeps me in that sweet spot of challenge. That sweet spot, however, is a moving target. It’s not a static spot. And so I came to realize that the only way I can make my business viable and successful in the long term is to relate to it as a vehicle for my own growth and development.

If I stop growing, my business loses its value to me. I begin to check out from it. I’ll turn my attention elsewhere to keep growing. And the business will ultimately suffer for that.

Intuitively I’ve known this all along, but it was difficult to see it till I worked through all the details and finally understood it logically too. It may seem like an emotional or even an irrational choice to define the primary purpose of my business as serving as a vehicle for my own growth. But when I worked through the consequences of that focus, I understood that if I make this my primary focus, then many other intelligent choices flow smoothly from there. I have to help other people grow in order to grow faster for myself — I can’t grow much in a vacuum. I have to innovate. I have to make the business financially sustainable since going broke isn’t going to help me as much as creating more abundance will. I already did the going broke thing more than a decade ago and don’t see much point in repeating it.

This simple understanding helped me remove many puzzle pieces I might otherwise have kept. I now see with much greater clarity that it’s unwise to try to expand my business in directions that won’t help me grow.

I don’t think this is particularly unique though. I think the appeal of entrepreneurship for many people is the long-term personal growth that’s gained from this path. That’s what keeps a business fresh and exciting for the founder. That’s what got me out of bed at 5am this morning. When that growth is no longer present, it’s a good time to sell or leave, so you can move on to new growth experiences.

What’s really interesting about this is that even though I mainly used the objective perspective to develop this business plan, the end result is nicely congruent with the subjective perspective as well. What does a business matter in a dream world? The subjective value is how the business affects you, the business owner. It doesn’t matter how much dream money you accumulate or how many dream characters you can count as customers. What matters is the story you’re creating and how it affects your character’s development. This is of course perfectly in line with what we should expect from the Equivalency Principle, which I’ll be covering in more detail at the Subjective Reality Workshop in less than two weeks.