With only 20% of all applicants being selected for funding, the two agency reps and the game developer stressed how important it is to sell yourself. It wasn't until this moment, when the slightly scruffy toque-wearing game developer said this, that I realized how important sales technique is for the indie dev. Fortunately, this is something I have experience with. And I am happy to share these techniques with the rest of the indie dev community.

In this article, I attempt to demystify the science and psychology of selling (pitching). I will focus on selling to EXTERNAL parties (strategic partners, customers, etc.). If people see value in this, then I'll take the time to describe how to sell to INTERNAL parties (your team, your boss, etc.).

I'm writing primarily for small indie game developers who will often need to pitch themselves -- perhaps to journalists, publishers, investors, potential hires, strategic partners, game contests, government organizations, incubators, and many more. However, these principles of selling aren't specific to game development. Anyone can use them to build their business, land a job, or convince someone to marry them :)

Before I take advice from anyone, I like to know their experience. So before I go any further, let me digress a moment to summarize my professional sales experience.

I began selling as a full-time, commission-only computer salesperson at a Canadian electronics retailer called Future Shop (similar to Circuit City or Best Buy). The company paid 25% of the profit on whatever you sold. As you can quickly see: 1) recruits either learn to sell FAST or die; and 2) if you can sell, your income has no ceiling. I took to it like a fish to water. But I also took my new profession seriously: I learned everything I could from the extensive training program (based on the Xerox method), managers, co-workers, books, tapes, and video training series from motivational speakers such as Zig Ziglar. I did well and eventually became a sales trainer for new recruits at the corporate head office.

Now, sales execs generally look down on one-to-one business-to-consumer (B2C) sales, and retail in particular -- for some good reasons, I must admit. It's usually done very poorly. But here is one important advantage: The number of pitches you can do in a day in retail is astronomical: 20-40 pitches a day, every day, compared to business-to-business (B2B) sales, which allows for 1-2 a day at best. That kind of regularity, repetition, and low cost of failure (if you misspeak and lose a sale, someone new will be along in the next 5 minutes) is the perfect training ground for learning how to pitch.

I moved into B2B sales, first for a web dev company (1 pitch a month) and then in business for myself (about 1 pitch a month). I was still 100% dependent on my ability to sell, but now I had the pressure of supporting my staff and other overhead, too! For more than 10 years, I sold custom mobile software projects ranging from small ($25-50k) to large ($700-900k). Over the years, I reckon I've sold more than $6 million across 30+ projects with a 95% closing percentage. My pitches were primarily to a C-level audience (CEO, CFO, CTO, CMO, COO).

To conclude this summary, I'll share one of my proudest sales moments:

I was about two years into my business. I was introduced by conference call to a mammoth prospective customer: We had 4 employees, and they had $4 billion in annual revenue. They used IBM for most of their IT consulting and were considering a mobile software project -- and using IBM for it. I flew into town (I still can't believe I managed to get the client to pay for my flight and hotel!), spent a day with the CTO in the board room, flew home, and closed the deal the following week by phone. Take that, Big Blue! :)

Definitions

B2B sales is most similar to what the typical indie faces -- whether you are pitching your game to a console manufacturer or a journalist. I will use the term "customer" to mean the party you are selling to.

When I use the term "sale," I want to be clear about what I mean. Simply put, a "sale" is when you convince someone of something. It is a transaction of the mind. It's like Inception -- but this time everyone is awake :) Once this is accomplished, handing over money, signing contracts, writing a feature article, or any other action the customer takes is secondary. It wouldn't have happened if you hadn't convinced them it was worth doing in the first place.

OK, let's get to it!

1. Every Buy Decision is Largely an Emotional One.

This is the most important and shockingly counter-intuitive truth I can share with you. If you don't remember any other principle, remember this one!

When making a decision, people like to think they are rational and logical. While they know they have emotions, they don't understand or believe that emotions probably drive 80% of their decisions. Don't burst their bubble! The poor souls living in the Matrix are happy there!

For example, let's say you are house shopping with your spouse. You look at two houses with roughly the same features, location, and price. But the more expensive one, which is slightly older and needs more work, has a great living room that seems perfect for family visits. On a pro/con list, you should not choose this one -- but most people do. Why? Because an emotional attachment drives what feels like a fully rational decision.

Have you ever gotten a job you were slightly underqualified for? Ever NOT gotten a job you were overqualified for? If your answer is “yes,” you know from experience the huge role emotion plays in human decision-making.

It is NOT all about features, merit, dollars and cents, brand or background; sales technique can overcome ANY weakness or hurdle if executed the right way. You too can beat IBM! Or you can be in the best position (factually and objectively) and totally blow it :) Success is within your grasp -- something you can control through sheer determination.

What I’m trying to say is that time spent learning and practicing sales technique will increase your closing percentage -- NOT because your product changed, but because of how you pitched it. More features won't sell your game; you will!

Pro Tip: My good friend, an English professor, taught me that when writing persuasive (sales) material for a friendly audience, you should save your strongest point for last. But when writing for a skeptical audience, lead with your strongest point, because they may never read any further. Good advice!

2. Sell Because it's Your Job.

No one else will sell you but you. If you won't sell you, you are screwed.

Most people are uncomfortable selling. I think salespeople rank just below politicians and lawyers on the Slimy Job Top Ten list.

I believe two major factors contribute to this:

- Because you gain something from selling, the act somehow immediately feels disingenuous. Your motives don't feel pure.

- Selling requires risking personal rejection and failure. Someone may make a face at you, respond with something hurtful, or (worse) ignore you completely.

This was true for me. I'm an introverted computer nerd who tried to attract the ladies with well-crafted autoexec.bats. I dislike meeting new people. I'll never forget the lesson a Future Shop manager shared when he noticed several shy new recruits reluctant to approach customers:

Have you ever been at a bar and seen a really attractive person across the room you'd like to meet? But you are too afraid to approach him or her? Maybe you think they are out of your league, or just want to be left alone, or look busy, or some other excuse.

Now consider this: What if you were hired by the bar owner to be a greeter? He made your job very clear: "I want you to make sure people have a good time here, so make sure you talk to each person at least once and see how they are doing."

Now how would you feel about approaching the attractive person? It's way easier! Whether it goes well or poorly, it doesn't matter anymore; you are just doing your job. You no longer feel threatened -- or threatening.

The difference between the two scenarios is not one of facts or features. Neither you nor the other person has changed. The change happened inside you. Now you feel you have permission, or even the right, to make the first move.

You need to get to the place where you give yourself permission to approach that publisher, journalist, voice actor, or general public. Until then, you will simply give yourself too many excuses not to sell.

Pro Tip: In every discussion with a customer, a sale is being made. You are selling your ideas, but the customer is selling too! Either you are convincing them to buy, or they are convincing you why they shouldn't. Who will be the better salesperson?! Notice in the previous paragraph that you either give yourself permission or give yourself excuses. A sale is being made here too! You either sell yourself on the idea that you are allowed to sell, or you sell yourself on the idea that you aren't.

3. If You Don't Believe It, No One Else Will.

Humans are born with two unique abilities:

- to smell a fart in an elevator

- to smell a phony

In order to sell well, you must have conviction. You have conviction if you truly believe in yourself and your product. While I must admit it is possible for the highly skilled to fake conviction, there is no need to do so. Real conviction is easy and free when you are in love with your product. It will ooze out of every pore, in little things like the tone of your voice, your word choice, the speed at which you speak, and the brightness of your eyes. Conviction is infectious. People want to be caught up in it. This goes right back to point #1 about the emotionality of selling.

But why does conviction sell? Because a customer is constantly scanning you to see if what you are saying is true, and your conviction shapes how they read you. Imagine you are trying to convince a friend to see a movie. Your friend thinks:

- He appears quite excited about this movie.

- I would only be that excited and passionate if it was a really good movie.

- Ergo, the movie must be really good.

In his book The Making of Prince of Persia, Jordan Mechner records the process of making the first Prince of Persia game (which was incredible for its time). The production team believed in the project immensely, but the marketing department did not. After marketing chose the box art and the title shipped, this great game had dismal sales for its first year. Only when a new marketing department came in, believed in the product, and revisited the box art and marketing plan did the game start selling.

Conviction gives the customer the data needed to sell themselves into believing what you are saying.

This dovetails nicely with my next point.

4. Want What is Best for the Customer.

I'm currently doing a sales job on you. (Oops, I seem to have broken the fourth wall!) I'm trying to convince you that what I am saying is true and that, when put into practice, it will make you better at pitching your game.

Why am I typing this at 2:36 a.m. when I could be sleeping -- or better yet, playing Mario Kart 8? Because I genuinely believe this information will help someone. It costs me very little (some time at the keyboard) and could make a real difference in someone's life.

See, I'm not typing this article for me; I'm doing it for you. Whether or not I benefit from doing so, my primary motivator is to do something good for you.

If you want to get your game featured on a certain site, stop thinking about how being featured is good for you and start thinking about how featuring you is good for them. Arguments made from the perspective of their benefit will hit harder and resonate longer.

So how can you know what is good for your prospective customer / journalist / publisher / government agency?

Do your homework. Know what makes your target tick. Find out what motivates them. Discover what is important to them. More importantly, find out what is not important to them.

For the conference I attended, the purpose of the government program was to generate digital media jobs in our province. The overseer specifically told us: "When you write your proposal, be sure to point out how this will lead to job creation."

This is great advice for two reasons: The customer is not only saying "Tell me how it's good for me," but also "I'm lazy, so make it easy for me." In other words, the customer is ‘tipping his hand’ by saying "All things being equal, the proposal that more easily shows what's in it for me will be chosen."

Don't rely on your target audience to do the work of understanding. Your pitches will vastly improve if you spoon-feed them the goodness!

Pro Tip: Knowing what NOT to say is just as important as knowing what TO say. For example, I regularly listen to the Indie Haven Podcast. On one episode, all four journalists went on a bit of a rant: if you are contacting them for the first time, do not tell them about your Kickstarter. Tell them about your GAME! They said that if you start your email with your Kickstarter, they will stop reading. So know what NOT to say, and avoid the virtual trash bin!

5. Don't Say What is True, Say What is Believable

I had just started my software company and was having lunch with a friend, a veteran entrepreneur and millionaire, to get some advice.

During the soup course he asked, "So what does your software company do?"

"We make amazing custom software," I answered.

"I understand that, but what specifically are you good at?"

"Here's the thing: we are so good, and our process is so strong, that we can literally make any kind of software the customer wants -- be it web portal, client server, or mobile. We are amazing at building the first version of something, whereas many companies are not."

"That may be true, but it isn't believable."

I dropped my spoon in shock.

Maybe your role-playing game is 10x more fun than Skyrim -- not just to you, but empirically, through diligent focus group testing. But don't try to approach a journalist or publisher with that claim. It may be true, but it certainly isn't believable.

What is true and believable is, "If you liked Skyrim, you'll like RPG-I-Made." Ever seen a tagline or quote like that in an app description? Yep, because that is as far as you can go without crossing into "unbelievable" territory.

6. Create the Need

Every sales pitch is like a story, and every story is like a sales pitch. Let me explain.

You can't give an answer to someone who doesn't have the question. You can walk up and down the street yelling "42!" to people -- but if they aren't struggling to know the answer to Life, the Universe, and Everything, it won't mean a thing to them.

You can't sell a product/idea to someone who doesn't have a need. Every pitch follows the three-act story structure:

Act 1: Setup

Act 2: Establish the need

Act 3: Present your solution

We see this in The Lord of the Rings:

Act 1: Frodo is happy at home, and life is good. We meet a bunch of characters at Bilbo's birthday party. -- Setup

Act 2: A ring will destroy everything Frodo loves. And people are coming to get it right now. -- Need

Act 3: The fires of Mount Doom can unmake the ring. Frodo tosses it in, by way of Gollum. -- Solution

Study the first part of infomercials to see how need can be quickly established.

Humans have plenty of basic latent needs/desires you can tap into. You don't need to manufacture a new one. When it comes to gaming, a simple one is "to feel awesome." Pretty much every game I play makes me feel awesome. Now, I may or may not be awesome in real life, but I have a need/desire to feel awesome -- and games fill that need nicely.

Bringing it back to the government program, what is their need? They are handing out money and tax incentives. At first blush, there doesn't seem to be a need that I can tap into. But applying principle #4 of what's good for them, we can do our homework and discover that if the program has $20 million, they HAVE to give that money out. The people working there are not evaluated by how much money they keep; they are rewarded by how much they give away. They literally have a need to give away money. But not to just anyone; they need to give it to studios that will manage it well and create long-term jobs in digital media.

As a final example, notice how I establish need for this article in paragraphs 5 and 6. This article is based on the common need for indie game devs to promote themselves.

7. Talk to the Real Decision Maker

Who is the best person to pitch you? You. So don't assume that all the time and effort you spend pitching a minion means they will pitch their boss on your behalf just as well.

Aragorn did not find a mid-level orc, explain his position, and then hope the orc would make an equally impassioned presentation to Sauron. Aragorn knew he needed to climb the corporate ladder. He marched straight to the Black Gate to take his issue up with Sauron directly!

Throughout most of my B2B sales career, I initially got in the door through a mid-level manager like a project manager, IT director, or operations manager. These people have a "felt need": their team needs to do something new or is inefficient, and it needs software to solve the problem. But a $250k decision is way beyond their pay grade; they need the CFO and maybe the CEO to make the decision. You can spend hours and hours pitching the minion with amazing props and demonstrations, only for them to turn it into a 3-line email telling their boss your presentation was very nice. Aaarrrggghhhh!!!

Even worse, what if the competition is talking to the CEO over lunch at the country club while you are spending all your efforts on the minion?! Flanking maneuvers like this are a common reason for losing a sale.

Remember in point #1 how all decisions are really emotional? By filtering your pitch through someone to the CEO, all of the emotional trappings disappear; it literally is just features/functions on a page. Meanwhile, the sales guy at the country club is showing interest in the CEO's children, sharing stories of his own, and having a good laugh. All things being equal, 9 out of 10 times when the CEO has to decide, he'll go with the person he met. Everyone trusts what they see with their own eyes more than what was reported to them by another. Use this to your advantage.

This doesn't mean you shouldn't talk to minions or should treat them like a waste of time. That is mean and dehumanizing, and you won't get anywhere with it. My point is not to RELY on the minion to do the sales job for you. You have to climb the corporate ladder to the actual decision maker and give it your best. For example, when I organize a pitch session with a mid-level manager, I make sure their boss is invited to the meeting. Or I do the whole pitch to the mid-level manager and then ask, "Do you think your boss would see value in having this information, too? I would be happy to come back and run through it." If they are impressed with what you've done, they will be more than willing to move you up the ladder.

Now, big companies are wise to these ways and may have strict rules on who can meet with external parties. This is frustrating. The best you can do is to find the closest person to the actual decision maker and pitch your heart out.

Personally, I find this ladder-climbing the most difficult aspect of selling. But then I have to remember principle #2: It's my job. If I don't do it, no one will.

8. Sell the Appointment, not the Product

When are you at your best, selling-wise? In front of the person, with all your tools, demos, and snappy clothes, sharing fancy coffees.

When is it harder to say “no” to someone -- over the phone/email or in person? In person.

Most people suck at cold calling/emailing. While it is a sucky task, one big reason people fail is that they have the wrong objective. They think that as soon as they get the person's attention, it is time to pitch. By-the-power-of-Grayskull, no!!!

When you first encounter someone you need to pitch, your goal is to get a meeting where the person is relaxed, focused, and mentally prepared to listen to what you have to say. Your email or call may have interrupted their day between meetings, or baby bottles -- and they don't have the headspace to listen, never mind think. You will get a “no” at this stage. So give yourself every chance of success; book the meeting!

To get the meeting, you must be diligent about three things:

- Keep the conversation as short as possible.

- Tell just enough to whet their appetite. DO NOT tip your hand -- build the need/desire for the meeting.

- Keep steering them back to the appointment.

Granted, this one takes some practice -- but here is a quick example to get you started:

"Hi Mrs. Big Shot. I'm Thomas, and I am making a new kind of role-playing game that I think would be a great addition to your platform. Could I schedule just 15 minutes of your time to show you what I'm working on? I really think you will like what I have to show you."

"Role-playing game, eh? Dime a dozen, pal. What's so great about yours?"

"Well, I have some new A.I. techniques and combat mechanics that haven't been seen before. I’d love to meet with you to go over the key features of the game and even show you some gameplay. How about a quick meeting later this week?"

"Have you made anything before, or is this your first time?"

"I've released two games previously, but I would be more than happy to go over my qualifications and previous experience with you when we meet. Is next week better than this week?"

"I'm pretty busy this week, but schedule something next week with my assistant."

"Thank you Mrs. Big Shot! I look forward to meeting you!"

Why does this work? Because curiosity sells. Since you haven't yet given Mrs. Big Shot anything to reject, she is open and slightly curious to see if maybe, just maybe, you have the next big thing.

9. Inoculation

The ability to inoculate against objections is probably the single biggest gain a newbie salesperson can make. Eliminating objections is the key to closing the sale.

In real life, we get vaccinations to prevent disease. The process introduces a small, weakened version of the disease into your body, and your immune system builds a long-term defense against it. When the actual disease comes along, you are immune.

Inoculation (in sales) is the process by which a salesperson overcomes objections before they have a chance to build up and fester. The longer objections gestate in the customer's mind, the more firmly the "virus" takes hold. You inoculate by bringing up the objection yourself first, and then immediately answering it. If you bring up the objection first, the virus is in its weakest possible state -- and the customer becomes impervious to it.

So after you prepare your pitch -- whether it's website text or an email -- you have to look at it from the customer's perspective and see where your weaknesses are. Maybe get a friend to help you with this.

Let's imagine you've come up with three likely objections to your game:

A) You've never made a game before.

B) Your selected genre is oversaturated.

C) Your scope is aggressively large.

Before I go any further, let's reflect for a minute on how likely you are to close the deal with those three objections hanging in the customer's mind. Not very likely. Even if they haven't voiced them yet, just thinking them will torpedo your chance of success.

Now imagine all three of those objections have been inoculated against. It's clear sailing to closing the deal!

So here is an important principle: If someone raises an objection when you try to close, what they are really saying is that you haven't successfully pre-empted the objection by inoculating against it. Learn from this! Remember this objection for next time. Spend time thinking through possible ways to inoculate against it. The more chances you have to pitch, the more experience you will have with objections, and the more inoculations you can build into the next version of your pitch. Sales is a real-time strategy game! Prepare your defenses well!

Another principle to remember: Customers are not necessarily truthful and forthright. They may have objections but haven’t shared them with you. If they don't share them, you have no way to overcome them -- and your sale dies right then and there. Inoculation is the best defense against this.

Pro Tip: Don't save all your inoculations for the end of your pitch; it's too late then. Sprinkle them throughout the pitch; they are more easily digested one at a time. Early in the presentation, you should be inoculating against conceptual objections such as, "Is this even a good idea?" Later on in the presentation when you are about to go for the close, you need to address implementation objections such as, "Do you have a realistic team to build this?"

A further benefit of inoculation is that by bringing up your perceived weakness yourself, you gain credibility and show that you can think critically. This goes to character, and people generally want to work with credible people who can think critically.

So how can we inoculate against those three example objections?

A) You've never made a game before.

Address this early in the presentation, such as when you are sharing your background or how you came up with the concept of the game. Say something like, "Now, this is the first game I'm making myself. However, I have X industry experience doing Y. I also meet regularly with two mentors who have released several titles. When I don't know what to do, I lean on their experience."

B) Your selected genre is oversaturated.

Mid-presentation, once you show some screenshots or a demo, the genre will be obvious. You can say something like, "Now I know what you are thinking: Another First Person Cake Decorating game? And initially, when I was designing it, I felt the same way. But here is why I think our First Person Cake Decorator is unlike anything else in the market . . ."

C) Your scope is aggressively large.

Address objections like this late in the presentation, just before the close: "Now, I recognize that our scope seems too large for our small team. But team member X worked on such and such, and it had 3 times as many A.I. agents as our game. And we are open to discussing the scope with experienced developers. At the end of the day, we want to make something new and compelling for the genre, and we are looking for key partners like you to help us get there."

Pro Tip: When the customer asks for implementation details such as scheduling, resources, costs, or specific technologies, start getting excited. These are buying signals. The customer is rolling your proposal around in their mind, trying to imagine working with you. So make sure you answer all the questions truthfully and completely!

10. Leave Nothing to the Customer's Imagination

Since I was pitching custom software, I had nothing to show because it didn't exist yet. It's one thing to pitch a car or house that is right there in front of the customer. But to pitch an idea? And they have to agree to spend the money first before they see anything tangible? This is extremely difficult!

Now, I imagine that in the game space, the people you meet exercise their imaginations regularly. But in the business space, I can assure you that CFOs are NOT hired for their creative imaginations. If anything, they are hired for their lack of one.

So what do we do?

Do not rely on the customer's imagination to understand what you intend to do or build. Make it as concrete for them as possible. Words are cheap, so use props.

One reason my software company closed many deals despite being up against larger, more experienced competitors is the lengths we would go to in showing the customer how their eventual software might work. Our competitors would hand in four-page proposals; ours were 20-30 pages. We spent dozens of hours mocking up screens and writing out feature descriptions. Sometimes we would build a demo app and throw it on a handheld. All this so they could see, touch, and taste the potential software in the board room, and we could close the deal. Even if our software solution cost more and would take longer to complete, the customer would go with us because our presentation was more concrete. They could see success with us, whereas they would have to imagine success with the competitor.

In games, you can make a demo. But if that is too much, you can at least get an artist to make mock screens, get some royalty-free music that fits the theme, and then show footage from other games that inspire you.

Props beat words every day of the week.

Pro Tip: When up against competitors, you always want to present last. I've been in many "showcase showdowns" over the years where the customer hears presentations from 3 or 4 companies throughout a day. The reason you want to go last is that whatever presentations they saw before yours create "the bar," the standard of excellence. If you are better than that, it will be so obvious to them that half your work is already done. But what if you aren't way better than the competition? However amazing the first presentation may have been, it fades in the customer's memory. Perhaps by the fourth presentation, they have forgotten all the glitz of the first. It's sucky to lose that way, but remember: The decisions are emotionally charged and made by faulty humans rather than faultless computers. Go last, make the strongest last impression, and improve your chances of winning!

11. Work Hard! Earn it!

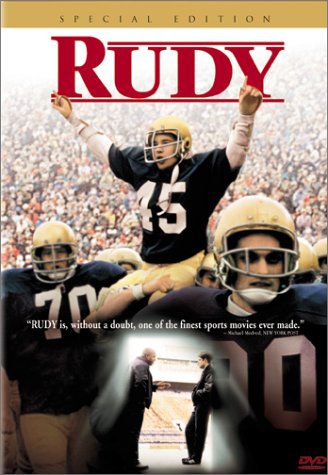

The movie Rudy is a great example of this principle. Based on a true story, it follows Rudy, who wants to play football for Notre Dame. Trouble is, he isn't big, fast, or particularly good at football. But he tries! Oh, how he tries! He practices more and with greater gusto than anyone else. Finally, at the end of the movie, Rudy is given the chance to play in a game. The crowd chants and the movie audience cries because it's all just so wonderful!

Almost all of the software deals I closed were bid on by multiple competitors. Canadians love the "3 quotes" principle. When I checked in with a client, waiting to hear that we had won the job, it would boggle my mind to hear that the decision was delayed because one of the competitors was late with their proposal. Are you kidding me?!

We delivered our proposals on time every time. That may have meant some late nights, but failure wasn't an option. And as previously mentioned, we always delivered more in our proposals than our competitors did.

Everyone likes to reward a Rudy because we all want to believe we can overcome our weaknesses and achieve our goals through hard work and dedication.

Working hard during your pitch says more about your character than anything else. It gives the customer the impression, "If they work hard here, they will work hard for the whole project.” The reverse is also true: "If they are lazy and late here, they will be lazy and late for the whole project." Again, talent isn't everything; who you are inside and how you work is.

I have personally awarded work to companies/contractors because they worked harder for it than the others, even though they weren't the best proposal I received.

Pro Tip: Be the best to work with. When I am in the process of pitching someone, I am "all hands on deck" for instant responses to questions or ideas from the customer. An impression is made not just by how you answer but by how quickly you answer. If customers send a question and get an email response within 12 minutes, they are impressed and know you are "earning it."

12. You Have to Ask for the Close

You miss 100% of the shots you don't take. – Wayne Gretzky

I'm not great at networking or cold calling. I've already shared that I'm not great at ladder climbing. But where I really shine is closing. Closing a deal is like winning a thousand Super Bowls all wrapped up into a single moment.

With a bow.

And sparklers.

I could write a whole article just on closing (and there are books dedicated to it), so I'll limit this section to the single most important and most often missed principle: You have to ask for the close.

I have seen great sales presentations fail because the presenter never asked for the deal. They talked and talked and said lots of wonderful things, but then just sat there at the end. What were they expecting? For the customer to jump out of their seat screaming, "I'll take it!"? Or maybe they thought it was a secret that they were there to make a sale, and they didn't want to blow their cover by actually saying, "So, will you choose us?"

If you don't ask for the close, you won't get the objections -- and if you don't get past the objections, you won't win. So ask for it!

Now for some specific techniques to help you.

First, be clear when you ask for the close. If you want an interview, say, "So do you think you can interview us?" If you want a meeting with someone, say, "So can we book a meeting for Tuesday?"

If asking that directly is a real struggle for you, try the pre-close to boost your confidence:

"So what do you think so far?"

That is not a close. That is a non-threatening temperature check. The customers are just sharing their thoughts, tipping their hand to tell you what they like and any immediate objections that come to mind. After you discuss their thoughts, you still have to circle back around to booking that interview or meeting.

Second, when you ask for the close, the next person who speaks loses. Silence is uncomfortable for most people, so this one requires real grit and determination. Many salespeople say something during the silence to try to help their case, but they achieve the opposite. Asking for the close is a pointed question that requires the customer to make a mental evaluation and then a decision. If you say anything while they are thinking it through, you will distract them, and the conversation will veer away from the close to something else: tertiary details, objections, etc.

I was in a meeting with a potential client when I had the unfortunate task of telling them their software would cost not $250k but $400k, and would take months longer. I explained why and then asked for the close: "This is what it costs and how long it takes to do what you want to do. It will work exactly as you want. Would you like to go ahead?"

They were visibly mad at the ballooned cost/time. I sat in silence for what felt like hours but was probably 3-4 minutes as the VP stared at the sheets I'd given him. Finally, he said "I don't like it, I'm not happy, but ok. But this date has to be the date -- and no later!" The silence put the burden of making a decision squarely on the VP, and he decided.

Third, expect objections. Even if you did all your inoculations correctly, they will raise something you never thought of. Hopefully you got the big ones out of the way, but I don't think I've ever been in a meeting where they just said, "Great presentation. Let's do it!"

Sometimes people bring up objections for emotional reasons: They just don't want to work with you. It's like the girl who won't go out with you because she has to wash her hair that night. There really is nothing you can do at that point. You've failed to build rapport or to show how you can meet their needs, and you won't recover from those blunders at the closing stage.

Real objections, on the other hand, are legitimate reasons preventing them from going with you. Get past those, and it's time for the sparklers!

It is critical to first get all the objections in one go. This is most easily done with a simple question, "Other than X, is there anything else preventing us from working together?" I'll show you why this is important in a moment.

If possible, write down every objection they give you. Most people get hung up on one or two. In my hundreds of meetings, I have never seen anyone list more than three objections to a pitch.

Now work through each objection in turn, treating every one seriously. Treat them as if it's the end of the world if they go unresolved, because it is! Before moving on to the next objection, ask, "Does what I just shared address your concern?" If they say yes, cross it off the list.

Pro Tip: You don't have to deal with the objections in the order they were raised. If there are some quick ones and then a hard one, get the quick ones out of the way first, build up momentum, turn the room temperature in your favor, and then go for the hard one. Handling them out of order also keeps you in complete control of the conversation, because they can't anticipate what is coming next.

Once you have dealt with each of the listed objections, say something like, "Well we've addressed A, B, and C. So now do you think we can work together?"

By gathering the list of objections first, you have achieved several things. First, you've shown that you listened to them, and listening and understanding alone can overcome much of an objection. Second, it creates a natural path back to the close! They set the agenda, and you dealt with it; there is nothing left to do but close! Finally, you prevent them from coming up with new objections. This is a psychological trick: you gave them every opportunity to list their objections earlier, and that time has now passed, so they look foolish if they start again. It's like how, past a certain point in a conversation, it's just too late to ask someone their name. If they raise new objections at this point, it looks like they are just stalling. Maybe that is what they are doing, because their objections were emotional ones.

These principles apply to writing as well, for example a website "squeeze" page designed to collect newsletter subscribers. You have to be clear and obvious about what you want: a newsletter signup. So make it clear and easy for them to sign up!

Pro Tip: When negotiating (which is just a sale in a different form), is it better to name a price first, or to let the other side go first? Conventional wisdom says to go last, which happens to be the wrong answer. According to the book Harvard Business Essentials: Negotiation, you should speak first. The person who speaks first frames the rest of the conversation and is more likely to steer it their way.

I saw the truth of this early on in my business. I went to downtown Toronto to meet with a client and negotiate the value of something. I sat down with the CFO, and he went on and on about how what he wanted wasn't very valuable to me. Then he said, "What do you think it's worth, Thomas?" I said $30,000, and he almost fell backwards out of his chair. He was thinking only $1,000-2,000. But since I went first, his paltry figure looked insulting and ridiculous. We ended up at $15,000: half of what I wanted, but 7.5x-15x more than he had in mind going in. Speaking first works.

Conclusion

Well, there you have it: roughly 12 years of sales experience boiled down to 12 principles.

Did I "close" you? Was this information helpful in improving your pitches? Use the comments to let me know!

SDG

You can follow the game I'm working on, Archmage Rises, by joining the newsletter and following the frequently updated Facebook page.

You can tweet me @LordYabo